A security professional must be proficient in all that pertains to an organization's system security strategy and the security assessments that need to be performed, including testing and audits. The proper implementation of controls, process audits, testing of pre-release versions, and test output analysis of applications are vital to the organization's security health.

For this reason, domain 6 of the CISSP certification covers security assessment and testing, which makes up 12% of the exam material. In this guide, we will explore everything you need to know to pass domain 6.

6.1 Design and validate assessment, test, and audit strategies

Purpose of security assessment and testing

Domain 6 primarily focuses on security assessment and testing architectures, assets, and systems. The information covered in previous domains underscored the importance of every control to address security from the perspective of the two pillars: functional and assurance.

Security assessment and testing focus specifically on assurance: demonstrating to stakeholders how security contributes to goals and objectives, and ensuring the right level of security is built into any architecture that gives value to the organization.

In other words, security assessment and testing ensure that security requirements and controls are defined, tested, and operating effectively. This applies to the development of new applications and systems as well as to ongoing operations, including end-of-life, related to assets.

Validation and verification

Validation is the process that begins before an application or product is built. From the start, requirements must be understood and documented correctly.

It answers one fundamental question: Are we building the right product?

Verification follows validation and is the process that confirms an application or product is being built correctly.

It asks a related and equally important question: Are we building the product correctly?

Both terms underscore the necessity and importance of early and ongoing testing, not simply testing after a product has been built.

Effort to invest in testing

The effort to invest in testing should be proportionate to the value the application or system represents to the organization. Value drives security, including the testing done to prove to stakeholders that security is contributing. Testing strategies flow from this value/security relationship.

Testing strategies

Testing strategies can be considered from three broad contexts: internal, external, and third party.

| Internal audit | External audit | Third-party audit |
|---|---|---|
| Testing conducted by somebody internal to the organization | Testing via either of two scenarios | Three parties are involved: for example, a customer, a vendor, and an independent audit firm |

Role of security professionals

The role of security professionals is:

- To identify risk

- To advise testing processes and ensure risks are appropriately evaluated

- To provide advice and support to stakeholders

6.2 Conduct security control testing

Testing overview

| Testing type | Description |
|---|---|
| Unit testing | Examines and tests individual components of an application. As specific aspects (units) of functionality are finished, they can be tested. |
| Interface testing | As more and more individual components are built and tested, interface testing can take place. Interfaces are standardized, defined ways that units connect and communicate with each other. Interface testing serves to verify that components connect properly. |
| Integration testing | Integration testing focuses on testing component groups (groups of software units) together. |
| System testing | System testing tests the integrated system (the whole system). |

Examples of testing performed

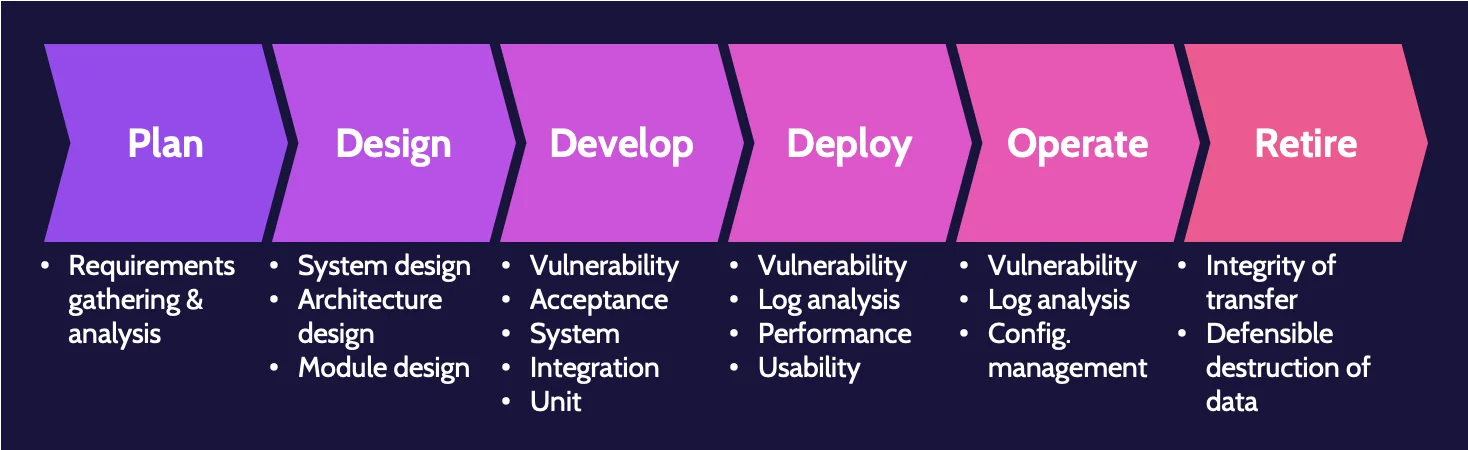

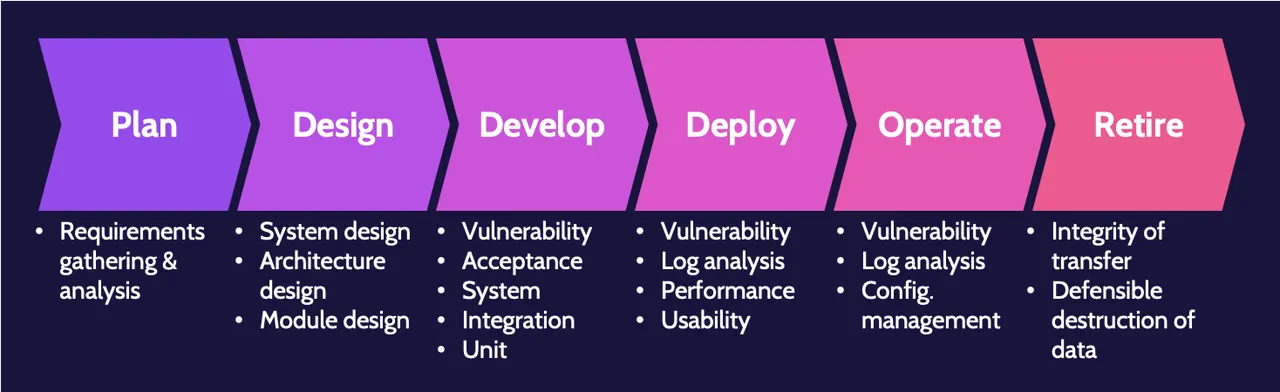

The following graphic provides a high-level overview of some of the required software testings throughout the system life cycle:

Keep in mind that this is not an official framework and shouldn't be memorized; it is meant to provide an overview and highlight that testing is required during every stage of a system's life cycle from the start and throughout.

Testing techniques

Methods and tools

Several testing techniques can and should be utilized when developing an application or other software, and they can be done via manual or automated means.

Manual testing means a person or team of people is performing the tests. They might be following a specific process, but they're actually sitting in front of a computer, looking at code, testing a form by entering input, and so on.

Automated testing means that test scripts and batch files are being automatically manipulated and executed by software. Thorough testing employs both approaches to produce many outcomes and achieve the best results.

Runtime

Runtime specifically refers to whether an application is running.

With Static Application Security Testing (SAST), an application is not running, and it's the underlying source code that is being examined. Static testing is a form of white box testing because the code is visible.
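
As a rough illustration of the static idea, the sketch below inspects source code without ever running it. It uses Python's standard-library `ast` module to flag calls to `eval` and `exec`. The function name `find_risky_calls` and the choice of which calls count as risky are assumptions made for this example, not the rule set of any real SAST tool.

```python
import ast

# Hypothetical rule set: call names this sketch treats as risky.
RISKY_CALLS = {"eval", "exec"}


def find_risky_calls(source: str) -> list[tuple[int, str]]:
    """Return (line number, function name) for each risky call found.

    The source is parsed, never executed, which is what makes this static.
    """
    findings = []
    for node in ast.walk(ast.parse(source)):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id in RISKY_CALLS
        ):
            findings.append((node.lineno, node.func.id))
    return sorted(findings)


# Sample code to examine; it is only parsed here, not run.
sample = "x = input()\nresult = eval(x)\nprint(result)\n"
print(find_risky_calls(sample))
```

Real static analysis tools apply far richer rules (data flow, taint tracking), but the principle is the same: the code is visible and examined at rest.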

With Dynamic Application Security Testing (DAST), an application is running, and the focus is on the application and system as the underlying code executes. As opposed to static testing, dynamic testing is a form of black box testing because the code is not visible. The entire focus is on the application and its behavior based upon inputs.

Fuzz testing is a form of dynamic testing. The entire premise behind fuzz testing is chaos. In other words, fuzz testing involves throwing randomness at an application to see how it responds and where it might "break." Fuzz testing is quite effective because application developers and programmers tend to be very logical people, and the software they develop tends to reflect this fact, so random, illogical input often exercises paths they never anticipated.
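
A minimal sketch of the idea, assuming a hypothetical function `parse_age` as the target: random strings are thrown at it, clean rejections (`ValueError`) are ignored, and any other exception is recorded as a crash. Production fuzzers are considerably smarter about generating input; this only shows the basic loop.

```python
import random
import string


def parse_age(text: str) -> int:
    """Hypothetical function under test: parse an age between 0 and 130."""
    value = int(text)  # raises ValueError on non-numeric input
    if not 0 <= value <= 130:
        raise ValueError("age out of range")
    return value


def fuzz(target, runs: int = 1000, seed: int = 0) -> list[tuple[str, Exception]]:
    """Feed random strings to target and collect unexpected exceptions.

    ValueError is treated as a graceful rejection; anything else is a crash.
    """
    rng = random.Random(seed)  # seeded so a failing input can be reproduced
    crashes = []
    for _ in range(runs):
        length = rng.randint(0, 20)
        payload = "".join(rng.choice(string.printable) for _ in range(length))
        try:
            target(payload)
        except ValueError:
            pass  # expected: the input was rejected cleanly
        except Exception as exc:  # unexpected failure mode
            crashes.append((payload, exc))
    return crashes


print(len(fuzz(parse_age)), "unexpected crashes")
```

Each recorded crash keeps the input that caused it, which gives developers a reproducible case to fix.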

Code review/access to source code

Code review and access to source code can be considered from two perspectives when testing:

- No access to the source code exists (also known as black box testing)

- Access to the source code does exist (also known as white box testing)

Both types of testing provide value and can be mixed and matched as needed to provide comprehensive results.

Test types

When a system is running, it's possible to test it as if a user were using it. Specifically, the system can be tested in a few different ways: positive testing, negative testing, or misuse testing.

| Test type | Focus |
|---|---|
| Positive testing | It focuses on the response of a system based on normal usage and expectations, checking if the system is working as expected and designed. |
| Negative testing | It focuses on the response of a system when normal errors are introduced. |
| Misuse testing | With this type of testing, the perspective of someone trying to break or attack the system is applied. If a system can be tested and understood from the standpoint of normal expected usage, it should also be understood from the viewpoint of somebody who wants to break into or otherwise abuse the system in order to gain further access. |
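
The three test types can be sketched against a single function. The `withdraw` function and the `rejects` helper below are hypothetical, written only for this illustration.

```python
def withdraw(balance: int, amount) -> int:
    """Hypothetical function under test: return the balance after a withdrawal."""
    if isinstance(amount, bool) or not isinstance(amount, int):
        raise TypeError("amount must be a whole number")
    if amount <= 0:
        raise ValueError("amount must be positive")
    if amount > balance:
        raise ValueError("insufficient funds")
    return balance - amount


def rejects(exc_type, func, *args) -> bool:
    """Return True if func(*args) raises exc_type."""
    try:
        func(*args)
    except exc_type:
        return True
    return False


# Positive test: normal usage produces the expected result.
assert withdraw(100, 30) == 70

# Negative test: an ordinary user error is rejected cleanly.
assert rejects(ValueError, withdraw, 100, 500)

# Misuse tests: inputs somebody might try in order to abuse the system.
assert rejects(ValueError, withdraw, 100, -50)       # negative amount would add funds
assert rejects(TypeError, withdraw, 100, "1; DROP")  # injection-style string
```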

Equivalence partitioning and boundary value analysis

These two testing techniques are designed to make testing more efficient.

Boundary value analysis requires first identifying where there are changes in behavior. Once the boundaries have been identified, testing can be focused on either side of the boundaries, as this is where there are most likely to be bugs.

Equivalence partitioning starts with the same first step of boundary value analysis – identifying the boundaries, and then goes a step further to identify partitions. Partitions are groups of inputs that exhibit the same behavior.
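
As a small worked example, assume a hypothetical pricing rule with boundaries at ages 13 and 65. Equivalence partitioning tests one representative value per partition, while boundary value analysis tests the values on either side of each boundary.

```python
def ticket_price(age: int) -> int:
    """Hypothetical pricing rule: under 13 pay 5, 13 to 64 pay 10, 65+ pay 7."""
    if age < 0:
        raise ValueError("age cannot be negative")
    if age < 13:
        return 5
    if age < 65:
        return 10
    return 7


# Equivalence partitioning: one representative input per partition.
partitions = {6: 5, 40: 10, 80: 7}

# Boundary value analysis: inputs on either side of each boundary.
boundaries = {0: 5, 12: 5, 13: 10, 64: 10, 65: 7}

for age, expected in {**partitions, **boundaries}.items():
    assert ticket_price(age) == expected, age
```

Eight inputs cover every behavior of the function, instead of testing every possible age.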

Test coverage analysis

Test coverage analysis, or simply "coverage analysis," refers to the relationship between the amount of source code in a given application and the percentage of code that has been covered by the completed tests.

Test coverage is a simple mathematical formula: test coverage (%) = (amount of code covered / total amount of code in the application) × 100.
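
The calculation can be sketched as follows. Counting code in lines is an assumption made here for simplicity; coverage can also be measured in statements, branches, or functions.

```python
def coverage_percent(lines_covered: int, total_lines: int) -> float:
    """Return test coverage as a percentage of total lines."""
    if total_lines <= 0:
        raise ValueError("total_lines must be positive")
    if not 0 <= lines_covered <= total_lines:
        raise ValueError("lines_covered must be between 0 and total_lines")
    return 100.0 * lines_covered / total_lines


# Example: tests exercise 850 of 1,000 lines.
print(coverage_percent(850, 1000))  # 85.0
```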

Vulnerability assessment and penetration testing

Purpose of vulnerability assessment

With any risk analysis, it is important to first know what assets exist. Next, the threats these assets face must be identified, which can happen through threat modeling. Two well-known and often-used threat modeling methodologies are STRIDE (Spoofing, Tampering, Repudiation, Information disclosure, Denial-of-Service, Elevation of privilege) and PASTA (Process for Attack Simulation and Threat Analysis). Finally, to understand the full breadth of risk that exists, vulnerabilities must also be identified.

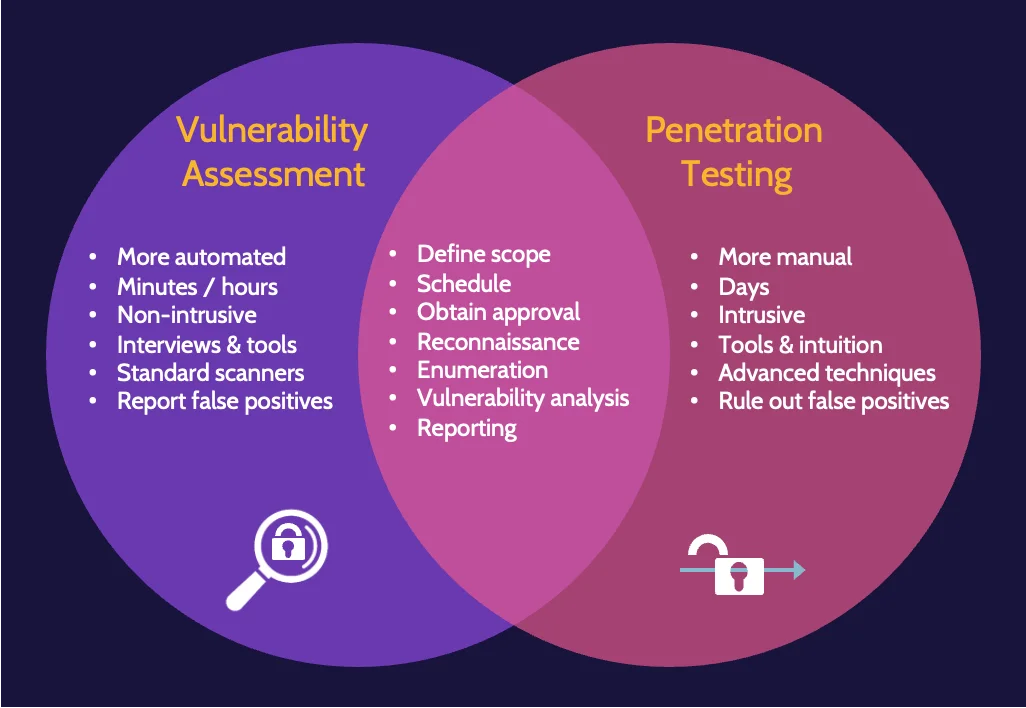

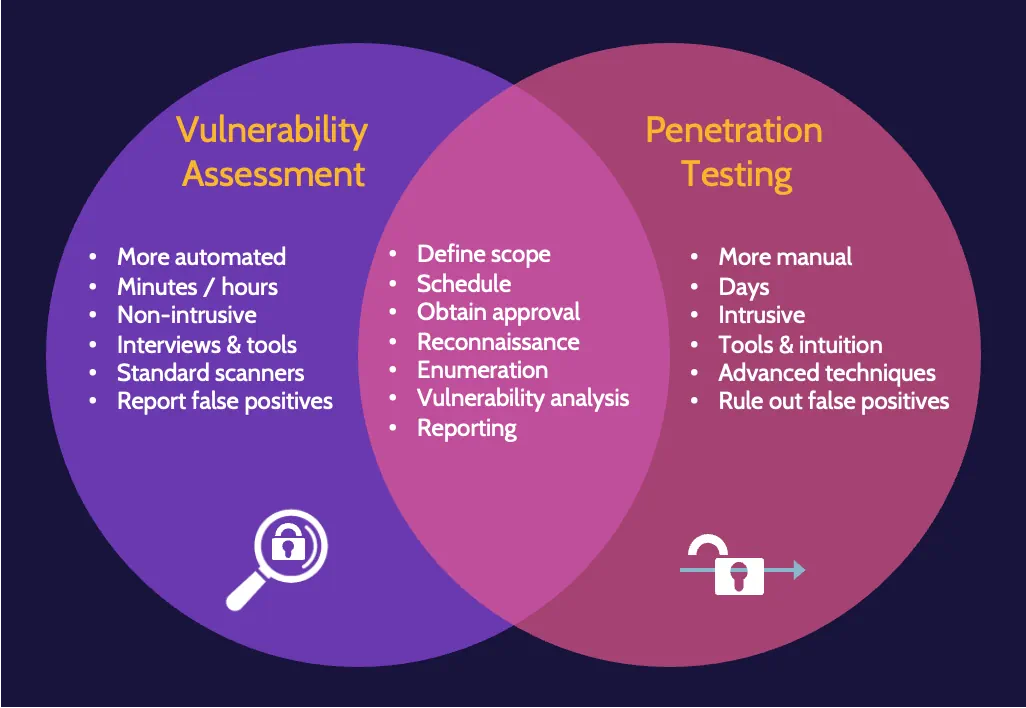

Vulnerability assessment versus penetration test

Both processes start the same way as they seek to identify potential vulnerabilities. However, with a vulnerability assessment, once vulnerabilities are noted, no further action is taken apart from producing a report of findings. A penetration test goes an essential step further: after identifying vulnerabilities, an attempt is made to exploit each vulnerability (breach attack simulations).

Vulnerability testing techniques tend to be automated and can be performed in minutes, hours, or a few days; penetration testing techniques tend to be manual and can take several days, depending on the complexity involved.

Testing stages include:

- Reconnaissance

- Enumeration

- Vulnerability analysis

- Exploitation

- Reporting

Testing techniques

Vulnerability assessments and penetration testing can be quite nuanced, and several variables come into play. These variables include perspective, approach, and knowledge.

Perspective

| Perspective | Description |
|---|---|
| Internal testing | The test is being performed from inside the corporate network. |
| External testing | Testing from an external perspective, where threats from outside the network can be considered and tested. |

Approach

| Approach | Description |
|---|---|
| Blind testing | The assessor is given little to no information about the target being tested. |
| Double-blind testing | A double-blind approach goes one step further. In addition to the assessor being given little to no information about the target company, its IT and Security Operations teams will not know of any upcoming tests. |

Knowledge

| Knowledge | Description |
|---|---|
| Zero knowledge (black box) | The assessor has zero knowledge, the same as the blind approach noted above. |
| Partial knowledge (gray box) | The assessor is given information about the target network but not the whole set that a white box test would have. It lies somewhere in between a white and black box test. |
| Full knowledge (white box) | The assessor is given full knowledge (including items like IP addresses/range, network diagrams, information about critical systems, and perhaps even password policies). |

Vulnerability management

At a high level, an effective vulnerability management process should include the following:

- An understanding of all assets in an organization, which requires an accurate asset inventory.

- Identifying the value of each asset in the inventory.

- Identifying the vulnerabilities for each asset and remediation of identified vulnerabilities.

- Ongoing review and assessment of all steps.

Vulnerability scanning

Automated vulnerability scanning can help identify vulnerabilities from an organizational perspective as well as from the perspective of an attacker.

There are two primary types of vulnerability scans: credentialed (authenticated) scans and uncredentialed (unauthenticated) scans.

Banner grabbing and OS fingerprinting

Banner grabbing is a process used to identify a system's operating system, applications, and versions.

Fingerprinting works to identify the unique characteristics of a system through an examination of how packets and other system-level information are formed.
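
A minimal sketch of banner grabbing, assuming a service that announces itself on connect (as SSH, FTP, and SMTP commonly do). The helper names `grab_banner` and `parse_ssh_banner` are illustrative, and the banner string parsed at the end is a sample value, not output from a real host. The parser assumes the SSH identification string layout `SSH-protoversion-softwareversion comments`.

```python
import socket


def grab_banner(host: str, port: int, timeout: float = 3.0) -> str:
    """Connect to host:port and return whatever the service sends first.

    Only run this against systems you are authorized to test.
    """
    with socket.create_connection((host, port), timeout=timeout) as conn:
        data = conn.recv(1024)
    return data.decode("ascii", errors="replace").strip()


def parse_ssh_banner(banner: str) -> dict[str, str]:
    """Split an SSH identification string into protocol, software, and comment."""
    if not banner.startswith("SSH-"):
        raise ValueError("not an SSH banner")
    _, protocol, rest = banner.split("-", 2)
    software, _, comment = rest.partition(" ")
    return {"protocol": protocol, "software": software, "comment": comment}


# Sample banner string used for illustration only.
print(parse_ssh_banner("SSH-2.0-OpenSSH_8.9p1 Ubuntu-3ubuntu0.1"))
```

In practice, the two would be combined, for example `parse_ssh_banner(grab_banner(host, 22))` for an authorized target. The version information is what lets an assessor look up known vulnerabilities for that software.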

Interpreting and understanding results

Closely coupled with identifying and reporting results from activities like vulnerability scanning, banner grabbing, and fingerprinting is identifying the severity and exact nature of the results. This is done using two tools: CVE and CVSS:

- CVE, the Common Vulnerabilities and Exposures dictionary, is "a list of records—each containing an identification number, a description, and at least one public reference—for publicly known cybersecurity vulnerabilities."

- CVSS, known as Common Vulnerability Scoring System, reflects a method to characterize a vulnerability through a scoring system considering various characteristics.
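
To show how a CVSS score is typically interpreted, the sketch below maps a base score to the qualitative severity ratings used by CVSS v3.x (None, Low, Medium, High, Critical) and sorts findings so the most severe come first. The finding names and scores are made up for illustration.

```python
def cvss_severity(score: float) -> str:
    """Map a CVSS v3.x base score (0.0 to 10.0) to its qualitative rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError("CVSS scores range from 0.0 to 10.0")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


# Hypothetical scan findings: (name, CVSS base score).
findings = [("finding-a", 5.3), ("finding-b", 9.8), ("finding-c", 3.1)]

# Highest score first, so remediation effort goes to the worst issues.
for name, score in sorted(findings, key=lambda f: f[1], reverse=True):
    print(f"{name}: {score} ({cvss_severity(score)})")
```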

False positives and false negatives

With any type of monitoring system, two types of alerts often show up:

- False positives: the system claims a vulnerability exists, but there is none.

- False negatives: the system says everything is fine, but a vulnerability exists. False negatives are the more dangerous of the two because a real vulnerability goes unnoticed and unremediated.
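
The distinction can be sketched with simple set operations, comparing what a scanner reported against what actually exists. The vulnerability labels are hypothetical, and in practice the "actual" set is only known after manual verification.

```python
def classify_findings(reported: set[str], actual: set[str]) -> dict[str, set[str]]:
    """Compare scanner output with verified vulnerabilities."""
    return {
        "true_positives": reported & actual,   # reported and real
        "false_positives": reported - actual,  # reported but not real
        "false_negatives": actual - reported,  # real but missed
    }


# Hypothetical data for illustration.
reported = {"weak-tls", "open-telnet", "outdated-cms"}
actual = {"weak-tls", "open-telnet", "default-password"}

result = classify_findings(reported, actual)
print("False positives:", result["false_positives"])
print("False negatives:", result["false_negatives"])
```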

Log review and analysis

Log review and analysis is a best practice that should be used in every organization. Logs should include what is relevant, be proactively reviewed, and be especially scrutinized for errors and anomalies that point to problems, modifications, or breaches.

Log event time

Synchronized log event times are critical for activity correlation, especially if a breach occurs; NTP is typically used for purposes of time synchronization.

If an organization has deployed multiple servers and other network devices—like switches and firewalls—and each device is generating events that are logged, it's critical that the system time, and therefore the event log time, for each device is the same. Consistent time stamps mean all system and device clocks are set to the exact same time, which is done by using Network Time Protocol (NTP).
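
The effect of unsynchronized clocks on correlation can be sketched as follows. The events and the 40-second clock error are invented for illustration: the firewall's clock runs fast, so its event appears to happen after a login that it actually preceded.

```python
from datetime import datetime, timedelta

# Hypothetical events: (device, timestamp as logged, message).
events = [
    ("firewall", datetime(2024, 1, 1, 12, 0, 30), "connection allowed"),
    ("server", datetime(2024, 1, 1, 12, 0, 10), "login succeeded"),
    ("server", datetime(2024, 1, 1, 12, 0, 50), "file copied"),
]

# Assumed clock error per device: the firewall clock runs 40 seconds fast.
clock_error = {"firewall": timedelta(seconds=40), "server": timedelta(0)}


def timeline(events, clock_error):
    """Return messages ordered by true time after removing each clock's error."""
    corrected = [(ts - clock_error[dev], dev, msg) for dev, ts, msg in events]
    return [msg for _, _, msg in sorted(corrected)]


# Ordering by the timestamps as logged gives a misleading sequence.
as_logged = [msg for _, _, msg in sorted(events, key=lambda e: e[1])]
print(as_logged)

# Ordering by corrected time shows the connection came before the login.
print(timeline(events, clock_error))
```

With NTP keeping every clock aligned, no such correction is needed, and the logged order can be trusted during an investigation.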

Log data generation

Log data is generated by virtually every system operating in an organization. Logging and monitoring processes go deeper and include:

- Generation

- Transmission

- Collection

- Normalization

- Analysis

- Retention

- Disposal

Limiting log sizes

Log file management is essential in any organization, especially regarding sizes. Two log file management methods are used: circular overwrite and clipping levels.

Circular overwrite limits the maximum size of a log file by overwriting entries, starting from the earliest.

Clipping levels focus on when to log a given event based upon threshold settings, and log file sizes are limited as a result.
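
Both methods can be sketched in a few lines. The `ClippingLogger` class, the log size of 3, and the threshold of 3 are assumptions chosen for this example.

```python
from collections import Counter, deque

# Circular overwrite: a fixed-size log that drops the oldest entry when full.
log = deque(maxlen=3)
for entry in ["e1", "e2", "e3", "e4"]:
    log.append(entry)
print(list(log))  # e1, the earliest entry, has been overwritten


class ClippingLogger:
    """Log an event only once it has occurred more than `threshold` times."""

    def __init__(self, threshold: int):
        self.threshold = threshold
        self.counts = Counter()
        self.entries = []

    def record(self, event: str) -> None:
        self.counts[event] += 1
        if self.counts[event] > self.threshold:
            self.entries.append(f"{event} (occurrence {self.counts[event]})")


# Clipping level: the first three failed logins are treated as normal noise.
logger = ClippingLogger(threshold=3)
for _ in range(5):
    logger.record("failed login")
print(logger.entries)
```

Circular overwrite caps size at the cost of losing the oldest history, while a clipping level caps size by never writing routine events in the first place.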

Operational testing—synthetic transactions and RUM

Operational testing is performed when a system is in operation. Within the context of operational testing, two testing techniques are often employed:

- Real User Monitoring (RUM) is a passive monitoring technique that monitors user interactions and activity with a website or application.

- Synthetic performance monitoring examines functionality as well as performance under load. Test scripts for each type of functionality can be created and then run at any time.

For accuracy, the best environment in which to perform synthetic testing is production.
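
A synthetic transaction can be sketched as a scripted action that is run on demand and checked for both correctness and response time. The function names and the one-second limit below are assumptions, and `scripted_login` is a stand-in for a script that would exercise the real application.

```python
import time
from typing import Callable


def run_synthetic_check(name: str, transaction: Callable[[], bool], max_seconds: float) -> dict:
    """Run one scripted transaction and report whether it worked in time."""
    start = time.perf_counter()
    try:
        ok = bool(transaction())
    except Exception:
        ok = False  # a crash counts as a failed transaction
    elapsed = time.perf_counter() - start
    return {"name": name, "passed": ok and elapsed <= max_seconds, "seconds": elapsed}


def scripted_login() -> bool:
    """Stand-in for a scripted user action; a real script would call the application."""
    return True


print(run_synthetic_check("login", scripted_login, max_seconds=1.0))
```

Because the script does not depend on real users being present, it can be scheduled at any time, including outside business hours.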

Regression testing

Regression testing is the process of verifying that previously tested and functional software still works after updates have been made. It should be performed after enhancements have been made or after patches to address vulnerabilities or problems have been issued.

Compliance checks

With security control testing in mind, compliance checking is an integral and ongoing part of the security control testing process. Compliance can be confirmed by reviewing and analyzing implemented controls and their output to confirm alignment with documented security requirements.

Furthermore, compliance checking can confirm that testing and controls are aligned with organizational policies, standards, procedures, and baselines, and it can effectively put a bow on all the activities mentioned above.

6.3 Collect security process data

Key risk and performance indicators

Key risk and performance indicators help inform goal setting, action planning, performance, and review, among other things.

Key risk indicators (KRI) are forward-looking indicators and aid risk-related decision-making.

Key performance indicators (KPI) are backward-looking indicators and look at historical data for purposes of evaluating if performance targets were achieved.

The SMART metrics are as follows:

- Specific. Result clearly stated and easy to understand?

- Measurable. Result can be measured/have the data?

- Achievable. Results can drive desired outcomes?

- Relevant. Aligned to business strategy?

- Timely. Results available when needed?

How do you decide what to focus metrics on?

Organizations make decisions on which metrics to focus on based on their business goals and objectives, denoting what's most important to the organization. For example:

- Account management

- Management review and approval

- Backup verification

- Training and awareness

- Disaster Recovery (DR) and Business Continuity (BC)

6.4 Analyze test output and generate report

Test output

The results of security assessments and testing should include steps related to remediation, exception handling, and ethical disclosure.

| Output | Description |
|---|---|
| Remediation | Based upon assessments and testing, remediation steps for all identified vulnerabilities should be documented. |
| Exception handling | If an identified vulnerability is not remediated, this should be documented too, including the reason why that's the case. Regardless of the reason, exceptions should be carefully considered and noted. |
| Ethical disclosure | Some identified vulnerabilities might be new discoveries and point to significant flaws and weaknesses in widely used software and hardware. In this context and for the sake of other users, customers, and vendors, newly discovered vulnerabilities must be shared to the extent necessary to protect anybody who may be exposed to the same vulnerabilities. |

6.5 Conduct or facilitate security audits

Audit process

- Define the audit objective

- Define the audit scope

- Conduct the audit

- Refine the audit process

To the last point, a typical audit process might include:

- Determining audit goals

- Involving the right business unit leader(s)

- Determining the audit scope

- Choosing the audit team

- Planning the audit

- Conducting the audit

- Documenting the audit results

- Communicating the results

System and Organization Controls (SOC) reports

Over the years, audit standards have evolved and matured from SAS 70 → SSAE 16 → SSAE 18.

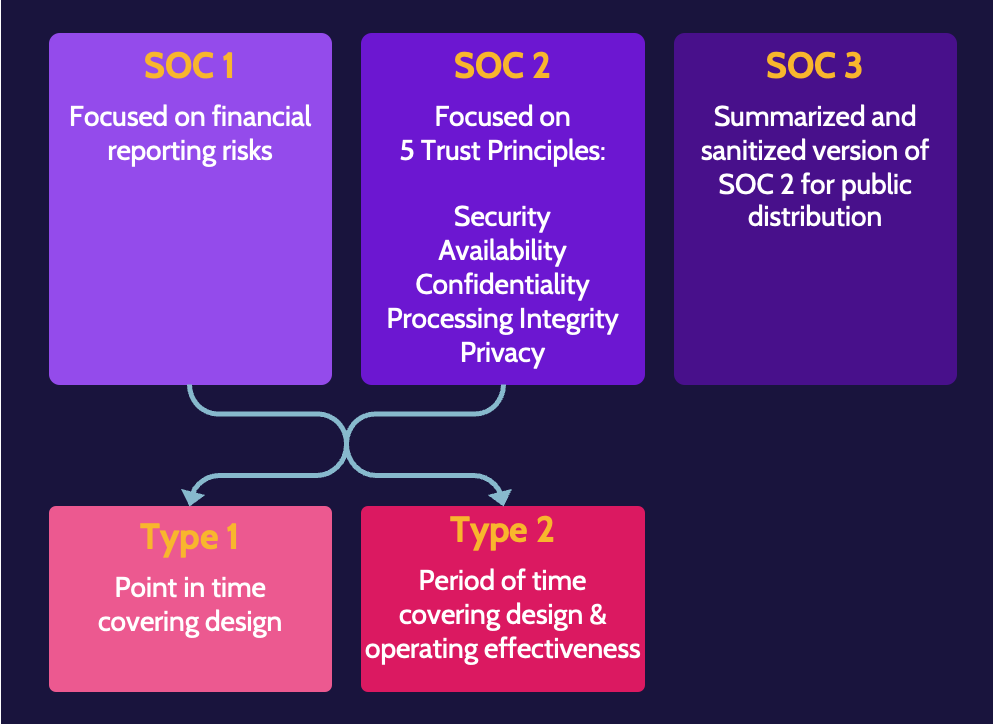

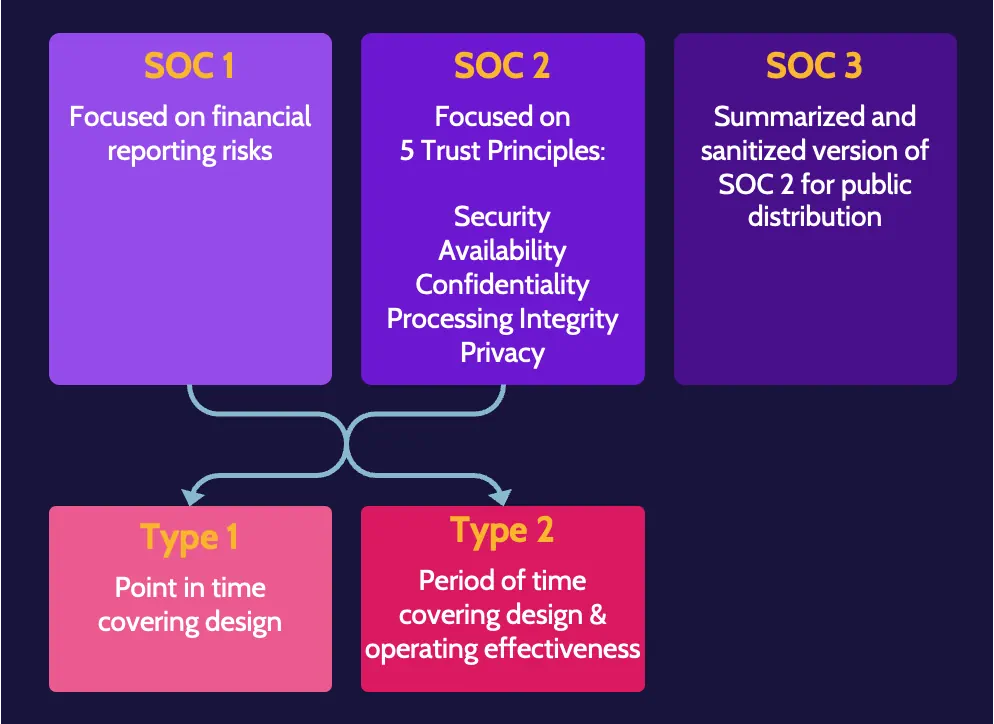

The SSAE 18 standard defines three types of System and Organization Controls (SOC) reports:

- SOC 1 reports are quite basic and focus on financial reporting risks.

- SOC 2 reports are much more involved and focus on the controls related to the five trust principles: security, availability, confidentiality, processing integrity, and privacy.

- SOC 3 reports are stripped-down versions of SOC 2 reports—typically used for marketing purposes.

There are also two types of SOC 1 and SOC 2 reports:

- A Type 1 report focuses on the design of controls at a point in time.

- A Type 2 report examines not only the design of a control but, more importantly, the operating effectiveness over a period of time, typically a year.

From the types of reports defined, it should be clear that SOC 2 Type 2 reports are the most desirable for security professionals, as they report on the operating effectiveness of a service provider's security controls over a period of time.

Audit roles and responsibilities

Audit roles include executive (senior) management, audit committee, security officer, compliance manager, internal auditors, and external auditors.
