Domain 3 of the CISSP certification exam is called Security Architecture and Engineering. It contains the concepts, principles, structures, and standards used to design, implement, monitor, and secure various architectures such as systems, applications, operating systems, equipment, networks, and those controls used to enforce multiple appropriate levels of security.

The study of this domain is of the utmost importance since there's a vast amount of information to grasp and understand properly. Here's a complete guide to approaching Domain 3: Security Architecture and Engineering—which comprises 13% of the total marks—and what you need to know to pass the exam.

3.1 Research, implement, and manage engineering processes using secure design principles

Security must be involved in every phase of designing and building a product or system, from beginning to end. To see why, it helps to understand the meaning of the domain's title.

The word architecture implies many components that work together to allow that architecture to be used for its intended purposes.

If we add the word security, that would include security policies, knowledge, and experience that must be applied to protect this architecture to the level of value relating to the individual components and the overall architecture.

This is what is meant by the term security architecture.

And finally, the word engineering commonly points to designing a solution by walking through a series of steps and phases to put the components together so they can work in harmony as an architecture.

Determining appropriate security controls

Regardless of the framework, model, or methodology used, the risk management process should be used to identify the most valuable assets and the risks to those assets, and to determine appropriate, cost-effective security controls to protect them.

Some secure design principles are:

- Threat modeling

- Least privilege

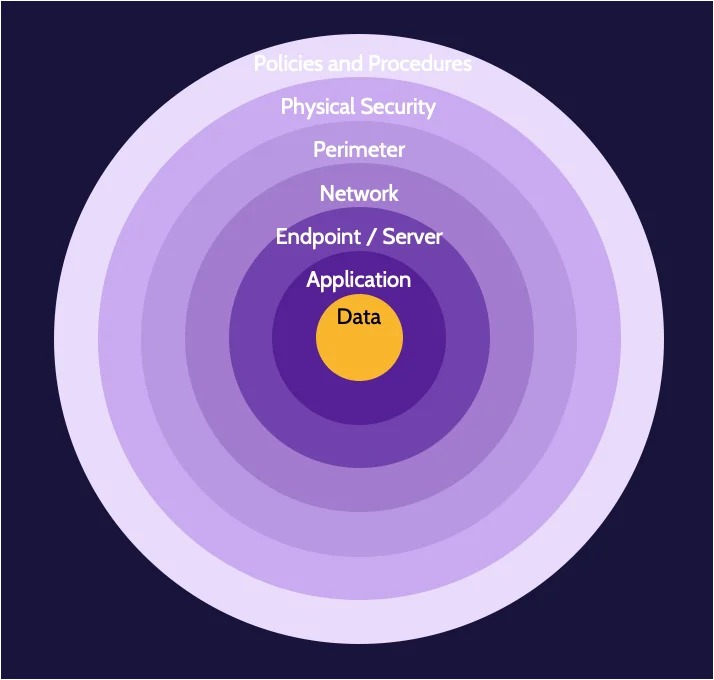

- Defense in depth

- Secure defaults

- Fail securely

- Separation of duties

- Keep it simple

- Zero trust

- Trust but verify

- Privacy by Design

- Shared responsibility

Secure defaults

Any default settings a system ships with should be as secure as possible, so that a system left in its default state does not make compromise easier.
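
As a minimal sketch of secure defaults, the hypothetical configuration class below makes the restrictive choice the default for every setting and refuses to start without an explicitly chosen administrator password. The class, field, and function names are illustrative assumptions, not taken from any specific product.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ServiceConfig:
    """Hypothetical service settings; every default is the restrictive choice."""
    require_tls: bool = True               # encrypted transport unless explicitly disabled
    allow_anonymous: bool = False          # no unauthenticated access by default
    debug_endpoints: bool = False          # diagnostic features stay off
    admin_password: Optional[str] = None   # no built-in default credential


def validate(config: ServiceConfig) -> None:
    # Refuse to run without an operator-chosen password instead of
    # falling back to a well-known default credential.
    if not config.admin_password:
        raise ValueError("admin_password must be set explicitly")
```

An operator who does nothing gets the safest behavior; weakening a setting requires a deliberate, visible change.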

Fail securely

If a system or its components fail, they should do so in a manner that doesn't expose the system to a potential attack.
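
One way to picture failing securely is an access check that denies the request whenever the check itself breaks. The sketch below assumes a hypothetical `policy_lookup` callable; both example lookups are invented for illustration.

```python
def is_allowed(user, resource, policy_lookup):
    """Return True only when the policy explicitly grants access.

    Any error while evaluating the policy results in a denial (fail closed).
    """
    try:
        return policy_lookup(user, resource) is True
    except Exception:
        # The policy engine failed: deny rather than expose the resource.
        return False


def simple_lookup(user, resource):
    # Hypothetical policy: only alice may read the payroll resource.
    return (user, resource) == ("alice", "payroll")


def broken_lookup(user, resource):
    # Hypothetical policy backend that is currently unavailable.
    raise ConnectionError("policy service unreachable")
```

With `broken_lookup`, even a normally authorized user is denied, which is inconvenient but does not expose the system while the failure lasts.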

Keep it simple

Remove as much complexity from a situation as possible and focus on what matters most.

Zero trust

Zero trust is based on the premise that organizations should not automatically trust anything, whether it is internal or external to their perimeter. Instead, every user and system must be authenticated and authorized before being granted access.

Trust but verify

Trust but verify means being able to authenticate users and perform authorization based on their permissions to perform activities on the network so they can access the various resources.

It also means that real-time monitoring is a requirement. In short, focus on employing complete controls that include better detection and response mechanisms.

Privacy by design

Privacy by design is premised on the belief that privacy should be incorporated into networked systems and technologies by default and designed into the architecture.

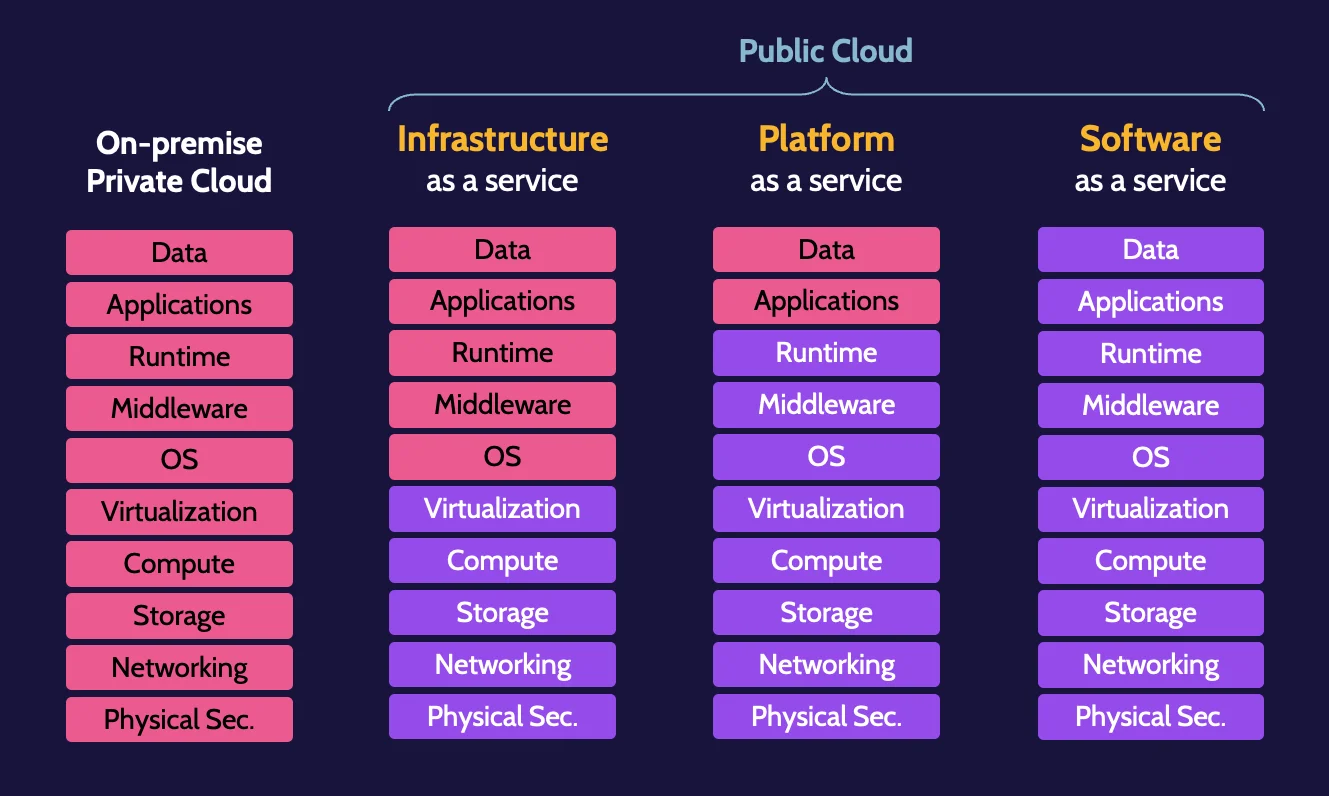

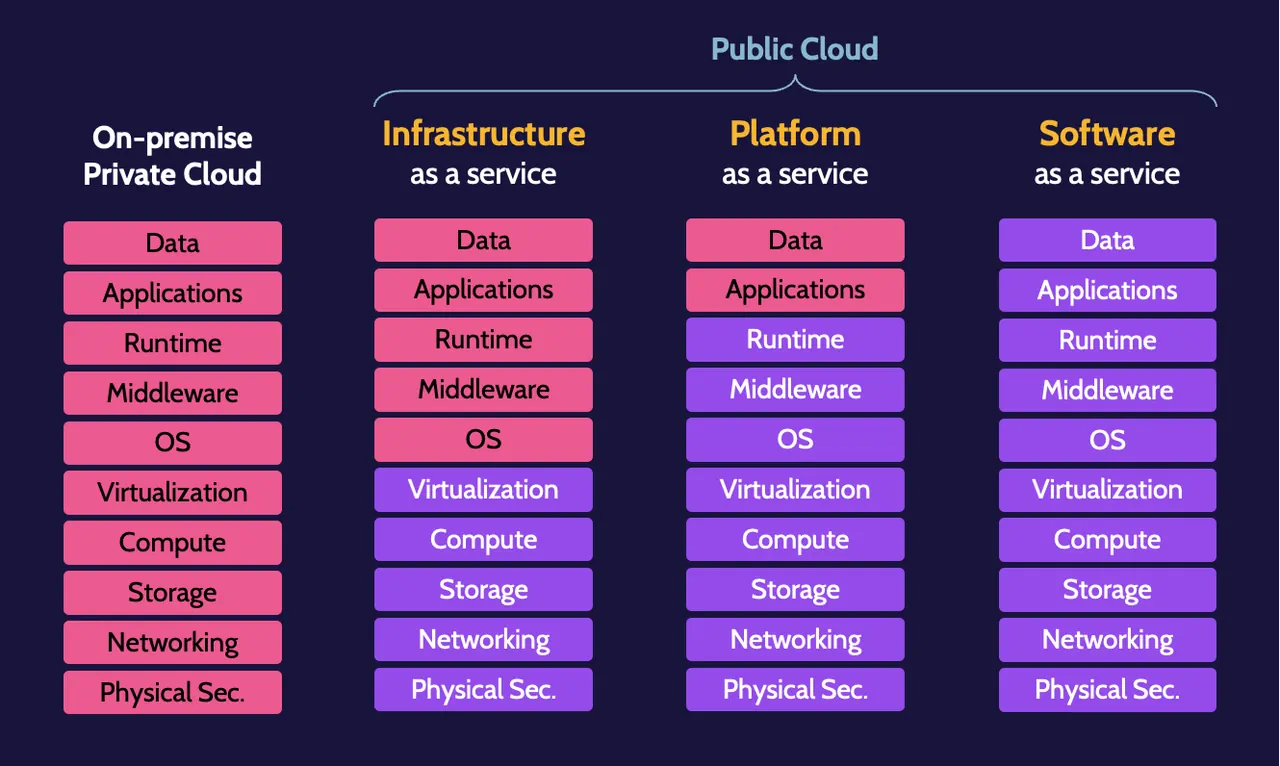

Shared responsibility

Because of increased reliance on third-party services, a corresponding increase in clarity on shared security expectations should exist. The cloud customer and service provider must clearly communicate expectations both ways and define related responsibilities.

To this end, consumers and providers must act on these responsibilities and define clear contracts and agreements.

3.2 Understand the fundamental concepts of security models (e.g., Biba, Star Model, Bell–LaPadula)

What is a model?

A security model represents what security should look like in an architecture being built. Security models have existed and have been used for years. Some of these models include Bell–LaPadula, Biba, Clark–Wilson, and Brewer–Nash (also referred to as the Chinese Wall model).

It's valuable to understand these models and their underlying rules as they govern the implementation of the model.

Concept of security

To ensure the protection of any architecture, it must be broken down into individual components, and adequate security for each component needs to be put in place.

Any system should be broken down and individual components secured to the degree that value dictates doing so.

Enterprise security architecture

Security architecture involves breaking down a system into its components and protecting each component based on its value.

Three of the most popular enterprise security architectures are:

- Zachman

- Sherwood Applied Business Security Architecture (SABSA)

- The Open Group Architecture Framework (TOGAF)

Security models

Security models are rules that need to be implemented to achieve security. Many security models exist, but most of them are one of two types: lattice-based or rule-based.

A good way to envision a lattice-based model is to think of a ladder with a framework and steps that look like layers going up and down. In other words, a lattice-based model is a layer-based model. It requires layers of security to address the requirements.

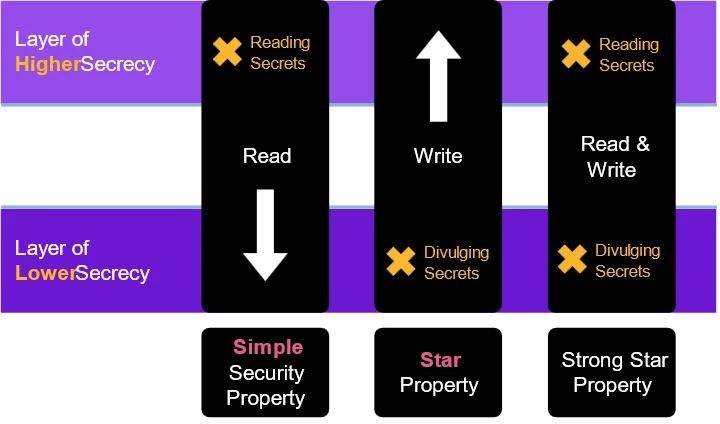

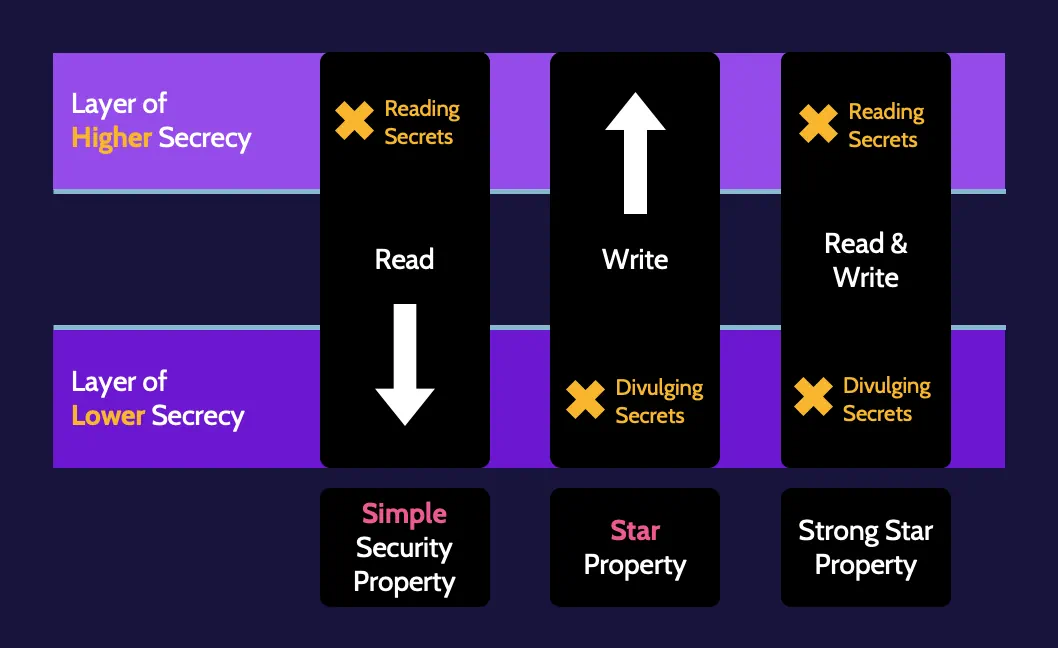

Two lattice-based models exist: Bell–LaPadula and Biba. Bell–LaPadula addresses one primary component of the CIA triad: confidentiality. Biba addresses another component: integrity.

All other models are rule-based, meaning specific rules dictate how security operates.

The following table provides a summary of the various lattice-based and rule-based security models:

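| Layer / Lattice-based models | Rule-based models |
|---|---|
| Bell–LaPadula (confidentiality) | Clark–Wilson |
| Biba (integrity) | Brewer–Nash (the Chinese Wall) |
| Lipner implementation (combines Bell–LaPadula and Biba) | Graham–Denning |
| | Harrison–Ruzzo–Ullman |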

Layer-based models

Lattice-based security models, like Bell–LaPadula and Biba, can also be thought of as layer-based security models.

Bell–LaPadula

Bell–LaPadula is based on incorporating the necessary rules that need to be implemented to achieve confidentiality.

Biba

Biba focuses on ensuring data integrity.
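
The text above does not spell out the rules of either model. As a hedged sketch, the code below assumes the commonly taught formulation: Bell–LaPadula enforces "no read up" (simple security property) and "no write down" (star property), while Biba mirrors them with "no read down" and "no write up". Levels are plain integers here (higher means more sensitive or more trustworthy), and the function names are illustrative.

```python
def blp_can_read(subject_level: int, object_level: int) -> bool:
    # Bell-LaPadula simple security property: no read up.
    return subject_level >= object_level


def blp_can_write(subject_level: int, object_level: int) -> bool:
    # Bell-LaPadula star property: no write down.
    return subject_level <= object_level


def biba_can_read(subject_level: int, object_level: int) -> bool:
    # Biba simple integrity property: no read down.
    return subject_level <= object_level


def biba_can_write(subject_level: int, object_level: int) -> bool:
    # Biba star integrity property: no write up.
    return subject_level >= object_level
```

For example, a subject cleared at level 2 may read a level 1 document under Bell–LaPadula but may not write to it, because that could leak level 2 information downward.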

Lipner implementation

What happens if you want to have both confidentiality and integrity? The Lipner implementation is simply an attempt to combine the best features of Bell–LaPadula and Biba regarding confidentiality and integrity. As such, it is not truly a model but rather an implementation of two models.

Rule-based models

Depending on the model, the number and complexity of the rules employed may vary widely, and the model's focus can also change. In addition to looking at some specific rule-based models further below, a basic understanding of information flow models and covert channels should first be covered.

Information flow

If the flow of information can be tracked, it can be tracked throughout its life cycle: from the point of origin, whether collected or created, through its storage, use, dissemination, and sharing with others, to its eventual end of life (e.g., archival and destruction). Information flow can also help identify vulnerabilities and insecurities, like covert channels, and serves as the basis for both Bell–LaPadula and Biba.

Covert channels

Covert channels are unintentional communication paths that may lead to the disclosure of confidential information. An example of a storage covert channel exists in most technology architectures. On a laptop, sensitive information could be placed in RAM because a process needs to be used, but when that process finishes, the sensitive data remains in memory. That could become available to other processes that are placed in memory and can read it.

Covert channels are also called secret channels. Two types exist, as summarized in the following table:

| Storage | Timing |
|---|---|
| The process writes sensitive data to RAM, and the data remains present after the process completes; now, other processes can potentially read the data. | An online web server responds to a user providing an existing username within three seconds, while it takes one second if the username doesn't exist. That allows the attacker to perform username enumeration. |
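
The timing example can be narrowed by making the server do the same work whether or not the username exists. The sketch below is one possible approach under stated assumptions: the in-memory `USERS` store, the dummy record, and the iteration count are all hypothetical, while `hashlib.pbkdf2_hmac` and `hmac.compare_digest` are standard-library calls.

```python
import hashlib
import hmac
import os

ITERATIONS = 100_000  # illustrative work factor, not a recommendation


def hash_password(password: str, salt: bytes) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)


# Hypothetical in-memory user store: username -> (salt, password hash).
_SALT = os.urandom(16)
USERS = {"alice": (_SALT, hash_password("correct horse", _SALT))}

# Dummy record so that unknown usernames cost the same hashing work.
_DUMMY_SALT = os.urandom(16)
_DUMMY_HASH = hash_password("dummy", _DUMMY_SALT)


def login(username: str, password: str) -> bool:
    salt, stored = USERS.get(username, (_DUMMY_SALT, _DUMMY_HASH))
    candidate = hash_password(password, salt)
    # compare_digest avoids leaking where the two values first differ.
    matches = hmac.compare_digest(candidate, stored)
    return matches and username in USERS
```

Both branches hash once and compare once, so response time no longer depends as directly on whether the account exists. This reduces the channel rather than proving it is gone.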

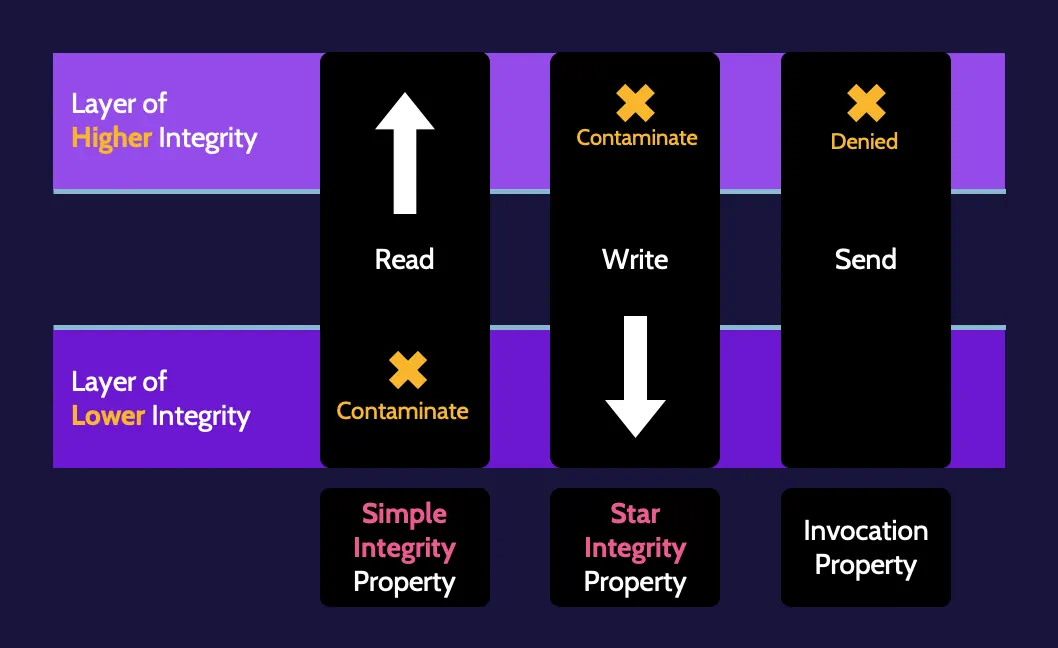

Clark–Wilson

Clark–Wilson is an important rule-based model focusing only on integrity. Unlike Biba, which only prevents unauthorized subjects from making any changes, Clark–Wilson offers further protection and meets three goals of integrity:

- Prevent unauthorized subjects from making any changes (this is the only one of the three that Biba addresses)

- Prevent authorized subjects from making bad changes

- Maintain consistency of the system

Biba only addresses #1 and therefore falls short of truly addressing security concerns related to protecting all integrity, while Clark–Wilson addresses #1 and then further protects integrity through #2 and #3.

Clark–Wilson achieves each of the goals specifically through the application of the three rules of integrity noted here:

| Well-formed transactions | Separation of duties | Access triple |
|---|---|---|
| Good, consistent, validated data. Only perform operations that won't compromise the integrity of objects. | One person shouldn't be allowed to perform all tasks related to a critical function. | Subject, program, object: a subject can reach an object only through an approved program. |
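
The access triple and well-formed transactions can be sketched as follows. This is a toy illustration: the subjects, programs, and ledger object are hypothetical, and a real implementation would also enforce separation of duties across people.

```python
# Hypothetical access triples: (subject, program, object).
ALLOWED_TRIPLES = {
    ("clerk", "post_payment", "ledger"),
    ("auditor", "read_report", "ledger"),
}


def post_payment(ledger, amount):
    # Well-formed transaction: reject input that would corrupt the ledger.
    if amount <= 0:
        raise ValueError("amount must be positive")
    ledger["balance"] += amount
    return ledger["balance"]


def read_report(ledger):
    # Return a copy so the caller cannot modify the object directly.
    return dict(ledger)


PROGRAMS = {"post_payment": post_payment, "read_report": read_report}


def run(subject, program, obj_name, objects, *args):
    # Subjects never touch objects directly; they go through a program,
    # and only if the (subject, program, object) triple is approved.
    if (subject, program, obj_name) not in ALLOWED_TRIPLES:
        raise PermissionError(f"{subject} may not run {program} on {obj_name}")
    return PROGRAMS[program](objects[obj_name], *args)
```

The triple check covers unauthorized subjects, while the validation inside `post_payment` keeps an authorized subject from making a bad change.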

Brewer–Nash (The Chinese Wall) model

Brewer–Nash is also known as "The Chinese Wall" model and has one primary goal: Preventing conflicts of interest.

An example of where Brewer–Nash might be implemented is between the Development and Production departments in an organization, as the two departments should not be able to influence each other or even allow access between each other.
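
A small sketch of the conflict-of-interest rule follows. It uses the classic consulting scenario rather than the departmental example above: once a subject has accessed one company's data, other companies in the same conflict class are blocked. The dataset names and conflict classes are hypothetical.

```python
# Hypothetical mapping of datasets to conflict-of-interest classes.
CONFLICT_CLASS = {"bank_a": "banking", "bank_b": "banking", "oil_x": "energy"}


class ChineseWall:
    def __init__(self):
        self.history = {}  # subject -> set of datasets already accessed

    def can_access(self, subject, dataset):
        wanted_class = CONFLICT_CLASS[dataset]
        for seen in self.history.get(subject, set()):
            # Block a different dataset from the same conflict class.
            if seen != dataset and CONFLICT_CLASS[seen] == wanted_class:
                return False
        return True

    def access(self, subject, dataset):
        if not self.can_access(subject, dataset):
            return False
        self.history.setdefault(subject, set()).add(dataset)
        return True
```

Unlike a static access list, the decision depends on the subject's access history, so the "wall" is built dynamically as the subject works.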

Graham–Denning model

Graham–Denning is another lesser-known, rule-based model that specifies rules allowing a subject to access an object.

Harrison–Ruzzo–Ullman Model

Like Graham–Denning, Harrison–Ruzzo–Ullman is also a rule-based model that focuses on the integrity of access rights via a finite set of rules available to edit a subject's access rights. It adds the ability to add generic rights to groups of individuals.
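
Both models are usually described in terms of an access control matrix and a small set of commands that change it. The sketch below is a simplified illustration under that assumption; the `own` right, the `grant` command, and the class names are hypothetical and do not reproduce the exact rule sets of either model.

```python
class AccessMatrix:
    def __init__(self):
        self._rights = {}  # (subject, object) -> set of rights

    def enter(self, right, subject, obj):
        self._rights.setdefault((subject, obj), set()).add(right)

    def delete(self, right, subject, obj):
        self._rights.get((subject, obj), set()).discard(right)

    def has(self, right, subject, obj):
        return right in self._rights.get((subject, obj), set())


def grant(matrix, owner, right, target, obj):
    """Command: a right on obj may be granted only by a subject holding 'own'."""
    if not matrix.has("own", owner, obj):
        return False
    matrix.enter(right, target, obj)
    return True
```

The point of restricting changes to a finite set of commands is that the access rights themselves are protected, not just the objects they refer to.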

Certification and accreditation

Certification is the comprehensive technical analysis of a solution to confirm it meets the desired needs.

Accreditation is management's official sign-off of certification for a predetermined period.

When architectures—especially security architectures—are built, products are often purchased from vendors. Security today often relies on solutions and mechanisms provided by vendors. This fact introduces a potential problem: How do we know vendor solutions actually provide the level of security we think they provide?

Any vendor is going to say they have the best products, the best solutions, and the best architectures. For example, if a firewall needs to be purchased, every firewall vendor will say their firewall is the best one available and will meet our needs perfectly. How can statements like this be confirmed and verified? We would need an independent and objective measurement system against which vendor products can be evaluated before purchasing. Such a system could be used by any organization around the globe to make purchasing decisions without needing to rely on the vendors themselves.

These evaluations, these measurements, could be trusted because they've been created using an independent, vendor-neutral, objective system. These measurement systems do, in fact, exist and are called evaluation criteria systems.

The most well-known evaluation criteria systems are:

- Trusted Computer System Evaluation Criteria (TCSEC)—also known as the Orange Book

- The European equivalent of TCSEC called Information Technology Security Evaluation Criteria (ITSEC)

- An ISO standard, called the Common Criteria.

Evaluation criteria (ITSEC and TCSEC)

Orange Book/Trusted Computer System Evaluation Criteria (TCSEC)

The first evaluation criteria system created is often referred to as the Orange Book because the cover of the book is orange. It was written as part of a series of books known as the "rainbow series," published by the US Department of Defense in the 1980s. Each book in the series deals with a topic related to security, and the cover of each is a different color, thus the nickname "rainbow series."

The classification levels—the criteria—used in the Orange Book are:

- A1. Verified design

- B3. Security labels, verification of no covert channels, and must stay secure during start up

- B2. Security labels and verification of no covert channels

- B1. Security labels

- C2. Strict login procedures

- C1. Weak protection mechanisms

- D1. Failed or was not tested

The Orange Book only measures confidentiality. Although many people now consider it obsolete, if you're interested only in confidentiality, there's still no better system than TCSEC.

In addition, it only measures single-box architectures; it does not map well to networked environments. This is why many European organizations considered the model from a more current perspective and revamped it. They took what they thought to be a good idea and made it better in what is known as the Information Technology Security Evaluation Criteria (ITSEC).

Information Technology Security Evaluation Criteria (ITSEC)

ITSEC measures more than confidentiality and works well in a network environment. Also, when ITSEC was created, ways to measure function and assurance separate from each other were incorporated.

When a product is considered through the lens of ITSEC, two ratings are given. One rating—the "F" levels—is a functional rating, like the ones used in the Orange Book. The other rating—the "E" levels—was introduced as part of ITSEC and refers to levels of assurance. E levels range from E0 to E6, as shown in the following list:

- E6. Formal end-to-end security tests + source code reviews

- E5. Semiformal system + unit tests and source code review

- E4. Semiformal system + unit tests

- E3. Informal system + unit tests

- E2. Informal system tests

- E1. System in development

- E0. Inadequate assurance

Common Criteria replaced ITSEC in 2005.

Common Criteria

ISO 15408, better known as the Common Criteria, is the most widely used of the evaluation criteria systems; most products are evaluated using it.

As such, it's critical to understand Common Criteria components, Evaluation Assurance Levels (EAL), and the ramifications if changes to an EAL-rated system take place.

Like the other evaluation systems, the Common Criteria provides confidence in the industry for consumers, security functions, vendors, and others. The Common Criteria is the latest measurement system, and it's also an ISO standard (ISO 15408). It's called the Common Criteria because several countries joined together with a common goal: to create a common measurement system that could be trusted globally.

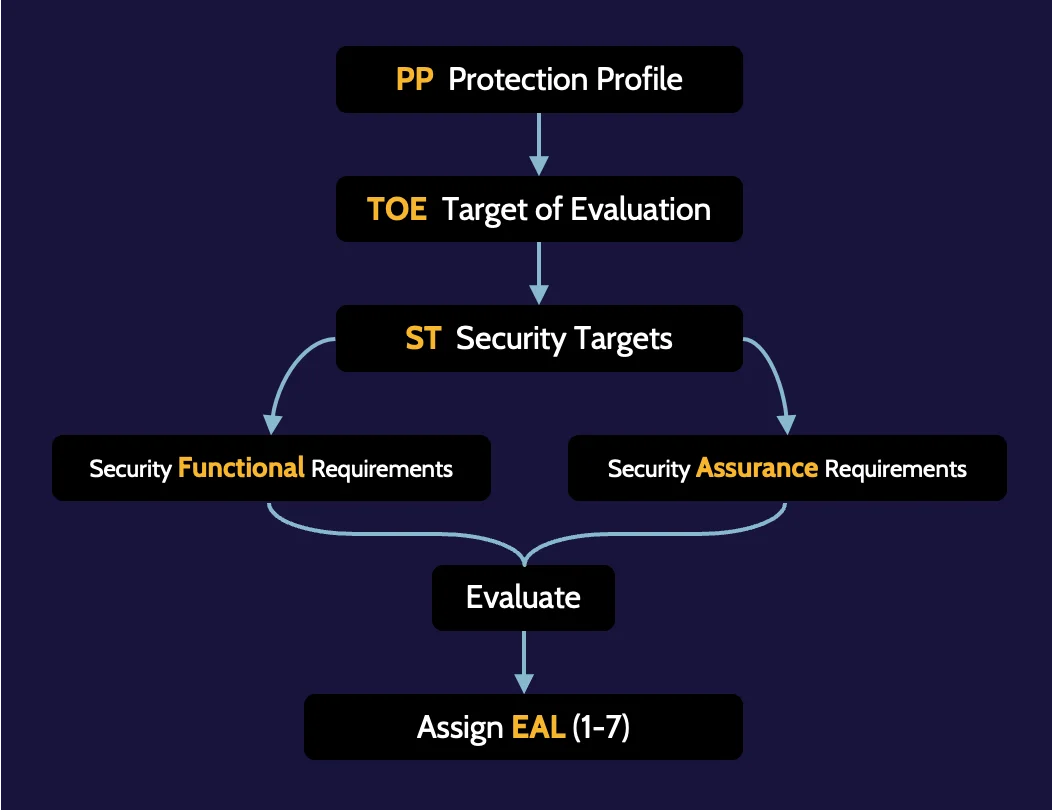

Common Criteria process

The first component is the Protection Profile (PP). The PP lists the security capabilities that a type or category of security products should possess. For example, there's a Protection Profile for firewalls; it lists the security capabilities that any firewall should contain—for example, two-factor authentication (2FA) capabilities, VPN capabilities, ability to encrypt to 128-bit encryption level, and secure logging, to name a few.

Target of Evaluation (TOE) is the next component. Using the earlier firewall example, if a vendor desires their firewall to be rated according to the Common Criteria, the firewall would be considered the TOE.

The next component, the Security Target (ST), describes, from the vendor's perspective, each of the firewall's security capabilities that match up with capabilities outlined in the Protection Profile. When the firewall is measured, capabilities like VPN, encryption, two-factor authentication, secure logging, and so on will be compared against standards listed in the Protection Profile and tested extensively. For example, the firewall may perform two-factor authentication very well but lack strong VPN capabilities.

EAL levels

Despite the table below illustrating multiple EAL levels, EAL7 is not necessarily the best for the sake of a vendor marketing and selling its product. In fact, most organizations will not purchase a product rated above EAL4. Operating systems are typically at EAL3, and firewalls at EAL4.

| EAL | Description |
|---|---|
| EAL7 | Formally verified design and tested |
| EAL6 | Semiformally verified design and tested |
| EAL5 | Semiformally designed and tested |
| EAL4 | Methodically designed, tested, and reviewed |
| EAL3 | Methodically tested and checked |
| EAL2 | Structurally tested |
| EAL1 | Functionally tested |

If a product is at EAL7, it could become more vulnerable to compromise due to being more complex and harder to maintain. Yes, the product might offer more security features and capabilities, but consumers will likely not use them if they require extensive configuration, administrative skills, and maintenance. This could ultimately leave an organization at greater risk.

Vendors, therefore, must balance the trade-off between functionality and security. Too much of the latter often impacts product speed and administrative overhead, which might also lead to the creation of an expensive product.

A final thing to note is that after a product undergoes an evaluation and is assigned an EAL level, the EAL level for that product will remain the same throughout its lifespan unless a major change in product functionality is introduced. In other words, when a patch or software update to the product is made, the EAL level remains unchanged.

3.3 Select controls based on systems security requirements

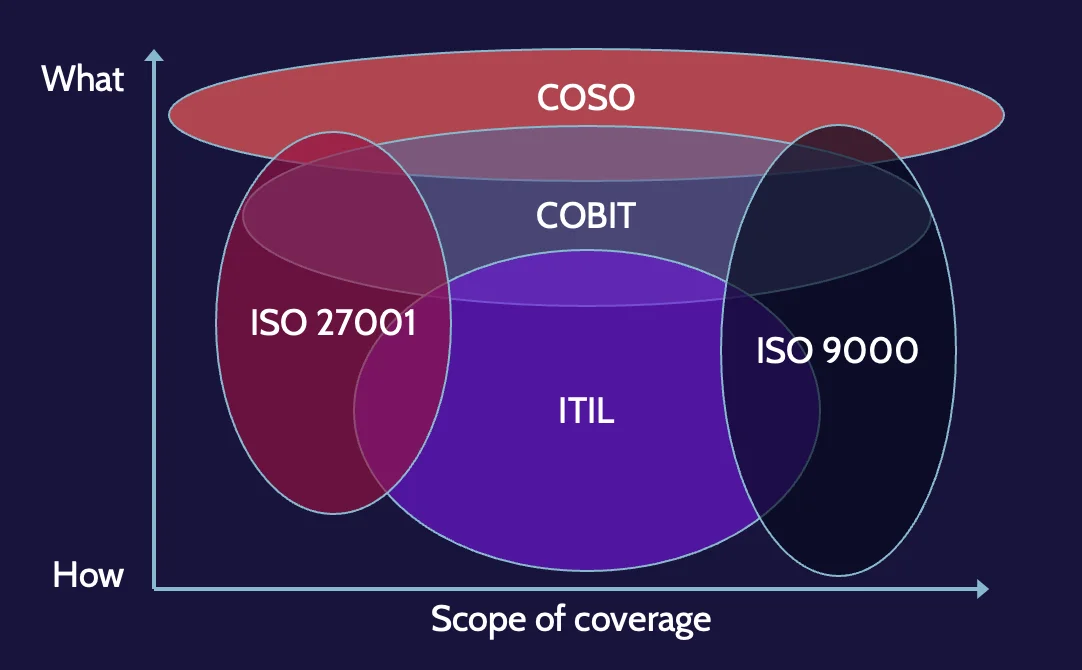

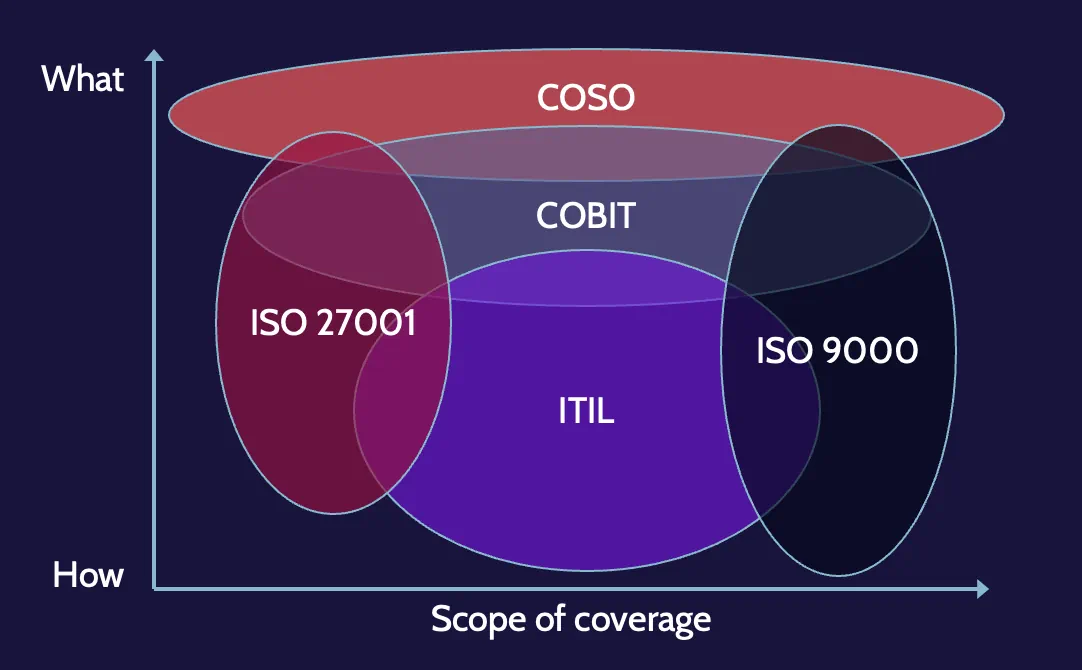

Security control frameworks aid the control selection process by providing guidance based on best practices.

Additionally, as control frameworks offer guidance, the best and most applicable elements of multiple frameworks could potentially be utilized as a part of the control selection process:

- COBIT

- ITIL

- NIST SP 800-53

- PCI DSS

- ISO 27001

- ISO 27002

- COSO

- HIPAA

- FISMA

- FedRAMP

- SOX

Rationalizing frameworks

The following figure shows how all these different security frameworks relate to one another:

Notice that they overlap, which means that frameworks can span contexts, and as also noted earlier, organizations will often choose to use features from multiple frameworks to meet their needs.

3.4 Understand security capabilities of information systems (IS)

RMC, security kernel, and TCB

Subjects and objects

Before diving into concepts like the RMC and security kernel, it's important to understand subjects and objects, as those concepts are heavily used throughout the following section.

Subject | Object |

|---|---|

Active entities | Passive entities |

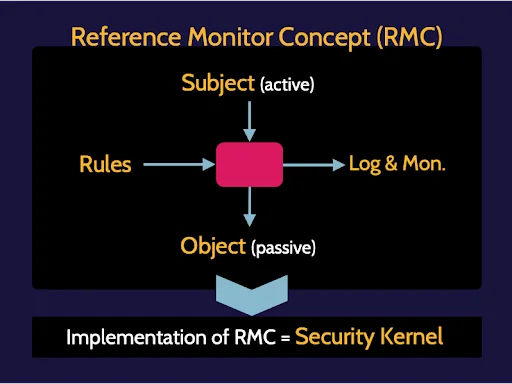

Reference Monitor Concept (RMC)

The RMC is simply the concept of a subject accessing an object through some form of mediation that is based on a set of rules, with this access being logged and monitored. This is the reference monitor concept, which is prevalent throughout security and is a topic often seen on the exam.

To be effective, the reference monitor must:

- Mediate all access

- Be protected from modification

- Be verifiable as correct

- Always be invoked
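
The concept can be sketched as a mediator that every request must pass through, with each decision logged. This is a toy illustration only: the class names and rule format are assumptions, and plain Python cannot truly guarantee that mediation is impossible to bypass or that the rules are tamperproof.

```python
class ReferenceMonitor:
    """Toy reference monitor: every access request goes through `request`."""

    def __init__(self, rules):
        # frozenset: the rule set cannot be edited after creation,
        # a rough stand-in for protection from modification.
        self._rules = frozenset(rules)
        self.audit_log = []

    def request(self, subject, action, obj):
        allowed = (subject, action, obj) in self._rules
        # Log every decision, allowed or denied, so behavior can be verified.
        self.audit_log.append((subject, action, obj, allowed))
        return allowed


class GuardedStore:
    """Hypothetical object store reachable only through the monitor."""

    def __init__(self, monitor, data):
        self._monitor = monitor
        self._data = dict(data)

    def read(self, subject, name):
        if not self._monitor.request(subject, "read", name):
            raise PermissionError(f"{subject} may not read {name}")
        return self._data[name]
```

Here the subject is the caller, the object is the stored item, and the monitor sits between them on every read.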

Security kernel

It's important to remember that an implementation of the reference monitor concept is known as a security kernel. The RMC itself is only a concept; any system that actually controls access must implement it, and that implementation is the security kernel.

Any time a security kernel is implemented, it should demonstrate the three characteristics or properties of the RMC: completeness, isolation, and verifiability, as shown in the following table:

Completeness | Isolation | Verifiability |

|---|---|---|

Impossible to bypass mediation; impossible to bypass the security kernel | Mediation rules are tamperproof | Logging and monitoring, and other forms of testing to ensure the security kernel is functioning correctly |

Trusted Computing Base (TCB)

The Trusted Computing Base (TCB) encompasses all the security controls that would be implemented to protect an architecture. It's the totality of protection mechanisms within an architecture. Examples of components that would be within the TCB include all the hardware, firmware, and software processes that make up the security system.

It's worth highlighting that all the components noted below are found in the TCB:

- Processors (CPUs)

- Memory

- Primary storage

- Secondary storage

- Virtual memory

- Firmware

- Operating systems

- System kernel

Processors (CPUs)

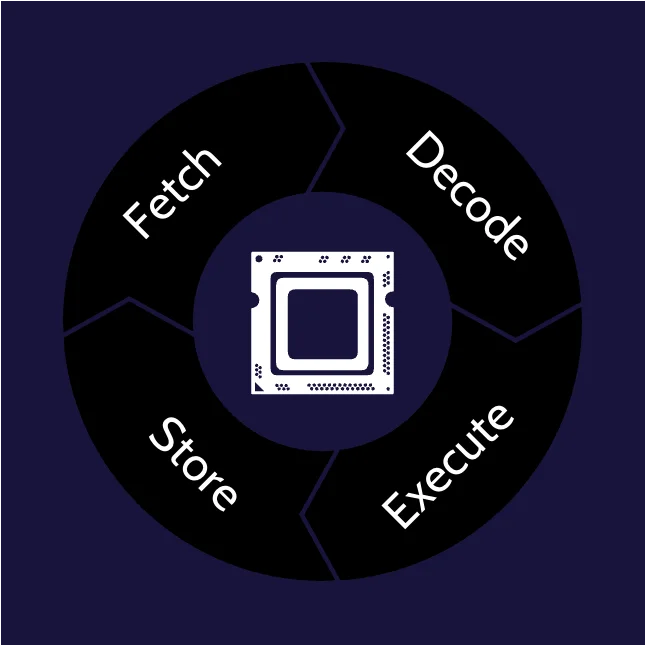

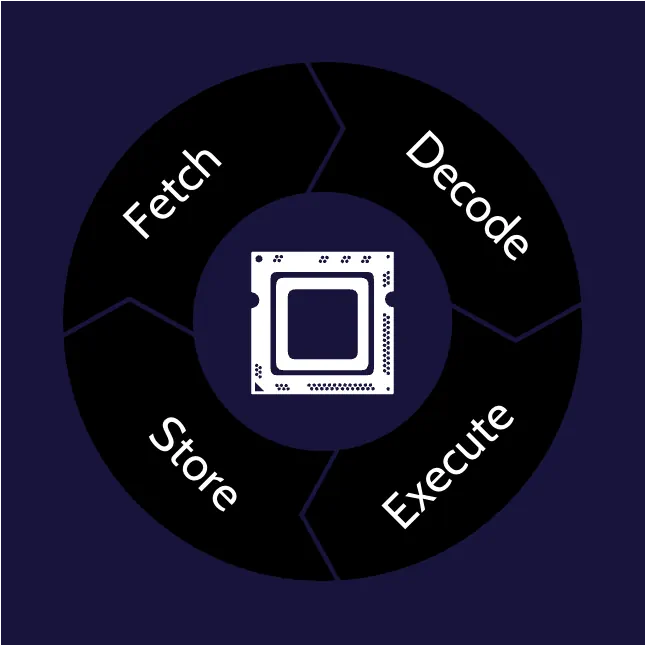

A central processing unit (CPU) is the brain of a computer; it processes all of the instructions and ultimately solves problems. A CPU constantly iterates through this four-step process:

- Fetching instructions and data

- Decoding instructions

- Executing instructions

- Storing results

Processor states

From a security perspective, CPUs operate in one of two processor states: the supervisor state or the problem state. These states can also be thought of as privilege levels and are simply operating modes for the processor that restrict the operations that can be performed by certain processes.

Process isolation

From a security perspective, process isolation is a critical element of computing, as it prevents processes from interacting with each other and with each other's resources. It is often accomplished using either of the two following methods:

- Time-division multiplexing. Time-division multiplexing relates more to the CPU, which determines how processes are isolated. When multiple applications are running, multiple accompanying processes are also running, and the CPU allocates very small slots of time to each process in turn.

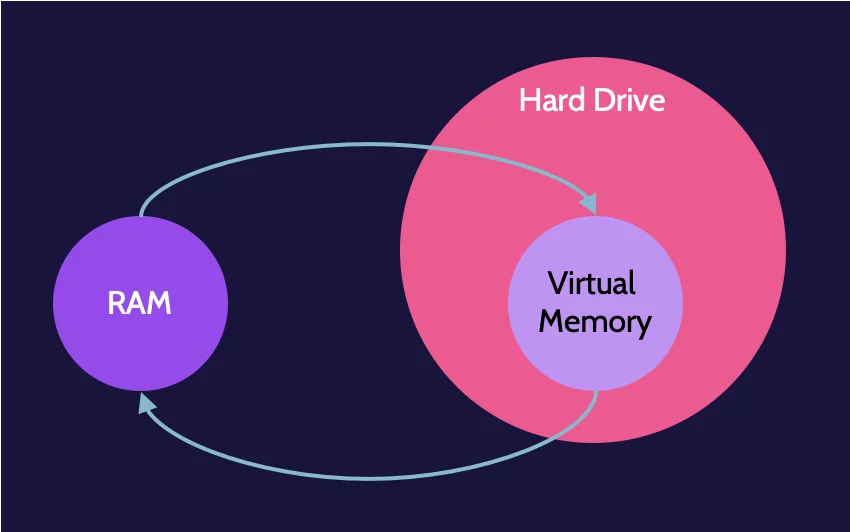

- Memory segmentation. Memory segmentation is all about separating memory segments from each other to protect the contents, including processes that may be running in those segments. In many cases, it relates more to random-access memory (RAM), the high-speed volatile storage area found in computer systems. Memory segmentation ensures that the memory assigned to one application is only accessible by that application.

Types of storage

Storage is where data in a computer system can be found. At a high level, two main types of storage exist: primary and secondary storage.

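| Primary Storage | Secondary Storage |
|---|---|
| Fast, volatile storage directly accessible by the CPU (e.g., registers, cache, RAM) | Slower, non-volatile storage that persists without power (e.g., hard drives, SSDs, optical media) |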

Another related concept concerns what happens when RAM fills up because many applications are running at the same time. Data related to each program and running process is loaded into RAM, and if RAM fills up, the system will eventually crash. A way to mitigate this is what's known as paging, or virtual memory, in which data that is not actively needed is moved from RAM to secondary storage.

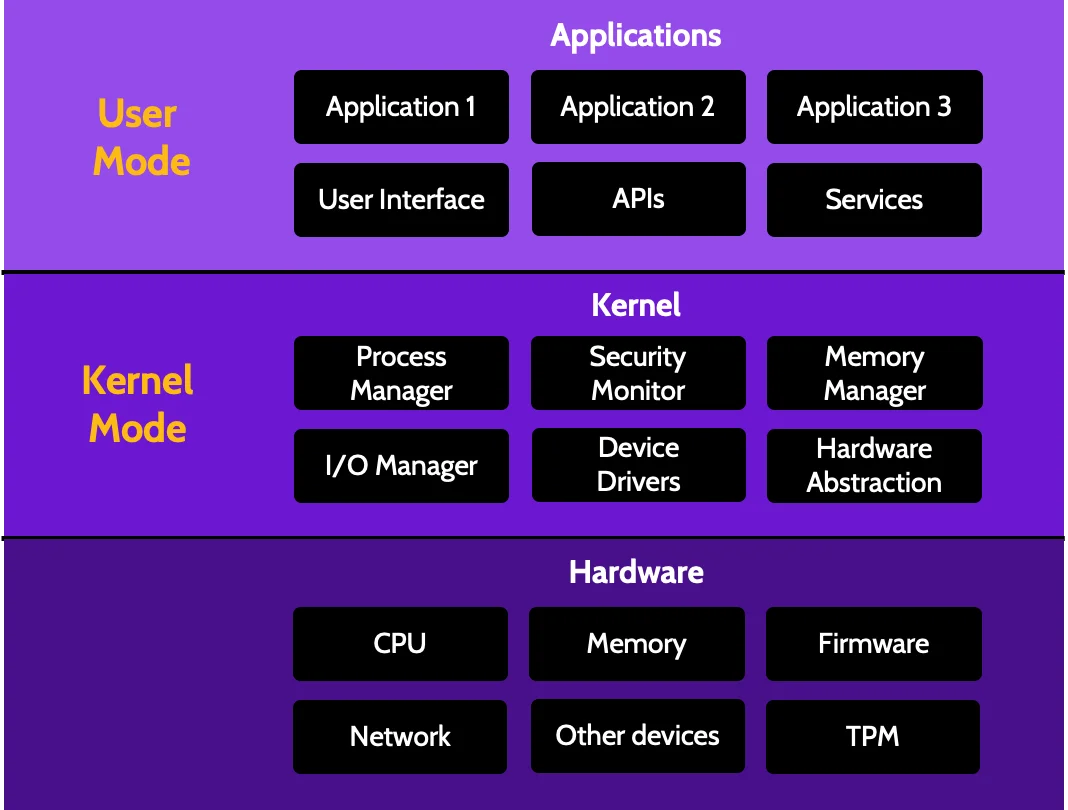

System kernel

The system kernel is the core of the operating system and has complete control over everything in the system. It has low-level control over all the fine details and operational components of the operating system. In essence, it has access to everything.

The system kernel and the security kernel are not the same thing. As noted, the system kernel drives the operating system. The security kernel is the implementation of the reference monitor concept.

From a security perspective, it's critical to protect the system kernel and ensure that it is operating correctly, and privilege levels aid in this regard.

Privilege levels

Privilege levels establish operational trust boundaries for software running on a computer. Subjects of higher trust (e.g., the system kernel) can access more system capabilities and operate in kernel mode. Subjects with lower trust (most applications running on a computer) can only access a smaller portion of system capabilities and operate in user mode.

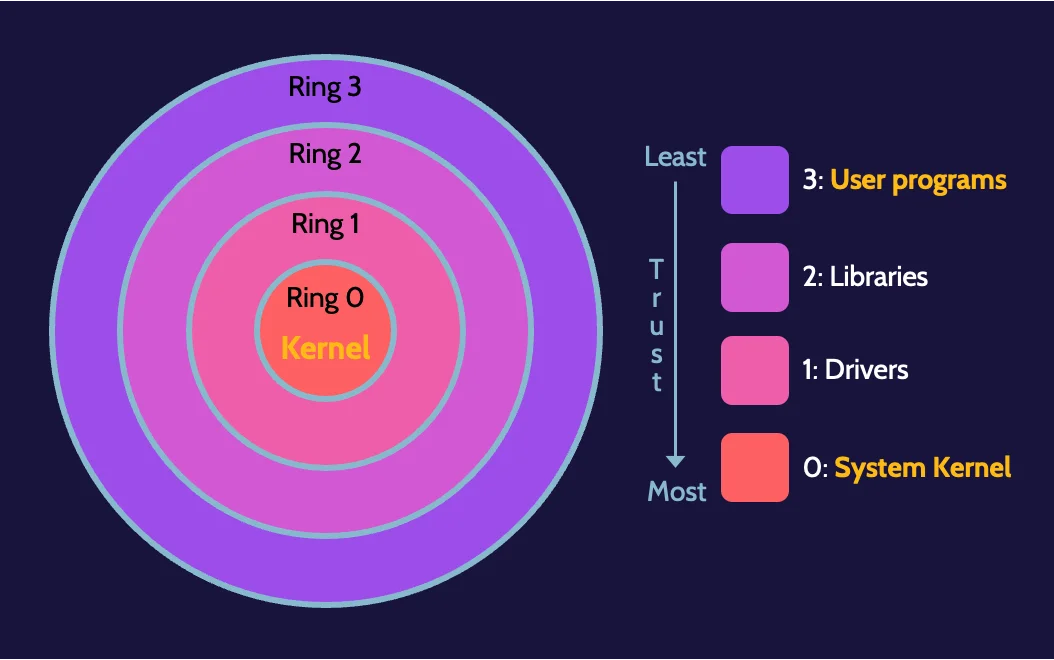

Ring protection model

The ring protection model is a form of conceptual layering that segregates and protects operational domains from each other. Ring 0 is the most trusted and, therefore, the most secure ring. Firmware and other critical system-related processes run in Ring 0. Ring 3 (user programs and applications), on the other hand, is the least trusted ring and has the least access, which protects the kernel from unwanted side effects like malware infecting the machine.

Firmware

Firmware is software that provides low-level control of hardware systems; it's the code that boots up hardware and brings it online. One of the challenges with firmware is that it is no longer hard-coded; therefore, it can be updated and modified, which makes it vulnerable to attacks. Changeable, updateable, or modifiable firmware means that the hardware itself is now vulnerable to attacks.

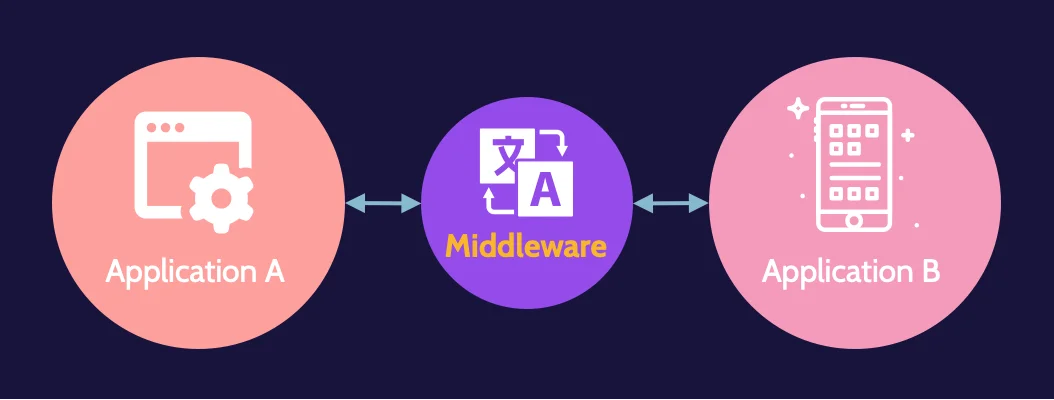

Middleware

Middleware is an intermediary: a layer of software that acts as glue, enabling interoperability between otherwise incompatible applications. In other words, middleware speaks two languages and can thereby enable communication between two completely different systems that otherwise could not communicate with each other.

Abstraction and virtualization

Abstraction

Abstraction is a concept that is used extensively in computing. It is the idea that the underlying complexity and details of a system are hidden from whoever uses it. Driving a car is an everyday example: the driver uses the steering wheel and pedals without needing to understand how the engine works.

Abstraction is also used in programming. CPUs, at their core, understand 1s and 0s. From a human perspective, however, 1s and 0s are very hard to understand, and over the years, numerous iterations of programming languages have evolved and abstracted the complexity of computing to a human-readable form.

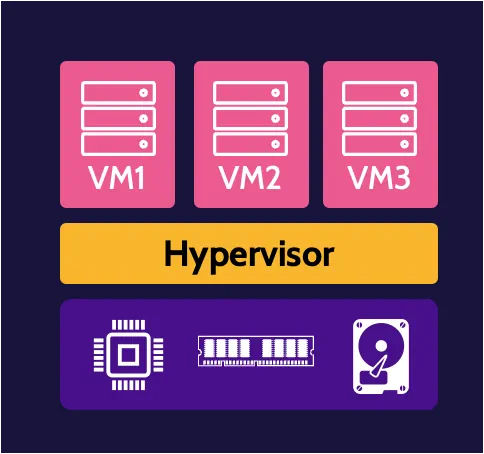

Virtualization

Carrying the concept of abstraction further, virtualization is the process of creating a virtual version of something to abstract away from the true underlying hardware or software. Specifically, to facilitate virtualization, a hypervisor is employed. A hypervisor serves as a layer of abstraction between underlying physical hardware and virtual machines (VMs).

Layering/Defense-in-depth

Another important concept is the concept of layered defense or defense-in-depth. What this simply means is the protection of a valuable asset should never rely on just one control. If that control fails, the asset would be unprotected. Instead, multiple control layers should be implemented, and the control at each layer should be a complete control—a combination of preventive, detective, and corrective controls.

Trusted platform modules (TPM)

A trusted platform module (TPM) is a piece of hardware that implements an ISO standard, resulting in the ability to establish trust involving security and privacy. In other words, a TPM is a chip that performs cryptographic operations like key generation and storage, in addition to supporting platform integrity checks.

For example, when a machine boots, the TPM can be used to identify if there has been any tampering with critical system components, in which case the system wouldn't boot. So, a TPM is a piece of hardware—usually installed on the motherboard—that incorporates the international standard denoted by ISO/IEC 11889 on computing devices, like desktop and laptop computers, and mobile devices, among others.

In many ways, a TPM is a black box, meaning that commands can be sent to the TPM, but the information stored within the TPM cannot be extracted.

Computers that contain a TPM can create cryptographic keys and encrypt them—using the endorsement key—so only the TPM can be used for decryption. This process is known as binding.

Computers can also create a bound key that is also associated with certain computer configuration settings and parameters. This key can only be unbound when the configuration settings and parameters match the values at the time the key was created. This process is known as sealing.
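
Sealing can be pictured with a small software simulation. The sketch assumes the measurement scheme commonly described for TPMs, where a platform configuration register (PCR) is "extended" by hashing its old value together with each new measurement. The `SoftTPM` class is a hypothetical stand-in; a real TPM performs these steps in hardware and never exposes the protected secret this way.

```python
import hashlib
import hmac


def extend(pcr: bytes, measurement: bytes) -> bytes:
    # PCR extend: new value = H(old value || H(measurement)).
    return hashlib.sha256(pcr + hashlib.sha256(measurement).digest()).digest()


def measure_boot(components) -> bytes:
    pcr = bytes(32)  # assumed starting value of all zeros
    for component in components:
        pcr = extend(pcr, component)
    return pcr


class SoftTPM:
    """Toy stand-in for a TPM, for illustration only."""

    def __init__(self):
        self._sealed = {}

    def seal(self, name: str, secret: bytes, pcr: bytes) -> None:
        # Remember the configuration that was in place at sealing time.
        self._sealed[name] = (secret, pcr)

    def unseal(self, name: str, current_pcr: bytes) -> bytes:
        secret, expected = self._sealed[name]
        if not hmac.compare_digest(expected, current_pcr):
            raise PermissionError("platform configuration has changed")
        return secret
```

If any measured component changes, the final PCR value differs and the secret stays sealed, which matches the tamper check described for the boot process.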

3.5 Assess and mitigate the vulnerabilities of security architectures, designs, and solution elements

Vulnerabilities in systems

Single point of failure

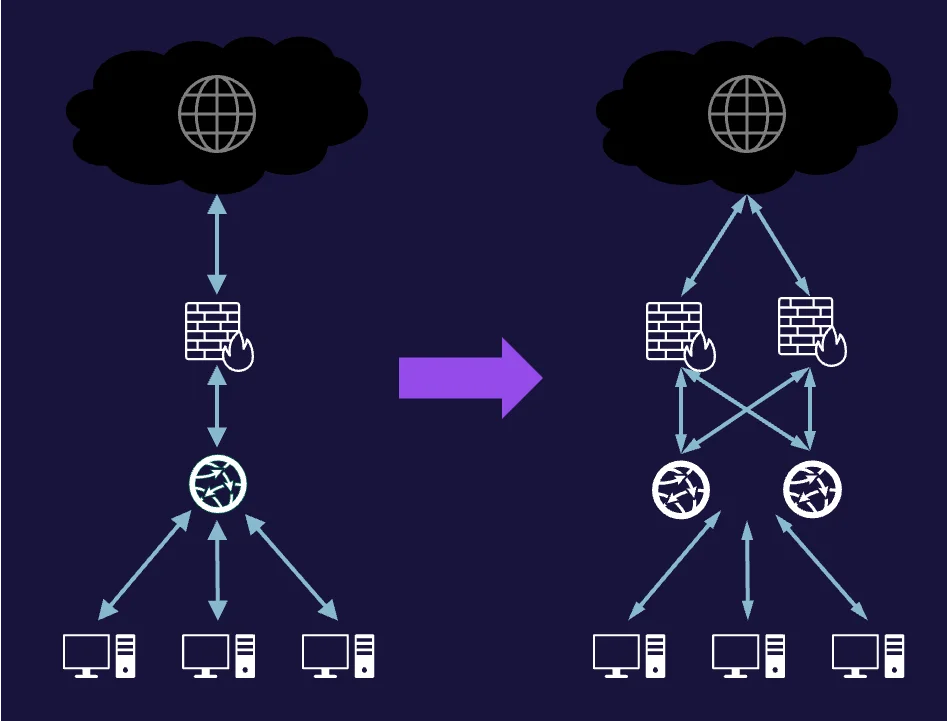

Let's answer the question of what a single point of failure means using a simple network diagram:

The cloud represents the internet. Below it, the brick wall with the flame represents a firewall. Next, the ball with arrows pointing in every direction is a router. Finally, below the router are several computer systems. What are the single points of failure in this diagram? In this example, the firewall and the router are each considered a single point of failure; if either device fails, the connection to the internet is broken. In other words, a single point of failure means that when a single device or connection fails, it impacts the entire architecture.

Reduce the risk of single point of failure

Single points of failure can become very dangerous for any organization and need to be dealt with accordingly, usually by implementing redundancy. Looking at the previous example, two firewalls and two routers could be installed to create redundancy and mitigate the risk of single points of failure. Each pair can be configured in what is known as "high availability" so that if firewall 1 fails, traffic can be rerouted through firewall 2; if router 1 fails, traffic can be rerouted through router 2.

Bypass controls

Bypass controls are a potential vulnerability or new source of risk, but they are intentional. Let's examine this concept through an example: You need to access the administrative settings of your home router, but for some reason, you can't remember the password you set up the last time you did this. Being able to perform a factory reset of that device would allow you to enter the configuration utility with default credentials and set up the device from scratch.

Reduce the risk of bypass controls

Bypass controls are needed, and other compensating controls should always be implemented with them to mitigate or prevent their exploitation.

Ways to mitigate the risk associated with bypass controls include:

- Segregation of duties

- Logging and monitoring

- Physical security

TOCTOU or race condition

TOCTOU stands for Time-of-Check Time-of-Use and essentially represents the short window of time between when authorization or access for something is checked and when that thing is actually used. In that short time period, something unintended or malicious can transpire, such as the checked resource being changed or swapped. This is also sometimes known as a race condition.

Reduce the risk of race conditions

To mitigate the risk of race conditions, the frequency of access checks should increase. The more frequent the checks, the greater the frequency of re-authentication, thus reducing the overall risk.
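
A file-system example makes the window concrete. The first function below checks and then uses, leaving a gap between the two steps. The second shows a complementary technique that is different from increasing check frequency: it closes the gap by making the check and the use a single atomic operation. The function names and the demo are illustrative assumptions.

```python
import os
import tempfile


def create_report_racy(path, data):
    # Time of check: the file does not exist yet...
    if os.path.exists(path):
        raise FileExistsError(path)
    # ...time of use: another process may have created `path`
    # (or planted a link there) in between.
    with open(path, "w") as f:
        f.write(data)


def create_report_atomic(path, data):
    # O_CREAT | O_EXCL makes "does it exist?" and "create it" one step.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_EXCL, 0o600)
    with os.fdopen(fd, "w") as f:
        f.write(data)


def demo():
    with tempfile.TemporaryDirectory() as directory:
        path = os.path.join(directory, "report.txt")
        create_report_atomic(path, "first")
        try:
            create_report_atomic(path, "second")
            second_blocked = False
        except FileExistsError:
            second_blocked = True
        with open(path) as f:
            return f.read(), second_blocked
```

Where an atomic operation is not available, re-checking authorization as close to the point of use as possible follows the same idea of shrinking the window.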

Emanations

Emanations represent a valid security concern since any time a device is emanating, valuable data could be available that a properly equipped eavesdropper or system could collect.

Various ways exist to protect from emanation, such as:

| Countermeasure | Description |
|---|---|
| Shielding (TEMPEST) | Proper walls, Faraday cage use, copper-lined envelopes that prevent sensitive information from leaking out or being intercepted; TEMPEST is a specific technology that prevents emanations from a device |
| White noise | Strong signal of random noise emanated where sensitive information is being processed |
| Control zones | Preventing access or proximity to locations where sensitive information is being processed |

Hardening

Hardening is the process of looking at individual components of a system and then securing each component to reduce the overall vulnerability of the system.

Vulnerabilities in systems

To protect all devices, they need to be broken down into components, and each component would need to be secured. Each component within a given device is secured based on value.

Organizational relevance is another term you need to be familiar with, and it indicates how valuable something is to the organization. This is a term you could possibly see on the exam, and you need to remember that it implies value.

Reduce risk in client and server-based systems

Examples of hardening include doing things like disabling unnecessary services on a computer system or uninstalling software that shouldn't be there (like an SFTP server running on a user's endpoint). A service represents a small subset of code running on a system for a particular reason.

Other ways to harden systems include:

- Installation of antivirus software

- Installation of host-based IDS/IPS and firewall

- Performing device configuration reviews

- Implementation of full-disk encryption

- Enforcement of strong passwords

- Obtaining routine system backups

- Implementation of sufficient logging and monitoring

The most important question to ask is, "What is this system meant to do?" That will guide the hardening effort. If a system is supposed to act as a web server, then it shouldn't have fifty different ports open and services installed, as that heavily increases an attacker's chances of breaching it. Each time a system is deployed, a hardening procedure should be followed, and after each hardening process, the resulting configuration should be verified to confirm the system is working as expected.
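The "What is this system meant to do?" question can be turned into a simple verification step. The sketch below is only an illustration: the role names and the port baseline are assumptions made up for the example, and a real baseline would come from the organization's own hardening standard.

```python
# Hypothetical role-to-port baseline; real values come from a hardening standard.
ALLOWED_PORTS = {
    "web_server": {80, 443},
    "ssh_bastion": {22},
}


def unexpected_ports(role, open_ports):
    """Return open ports that the system's role does not justify, sorted."""
    allowed = ALLOWED_PORTS.get(role, set())
    return sorted(set(open_ports) - allowed)


findings = unexpected_ports("web_server", [22, 80, 443, 3306])
print(findings)  # [22, 3306]
```

Anything the function returns is a candidate for being closed or justified before the system is considered hardened.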

Risk in mobile systems

Reduce risk in mobile-based systems

Mobile device management (MDM) and mobile application management (MAM) solutions help organizations secure devices and the applications that run on them.

Mobile device management solutions should particularly focus on securing remote access using a VPN and end-point security, as well as securing applications on the device through application whitelisting.

MDM and MAM can be combined with policy enforcement, application of device encryption, and related policies to adequately protect mobile devices if they are lost or stolen.

- Policies. One of the best ways to reduce risk related to mobile devices is using policies like Acceptable Use, Personal Computers, BYOD/CYOD (Bring Your Own Device/Choose Your Own Device), and Education, Awareness, and Training.

- Process related to lost or stolen devices. Typically, this involves notification of IT and security personnel as well as a means by which the device can be remotely wiped.

- Remote access security. VPN and 2FA capabilities should be enabled by default to prevent a mobile device from being used to connect to a remote network in an insecure manner.

- Endpoint security. Antivirus/malware, DLP, and similar MDM-provisioned software should be installed on mobile devices just like standard computing equipment. Additionally, the concept of hardening should be employed to minimize the potential attack surface of the devices.

- Application whitelisting. Organizations should control which applications users may install on their mobile devices through application whitelisting and not allow them to install anything not present on the approved application list.

OWASP Mobile Top 10

The Open Web Application Security Project (OWASP) Foundation is an organization that is driven by community-led efforts dedicated to improving the security of software, including software and applications that run on mobile devices.

Among OWASP's many substantial contributions to the security community are the globally recognized OWASP Top 10 and OWASP Mobile Top 10 lists, which compile data from a variety of sources like security vendors and consultancies, bug bounties, and numerous organizations located around the world. The OWASP Mobile Top 10 (2016 edition) is listed here:

- M1. Improper Platform Usage

- M2. Insecure Data Storage

- M3. Insecure Communication

- M4. Insecure Authentication

- M5. Insufficient Cryptography

- M6. Insecure Authorization

- M7. Poor Client Code Quality

- M8. Code Tampering

- M9. Reverse Engineering

- M10. Extraneous Functionality

For each identified risk, specific details about it can be found, including threat agents, attack vectors, security weaknesses, technical impacts, and business impacts, as well as ways to prevent or mitigate the risk.

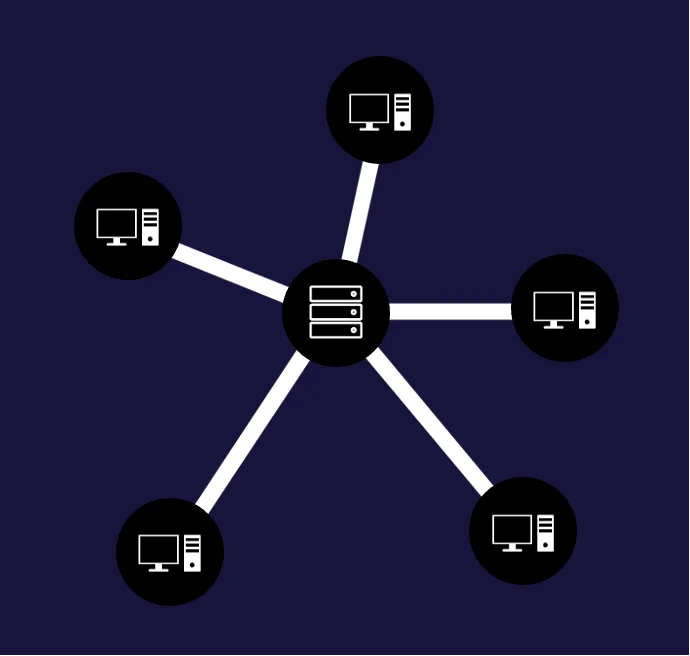

Distributed systems

Distributed systems are systems that are spread out and can communicate with each other across a network. The internet is a great example of a distributed system.

Although there is significant value in connecting the systems within an organization and then connecting the organization to the internet, there are also significant risks, such as providing a means for potential attackers to gain access to the corporate network and cause mayhem (data breaches, denial-of-service, ransomware, etc.).

Distributed file systems (DFS) take the concept of distributed systems a step further by allowing files to be hosted by multiple hosts and shared and accessed across a network. DFS software helps manage the files being hosted and presents them to users as if they're stored in one central location.

Grid computing

Grid computing is like distributed systems as it still relates to systems that are connected together, but the thinking behind grid systems is that they're usually connected via a very high-speed connection to serve a greater purpose than simply passing the occasional email or file back and forth.

What's the security risk with grid computing?

The security risks with grid computing are as follows:

- Data integrity

- Data validation

- Inappropriate use of the grid computer

Inference and aggregation

Data warehouse

The idea behind a data warehouse is to perform data analytics from a number of different data sets with the hope of identifying interesting bits of information. A common term related to data warehouses is data island, and it's used in the form of a question: "Where are the data islands located?" As this question alludes, the premise of a data warehouse is that all the data from these islands are brought together in one central location. Once in one location, the data is much easier to analyze.

What are the security risks related to a data warehouse?

A data warehouse could be a single point of failure for a couple of reasons. The first relates to availability: if the warehouse goes down, all of the centralized data becomes unavailable. The second relates to the fact that if someone gains unauthorized access to the data warehouse, they could have access to significant amounts of valuable information.

Big data

Data from many different locations are brought into a central repository to be analyzed. On the surface, this sounds very similar to a data warehouse. What's the difference? Three things:

- Variety means that data can be pulled from a number of different sources. In big data, just about anything can be stored, meaning it represents the variety that can be found within a big data repository.

- Volume refers to the size of the data sets. With a data warehouse, storage is typically restricted to the storage capacity of a single system; with big data, storage spans multiple systems.

- Velocity refers to the fact that data can be ingested and analyzed very rapidly in big data—even faster than is possible with data warehouses.

Examples of big data tools include Hadoop, MongoDB, and Tableau.

Data mining and analytics

The primary driver behind data warehouses and big data is the desire to identify trends and other interesting insights. Through the analysis of seemingly disparate data, otherwise invisible relationships and little nuggets of valuable information can be gleaned. These insights are typically referred to as inference and aggregation.

Aggregation | Inference |

|---|---|

Collecting, gathering, or combining data for the purpose of statistical analysis | Deducing information from evidence and reasoning rather than from explicit statements |

Reduce the risk of inference and aggregation

Inference, especially unauthorized inference, can create a significant risk to an organization. One method to reduce the risk of unauthorized inference is using polyinstantiation, which allows information to exist at different classification levels for the purpose of preventing unauthorized inference and aggregation.
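A small sketch can make polyinstantiation more concrete. Everything here is hypothetical (the shipment record, the two classification levels, and the lookup function); the point is only that the same key exists at more than one level, so a lower-cleared reader sees a plausible answer rather than a gap that hints at hidden data.

```python
# Hypothetical records: the same key exists at two classification levels.
LEVELS = {"unclassified": 0, "secret": 1}

SHIPMENTS = {
    ("ship-42", "unclassified"): "Cargo: food supplies",
    ("ship-42", "secret"): "Cargo: weapons",
}


def lookup(key, clearance):
    """Return the highest-classified instance the reader is cleared to see."""
    visible = [
        (LEVELS[level], value)
        for (record_key, level), value in SHIPMENTS.items()
        if record_key == key and LEVELS[level] <= LEVELS[clearance]
    ]
    return max(visible)[1] if visible else None


print(lookup("ship-42", "unclassified"))  # Cargo: food supplies
print(lookup("ship-42", "secret"))        # Cargo: weapons
```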

Industrial Control Systems (ICS)

Industrial control system (ICS) is a general term used to describe control systems related to industrial processes and critical infrastructure.

Reduce risk in industrial control systems

One of the best ways to protect ICS is keeping them offline, also known as air gapping or creating an air gap. What this simply means is that ICS devices can communicate with each other, but the ICS network is not connected to the internet or even the corporate network in any way. So, even if someone does try to connect to these ICS systems from the internet or corporate network, they'll be unable to do so.

The three primary types of ICSs are Supervisory Control and Data Acquisition (SCADA), Distributed Control System (DCS), and Programmable Logic Controller (PLC).

Patching industrial control systems

Strong configuration management processes, good patch management and backup/archive plans, and so on should be in place and used when and where possible. When patching ICS systems is not possible, additional mitigating steps can be taken to reduce the risk and impact of disruption of critical infrastructure:

- Implement nonstop logging and monitoring and anomaly detection systems.

- Conduct regular vulnerability assessments of ICS networks, with a particular focus on connections to the internet or direct connections to internet-connected systems, rogue devices, and plaintext authentication.

- Use VLANs and zoning techniques to mitigate or prevent an attacker from pivoting to other neighboring systems if the ICS is breached.

Internet of Things (IoT)

The Internet of Things (IoT) refers to all the devices, like home appliances, that are connected to the internet. IoT devices, by their nature, are risky. Reducing their risk involves making different purchase decisions, taking every precaution when installing, and keeping the technology up to date.

Cloud service and deployment models

Cloud computing

A cloud can be a private, public, or hybrid model. It can also allow greater or smaller control to fall on the client or the cloud service provider. It all depends on what the goals are. So many options and variations exist. Some of the most common characteristics of a cloud provider are:

- On-demand self-service

- Broad network access

- Resource pooling

- Rapid elasticity and scalability

- Measured service

- Multitenancy

Cloud service models

There are three primary service models used in the cloud:

- Software as a Service (SaaS) provides access to an application that is rented—usually via a monthly/annual, subscriber-based fee—and the application is typically web-based.

- Infrastructure as a Service (IaaS) is an environment where customers can deploy virtualized infrastructure servers, appliances, storage, and networking components.

- Platform as a Service (PaaS) is a platform that provides the services and functionality for customers to develop and deploy applications.

Two additional cloud service models that are now pervasively used are:

- Containers as a Service (CaaS). It allows for multiple programming language stacks, like Ruby on Rails or Node.js, to name a couple, to be deployed in one container.

- Function as a Service (FaaS). It describes serverless and the use of microservices to accomplish business goals inexpensively and quickly.

Significant responsibility is still often shared between the cloud service provider and the cloud customer. The cloud customer is always accountable for their data and other assets existing in a cloud environment.

Cloud deployment models

Several cloud deployment models exist, and these refer to how the cloud is deployed, what hardware it runs on, and where the hardware is located. Most of the deployment models are intuitive and easy to understand.

Deployment model | Infrastructure managed by | Infrastructure owned by | Infrastructure located | Accessible by |

|---|---|---|---|---|

Public | Third-party provider | Third-party provider | Off-premises | Everyone (untrusted) |

Private/Community | Organization | Organization | On-premises | Trusted |

Hybrid | Both organization and third-party provider | Both organization and third-party provider | Both on-premises and off-premises | Both trusted and untrusted |

Protection and privacy of data in the cloud

In addition to implementing strong access controls, strong encryption practices should be used when and where necessary to properly secure this data. This is especially true when an organization makes the initial decision to move from legacy, on-premises infrastructure to that of a cloud provider. In cases like this, best practices indicate that data should be encrypted and secured locally and then migrated to the cloud.

Cloud computing roles

Multiple computing roles relate to cloud computing: cloud consumer, cloud provider, cloud partner, and cloud broker.

Cloud Service Consumer | Individual or organization that is accessing cloud services |

Cloud Service Provider | The organization that is providing cloud services/resources to consumers |

Cloud Service Partner | The organization that supports either the cloud provider or customer (e.g., cloud auditor or cloud service broker) |

Broker | Carrier, Architect, Administrator, Developer, Operator, Services Manager, Reseller, Data Subject, Owner, Controller, Processor, Steward |

Accountability versus responsibility

Accountability can never be delegated, outsourced, or passed down; it always remains with the owner.

Responsibility, on the other hand, can be outsourced, and this often happens to a great extent when working with a cloud service provider.

Accountability | Responsibility |

|---|---|

Where the buck stops | The doer |

Have ultimate ownership, answerability, blameworthiness, and liability | In charge of task or event |

Only one entity can be accountable | Multiple entities can be responsible |

Sets rules and policies | Develops plans, makes things happen |

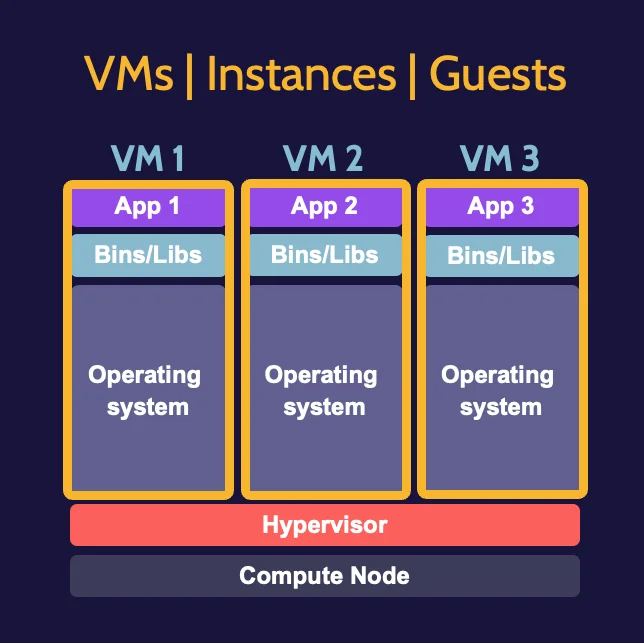

Compute in the cloud: hypervisors, virtual machines (VMs), containers, serverless

A hypervisor, also known as a virtual machine manager/monitor (VMM), is software that allows multiple operating systems to share the resources of a single physical machine. On the other hand, a virtual machine (VM) resembles a computer, but everything is emulated using software.

One of the characteristics of cloud computing is resource pooling, which describes the relationship between the fundamental hardware that makes up the compute, storage, and network resources and the multiple customers that utilize those resources. Cloud customers can access compute resources through:

- Virtual Machines (VM)

- Containers

- Serverless

A useful capability of virtual machines is the ability to create a baseline image of a virtual machine. An image is essentially a pre-built virtual machine ready to be deployed. Once an image has been created it is easy to spin up numerous virtual machines from the pre-built image.

Compromising the hypervisor would give an attacker access to the multiple virtual machines it controls, so considerable hardening should be enforced to avoid that.

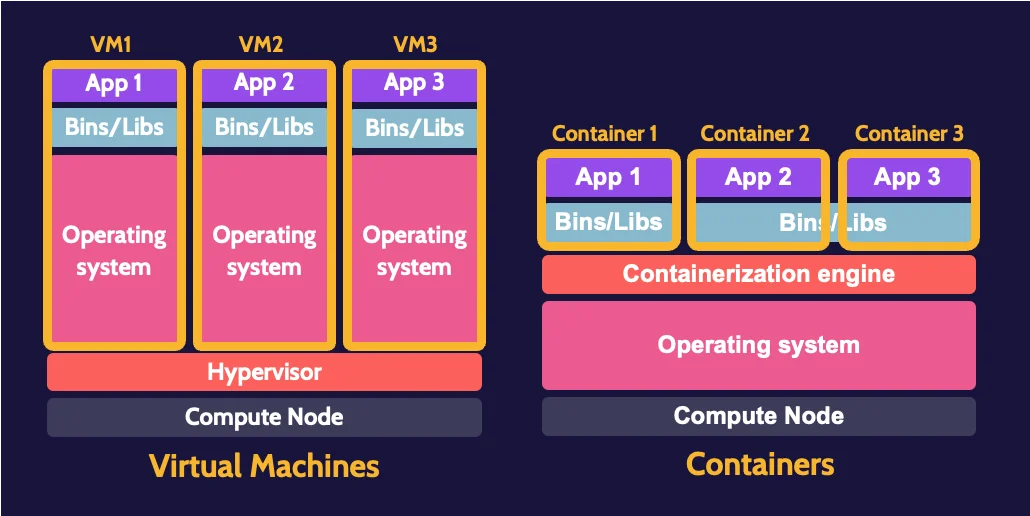

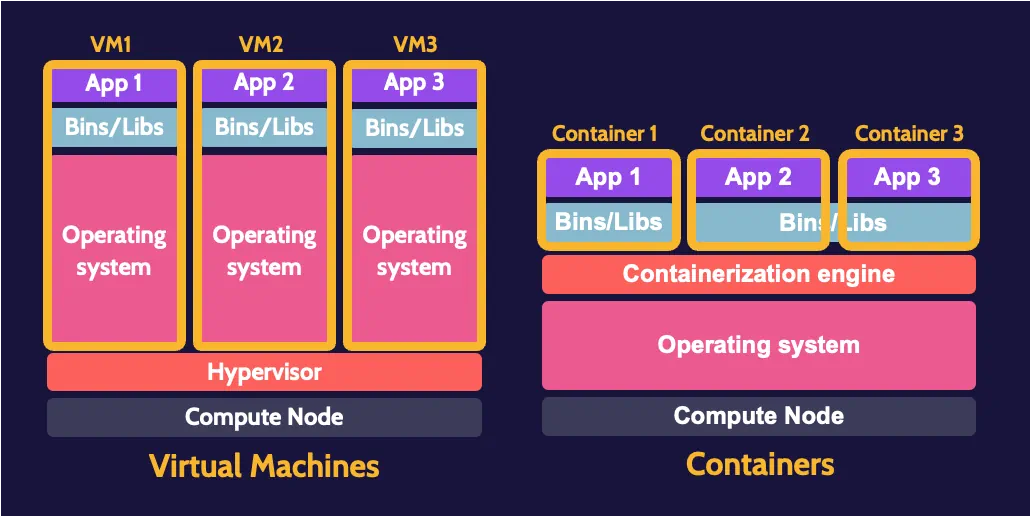

Virtual machines versus containers

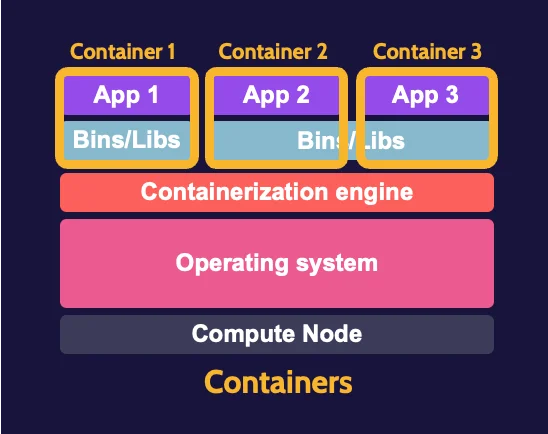

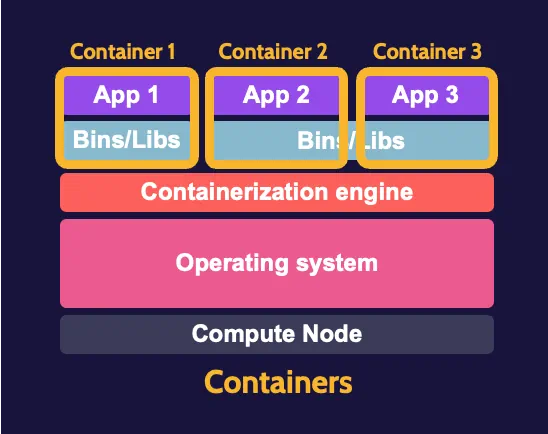

Virtual machines are quite "heavy" relative to containers. For every virtual machine being created, an accompanying operating system must also be installed. The hypervisor acts as the layer of abstraction that manages all the underlying physical infrastructure on behalf of each virtual machine. Containers, in comparison, are quite lightweight relative to virtual machines.

Containers

Containers are highly portable, self-contained applications. An abstraction layer known as the containerization engine shares and leverages the resources of the host operating system on behalf of each container, so multiple containers can exist and operate in the context of one operating system.

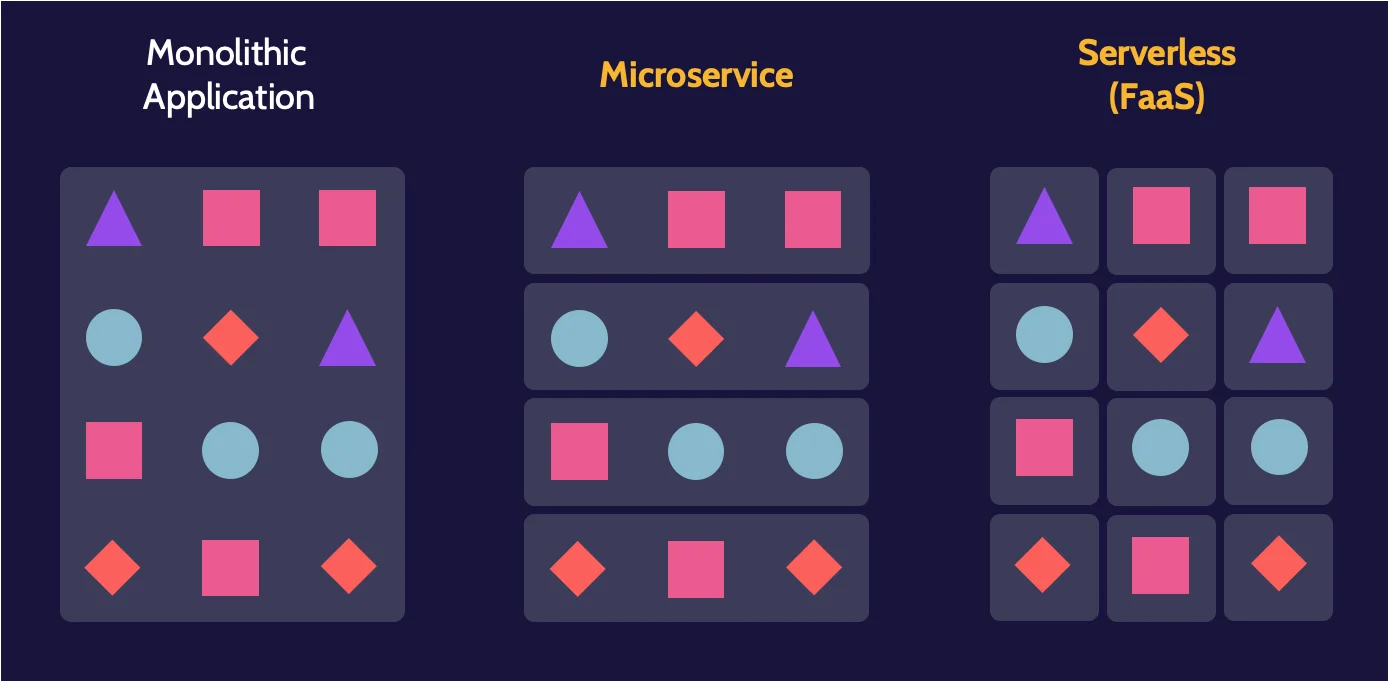

Dividing up services

All functionality of a monolithic application is wrapped together as a single unit, whereas with microservices and serverless, functionality is more defined and self-contained in smaller or individual units.

Microservices

A great way to understand a microservice is to imagine one piece of functionality found in a monolithic application operating as a stand-alone service or a small set of related services. Compared to a monolithic application that exists and operates as one unit, microservices exist and function as separate units that are loosely coupled via API calls. Because each part of the application's functionality operates as a separate service, many of the disadvantages inherent in a monolithic application are mitigated.

Cloud forensics

Cloud forensics focuses on the forensic process in cloud computing environments, which is typically more complex than on-premises forensic investigations. Virtual disks and VM images are often analyzed as part of cloud forensics.

In essence, a snapshot is a backup of the disk or the machine. As with other types of digital evidence, two bit-for-bit copies of a snapshot should be created for purposes of forensic analysis, with the original snapshot and one copy being essentially locked up and untouched and only the second copy actually being examined.

The following table shows the type of forensic evidence that can be acquired based on the cloud model being used:

SaaS |

|

PaaS |

|

IaaS |

|

Cloud identities

Identity and Access Management (IAM) in any context can be challenging, especially so in the cloud. Security-related access control principles, like separation of duties, least privilege, and need to know still apply, therefore requiring streamlined and efficient IAM solutions.

For many years, most organizations have used on-premise IAM solutions such as Microsoft Active Directory (AD) and Lightweight Directory Access Protocol (LDAP).

These traditional solutions served organizations well for years, but as cloud-based applications and the need to use them grew, so too did the pressure (and related challenges) to extend on-premise IAM capabilities outward. To resolve these challenges, Identity as a Service (IDaaS) solutions began to emerge.

The following identity technologies can be used and are summarized here:

SPML | SPML is a deprecated XML-based, Organization for the Advancement of Structured Information Standards (OASIS) standard that was developed to allow cooperating users, resource owners, and service providers—the federation—to exchange information seamlessly for purposes of provisioning. |

SAML | SAML is an XML-based, OASIS standard that utilizes security tokens that contain assertions about a user. SAML facilitates service requests made by users to service providers in the form of requests to identity providers. |

OAuth | OAuth (Authorization) is a Federated Identity Management (FIM) open-standard protocol that typically works in conjunction with OpenID (authentication). |

Cloud migration

Cloud migration involves benefits and risks that should be carefully considered. Benefits of making such a move include shifting costs from a capital expenditure (CapEx) model, where networking and computer equipment is owned by the organization, to an operating expenditure (OpEx) model, where those resources are paid for as they are used.

One of the most important considerations of cloud migration relates to vendor lock-in—the notion that once migrated, an organization is "stuck" with the cloud service provider and will be unable to move elsewhere. The possibility of this taking place requires an organization to perform significant due diligence and analyze its needs in relation to what the cloud service provider is offering.

In addition, security in the cloud should be understood thoroughly, and organizations should work closely with the cloud service provider to implement security that follows best practices.

XSS and CSRF

Web-based applications are used as a conduit between a client (e.g., a user's browser on their local machine) and an underlying information source (like a SQL database).

They are becoming extremely pervasive, not only because of the growth of the cloud but simply because this is how most organizations now deploy new systems in their environment.

With this in mind, let's explore a few major web-based vulnerabilities.

Cross-Site Scripting (XSS)

Cross-site scripting is seen most often in two forms:

- Stored/Persistent/Type I

- Reflected/Nonpersistent/Type II

Stored/Persistent/Type I XSS

The following figure illustrates what stored XSS looks like:

From an attacker's perspective, one of the great advantages of this attack is that it executes each time a victim visits the vulnerable webpage. It typically follows this sequence of steps:

- The attacker identifies a vulnerable website. The website must be vulnerable to XSS attacks for this to be successful. The attacker will typically use specific tools to examine underlying code related to pages on the website.

- The attacker injects malicious code into a page on the website. Having found a weakness in the previous step, the attacker can inject JavaScript into the website. This could be as simple as typing the code into a comment or a form field, with the result that the JavaScript is stored on the web server.

- When a victim visits the website, their browser downloads and executes the malicious code. With the JavaScript injected and stored on the website, every subsequent visitor's browser will access the webpage and execute the malicious code.

- When the victim's browser executes the JavaScript, information from the victim will be sent to the attacker. Until the malicious code is cleared from the website, many site visitors could be impacted, and a savvy attacker might be able to gain username/password, banking, or other sensitive information because of the JavaScript executing in the browser.

Attackers might use XSS for any of a number of malicious reasons. For example, the JavaScript code could be used to:

- Send a copy of the victim's browser cookies, including session cookies, which could lead to session hijacking and taking over a user's active session to connect to the destination resource.

- Disclose files on the victim's computer system.

- Install malicious applications.
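To see why stored input is dangerous, consider the following minimal sketch. It assumes a hypothetical comment feature backed by an in-memory list and contrasts rendering the stored text as-is with encoding it first using Python's standard `html.escape`. Output encoding is one common mitigation and is shown here only as an illustration, not as a complete defense.

```python
import html

comments = []  # stands in for the web server's comment store


def post_comment(text):
    comments.append(text)


def render_unsafe():
    """Vulnerable: stored input is placed into the page as-is."""
    return "".join(f"<p>{c}</p>" for c in comments)


def render_escaped():
    """Safer: special characters are encoded so the browser shows them as text."""
    return "".join(f"<p>{html.escape(c)}</p>" for c in comments)


post_comment("<script>alert('xss')</script>")
```

With the unsafe renderer, every visitor's browser would receive a live `<script>` element; with the escaped renderer, the same comment arrives as harmless text.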

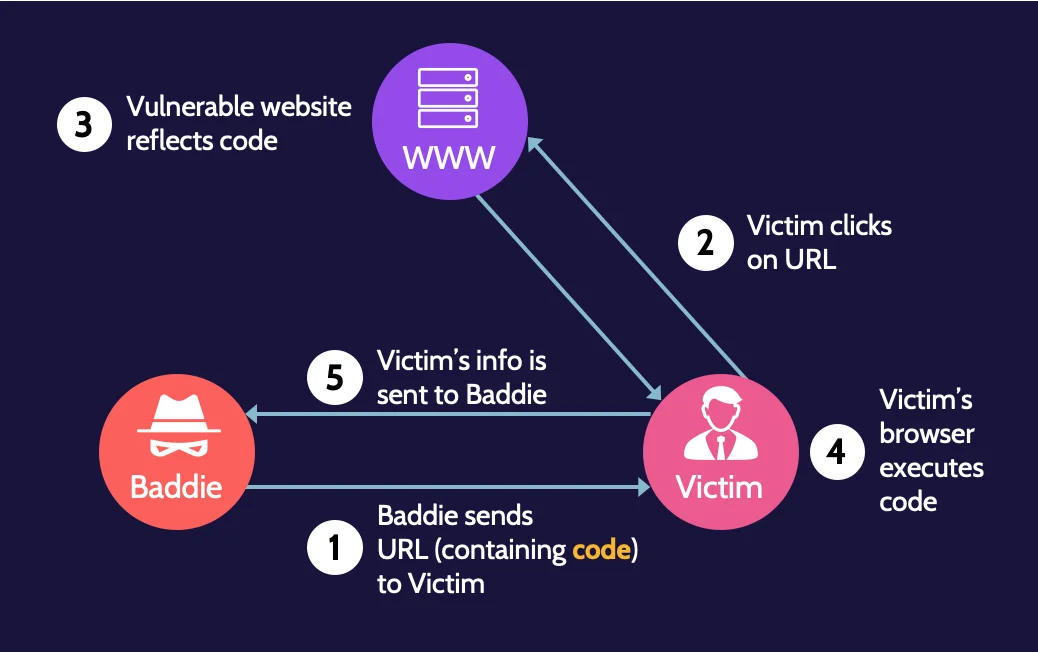

Reflected/Nonpersistent/Type II XSS

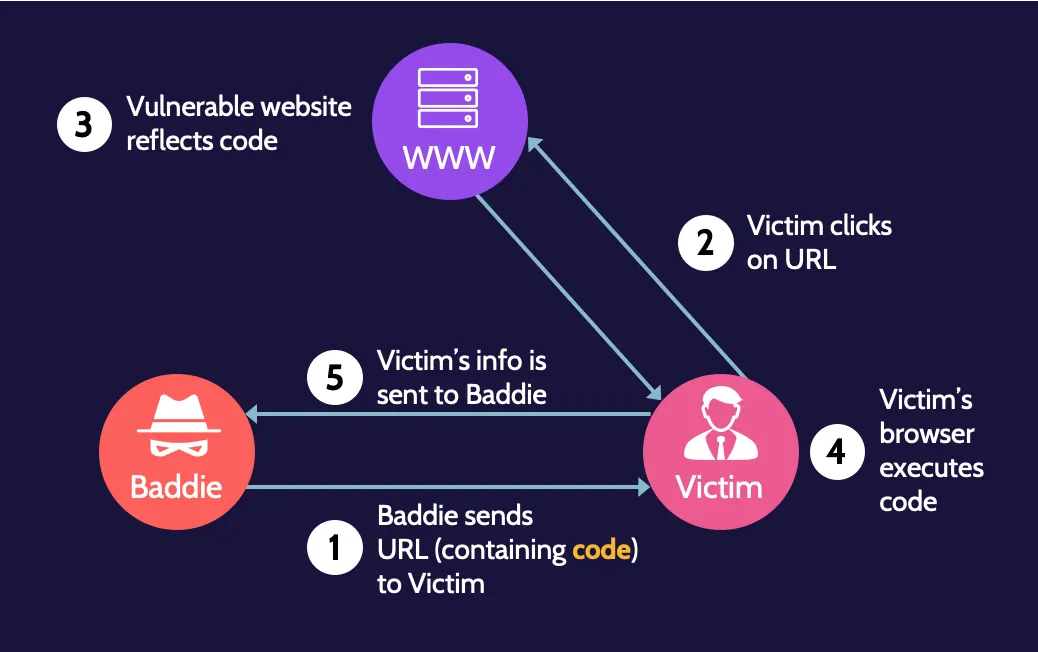

The following figure shows how reflected cross-site scripting works:

Essentially what is happening in the background is the user is clicking on a URL, which directs them to a website, which then reflects malicious code that was sent to the website back to the victim.

This can take place through the following sequence of steps:

- The attacker sends a malicious URL to a victim. The URL containing malicious JavaScript code is sent to the victim (commonly via a phishing email).

- The victim clicks on the URL. Because the URL looks legitimate (or through some other social engineering technique), the user clicks on the malicious link.

- The vulnerable website reflects malicious code to the victim. After the victim clicks the link, the website reflects the JavaScript code contained in the URL back to the victim's browser.

- The victim's browser executes the reflected code. Because the malicious code has been reflected back to the victim, their browser is going to execute the JavaScript code.

- JavaScript code executes (i.e., sensitive data pertaining to the victim is sent to the attacker). As a result of the JavaScript executing, sensitive data from the victim will be transmitted to the attacker (or any other malicious action the code entails is performed).

Reflected XSS is by far the most common form and often results when a link in a phishing email is clicked.

Cross-Site Request Forgery (CSRF)

The success of a cross-site request forgery attack is predicated upon the concept of "persistence" that cookies in browsers facilitate.

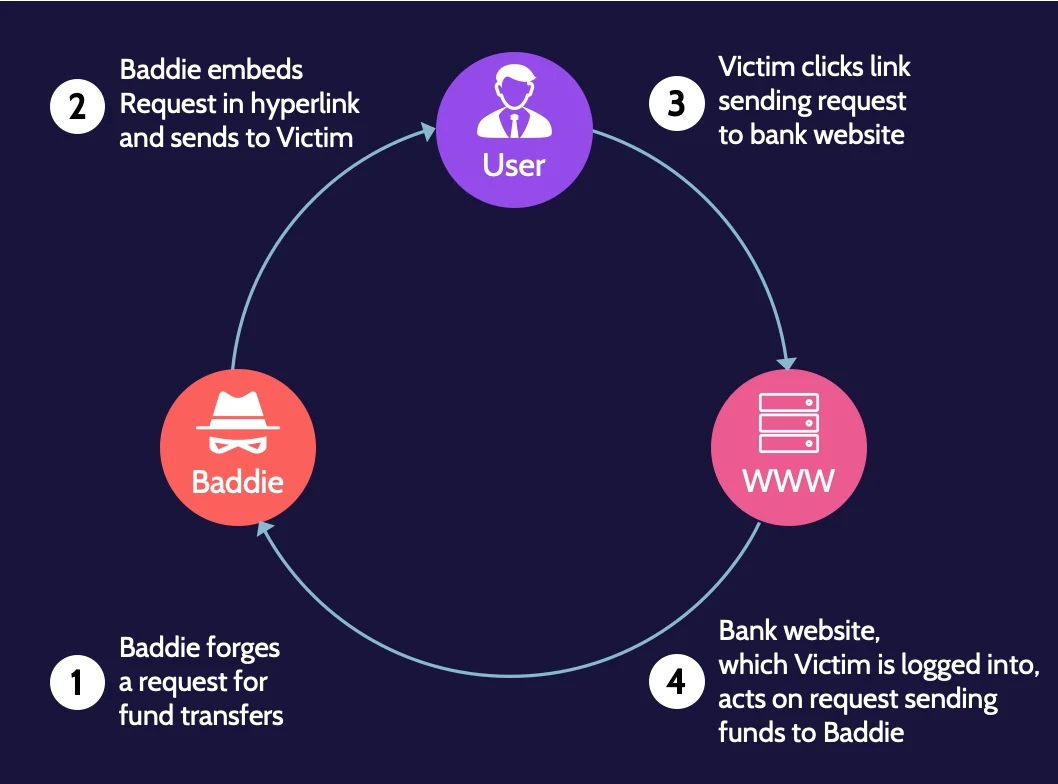

The attack is illustrated here and follows the steps below:

- The attacker forges a request, e.g., a funds transfer request. The attacker crafts a forged request to make it look legitimate, so the victim is fooled into executing it.

- The attacker embeds a forged request in a hyperlink and sends the URL to the victim (e.g., via a phishing email). The forged request could be sent via email, SMS, or another way that appears valid to the victim.

- The victim clicks the link, sending the request to a legitimate entity, like their bank. Through social engineering or other means, the attacker can entice the victim into clicking on the link.

- The legitimate entity, into which the victim is logged in, acts on the request as crafted by the attacker. Because the victim is already logged into the legitimate entity's online portal (e.g., their bank), the funds transfer request appears valid. As a result, a malicious action can be taken, like transferring funds to the attacker's account, as the bank's web server considers this a legitimate action that is performed by the victim.

The target of the attack is always the server.
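Because the server cannot tell a forged request from a genuine one by the session cookie alone, a common countermeasure is to require a second, unpredictable value that only pages served by the legitimate site contain. The following is a minimal sketch of that idea; the in-memory store and function names are hypothetical, and real web frameworks provide their own anti-CSRF mechanisms.

```python
import hmac
import secrets

session_tokens = {}  # hypothetical server-side store: session ID -> CSRF token


def issue_token(session_id):
    """Create an unpredictable token to embed in forms served to this session."""
    token = secrets.token_urlsafe(32)
    session_tokens[session_id] = token
    return token


def is_request_allowed(session_id, submitted_token):
    """Act on a state-changing request only if it carries the session's token."""
    expected = session_tokens.get(session_id)
    if expected is None or submitted_token is None:
        return False
    return hmac.compare_digest(expected, submitted_token)
```

A link crafted by an attacker would ride on the victim's session cookie but would not contain the token, so the request would be rejected.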

XSS versus CSRF

With XSS, the target of the attack is the user's browser; with CSRF, the target of the attack is the web server.

SQL Injection

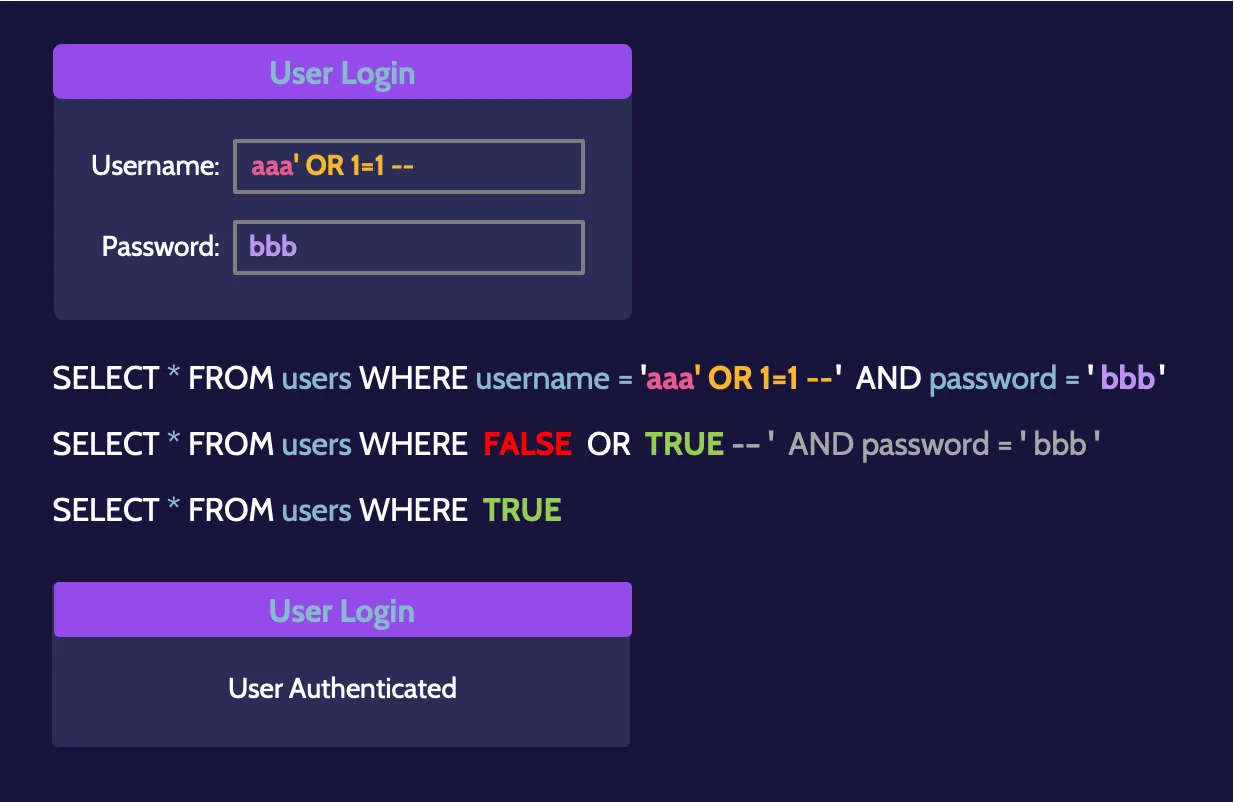

Structured Query Language (SQL) is the language used for communicating with databases. SQL Injection is a method of attack that utilizes SQL commands and can be used for modification, corruption, insertion, or deletion of data in a database.

A simple login screen is used so that when a person enters their username and password, the database will be queried for the corresponding information, and if it is valid, the user should be authenticated. Using SQL Injection, however, neither a correct nor incorrect username is entered into the "Username" field; rather, a bit of SQL code is entered, as shown in the following figure.

In essence, the interpreter evaluates a logical OR, which is true if either of its conditions is true. The username aaa doesn't exist (resulting in a false state); however, 1 always equals 1, so that condition returns a true state and the query as a whole succeeds.

Unvalidated data should never be passed directly from a web server to a database server. In other words, user input should always be validated, sanitized, or otherwise made to conform to expected formatting standards.

Additionally, the use of prepared statements/parameterized queries and stored procedures can also help protect against SQL Injection attacks.
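The difference between concatenating input into a query and binding it as a parameter can be demonstrated with Python's built-in `sqlite3` module. This is a simplified sketch: the table layout, the sample user, and the function names are assumptions for illustration, and the plaintext password is there only to keep the example short.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (username TEXT, password TEXT)")
# Plaintext password used purely for illustration.
conn.execute("INSERT INTO users VALUES ('rob', 'Pass123')")


def login_vulnerable(username, password):
    """Builds the query by string concatenation, so input can change its logic."""
    query = (
        "SELECT COUNT(*) FROM users "
        f"WHERE username = '{username}' AND password = '{password}'"
    )
    return conn.execute(query).fetchone()[0] > 0


def login_parameterized(username, password):
    """Passes input as bound parameters, so it is always treated as data."""
    query = "SELECT COUNT(*) FROM users WHERE username = ? AND password = ?"
    return conn.execute(query, (username, password)).fetchone()[0] > 0


payload = "aaa' OR 1=1 --"
print(login_vulnerable(payload, "anything"))     # True
print(login_parameterized(payload, "anything"))  # False
```

In the vulnerable version, the injected `OR 1=1` makes the condition true for every row and the trailing `--` comments out the password check. In the parameterized version, the whole payload is compared as a literal username, which matches nothing.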

SQL commands

The SQL commands shown in the table below do not need to be memorized. These are just examples of some of the most common commands.

DDL | DML | DCL | TCL |

|---|---|---|---|

CREATE | SELECT | GRANT | COMMIT |

ALTER | INSERT | REVOKE | ROLLBACK |

DROP | UPDATE | | SAVEPOINT |

TRUNCATE | DELETE | | |

RENAME | MERGE | | |

| LOCK TABLE | | |

SQL code examples

Again, the aim here is to recognize SQL code. Note that none of these code snippets would be used for SQL injection.

SELECT * FROM users; | This command would return all the data stored in the "users" table. |

INSERT INTO users (userID, password) VALUES ('rob', 'Pass123'); | This command would insert a new record in the "users" table. |

DROP TABLE accountsReceivable; | This command essentially works like "delete" and results in deleting the table named "accountsReceivable" from the database. Let's hope a working copy of the database backup is handy, or else it may become very interesting for the database administrator. |

Input validation

A lack of input validation can lead to numerous web application vulnerabilities being exploited.

Server-side input validation (checking the contents of input fields on the server) is one of the best ways to prevent XSS and SQL Injection attacks from succeeding. By validating data in an input field on the server side and only allowing data that meets input requirements, the SQL code and commands used in injection attacks can be prevented from running.

In addition to standard input validation, which involves clearing input of invalid codes, characters, and commands, another form known as whitelist validation is often used. Allow list (Whitelist) input validation only allows acceptable input that consists of very well-defined characteristics, e.g., numbers, characters, or both, size, or length, to name a few formats and standards.

Contrary to server-side input validation, client-side input validation—because it's done on the client side—may be bypassed and effectively rendered useless.
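Allow list validation on the server side can be as simple as matching the entire input against a tightly defined pattern. The rule below (3 to 20 letters, digits, or underscores) is a hypothetical requirement chosen for the example; each field in a real application would need its own definition.

```python
import re

# Hypothetical rule: usernames are 3-20 letters, digits, or underscores.
USERNAME_PATTERN = re.compile(r"[A-Za-z0-9_]{3,20}")


def is_valid_username(value):
    """Accept input only if the whole string matches the allow list pattern."""
    return isinstance(value, str) and USERNAME_PATTERN.fullmatch(value) is not None
```

Because the pattern describes what is allowed rather than what is forbidden, input such as `aaa' OR 1=1 --` or `<script>` is rejected without the code needing to know anything about SQL or JavaScript.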

Lack of input validation

The more tightly controlled and managed the input, the more secure the application and environment. On the other end of the spectrum, no input validation can lead to serious negative consequences, as numerous web application attacks may be possible.

Reduce the risk of web-based vulnerabilities

In its simplest form, the goal of hardening is to reduce the potential attack surface of a system; in other words, to reduce risk. The hardening process could involve one or a combination of the following:

- Utilization of a manufacturer's product guide, specifically portions related to security or hardening

- Best practice guidance specific to the organization's industry

- Information gleaned from online sources

3.6 Apply cryptography

The history of cryptography spans approximately four thousand years. Regardless of the encryption method or tool, the most important aspect of cryptography is key management.

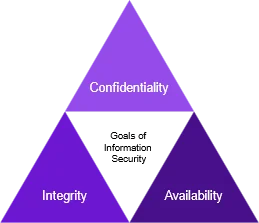

With any cryptographic system, one (or a combination of services) denoted in the following table can be achieved:

Integrity | Integrity ensures that information has not been manipulated or changed by unauthorized individuals without our knowledge; it helps identify unauthorized or unexpected changes to data. |

Authenticity | Authenticity allows verification that a message came from a particular sender. |

Nonrepudiation | Nonrepudiation prevents someone from denying prior actions. There are two flavors of nonrepudiation:

|

Access control | Cryptography enables a form of access control; by controlling the distribution of ciphertext and the corresponding decryption key to only certain people, control over the decryption and, therefore, access to data can also be controlled |

Cryptographic terminology

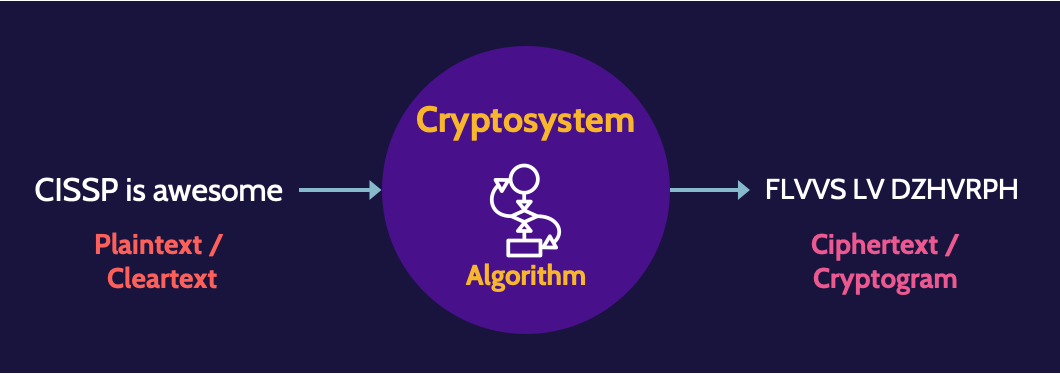

Cryptography involves its own nomenclature. The next figure shows how cryptography systems work:

Plaintext (in this case, "CISSP is awesome") is provided as input into a cryptosystem, and a cryptographic algorithm transforms it into ciphertext. The only way this ciphertext can be transformed back into plaintext by a recipient is through the use of a compatible cryptosystem, the same cryptographic algorithm, and the correct key.

Some of the terms that you will need to be aware of:

- Plaintext

- Encrypt/encryption

- Key/crypto variable

- Decrypt/decryption

- Key clustering

- Work factor

- Initialization Vector (IV)/nonce

- Confusion

- Diffusion

- Avalanche

Key space

The term key space refers to the number of unique keys available based on the length of the key. For example, a 2-bit key has a total of four possible unique keys:

00

01

10

11

Data Encryption Standard (DES) uses a 56-bit key, which equates to 2^56 unique keys, or approximately 72,000,000,000,000,000 (72 followed by 15 zeros, about 72 quadrillion) unique keys.

This is a really large number of keys, but modern computers can brute-force a 56-bit key in a matter of anywhere from a few hours to several days. The amount of time needed to break a key is also known as the work factor.
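The arithmetic behind key space and work factor is easy to reproduce. In the sketch below, the guessing rate of one trillion keys per second is purely an assumption chosen for illustration, not a measurement of any real system.

```python
def key_space(bits):
    """Number of unique keys for a key of the given length in bits."""
    return 2 ** bits


def worst_case_seconds(bits, guesses_per_second):
    """Time to try every key at a given (assumed) guessing rate."""
    return key_space(bits) / guesses_per_second


print(key_space(2))    # 4
print(key_space(56))   # 72057594037927936
# Assumed rate of one trillion guesses per second, chosen only for illustration.
print(worst_case_seconds(56, 10**12) / 3600)  # roughly 20 hours
```

Each additional bit doubles the key space, which is why moving from 56-bit to 128-bit keys changes the work factor so dramatically.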

Substitution and transposition

Encryption involves methods known as substitution and transposition. Encryption is accomplished through the manipulation of bits—1s and 0s—via synchronous or asynchronous means, and patterns must be avoided.

When implemented and used correctly, one-time pads are the only unbreakable cipher systems. Bits are encrypted/decrypted as stream ciphers or block ciphers.

Methods of encryption

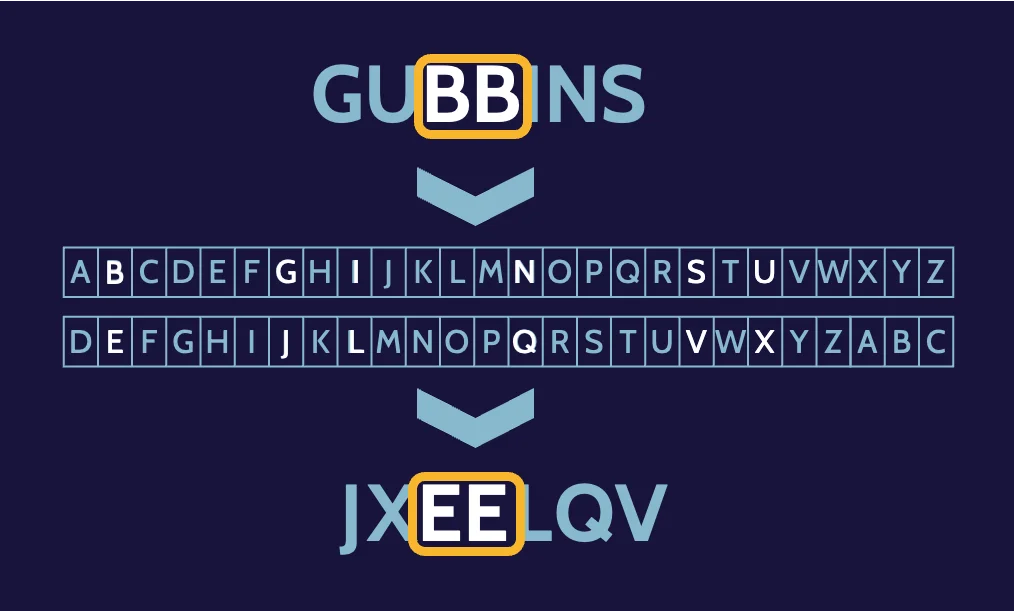

The two core methods are substitution and transposition:

| Substitution | Transposition |
|---|---|
| Characters are replaced with a different character | The order of characters is rearranged |
| GUBBINS > JXEELQV | GUBBINS > BINBUGS |
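
Both rows of the table can be reproduced with a short Python sketch. The function names are hypothetical, and the shift of 3 and the index order used here are inferred from the table's example rather than stated in it.

```python
import string
from typing import Sequence


def caesar_shift(text: str, shift: int) -> str:
    """Substitution: replace each uppercase letter with the letter `shift` places later."""
    alphabet = string.ascii_uppercase
    k = shift % 26
    return text.translate(str.maketrans(alphabet, alphabet[k:] + alphabet[:k]))


def reorder(text: str, order: Sequence[int]) -> str:
    """Transposition: keep the same characters but emit them in a new order."""
    return "".join(text[i] for i in order)


print(caesar_shift("GUBBINS", 3))                 # JXEELQV
print(reorder("GUBBINS", [2, 4, 5, 3, 1, 0, 6]))  # BINBUGS
```

Note that substitution changes which letters appear, while transposition keeps exactly the same letters and only moves them.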

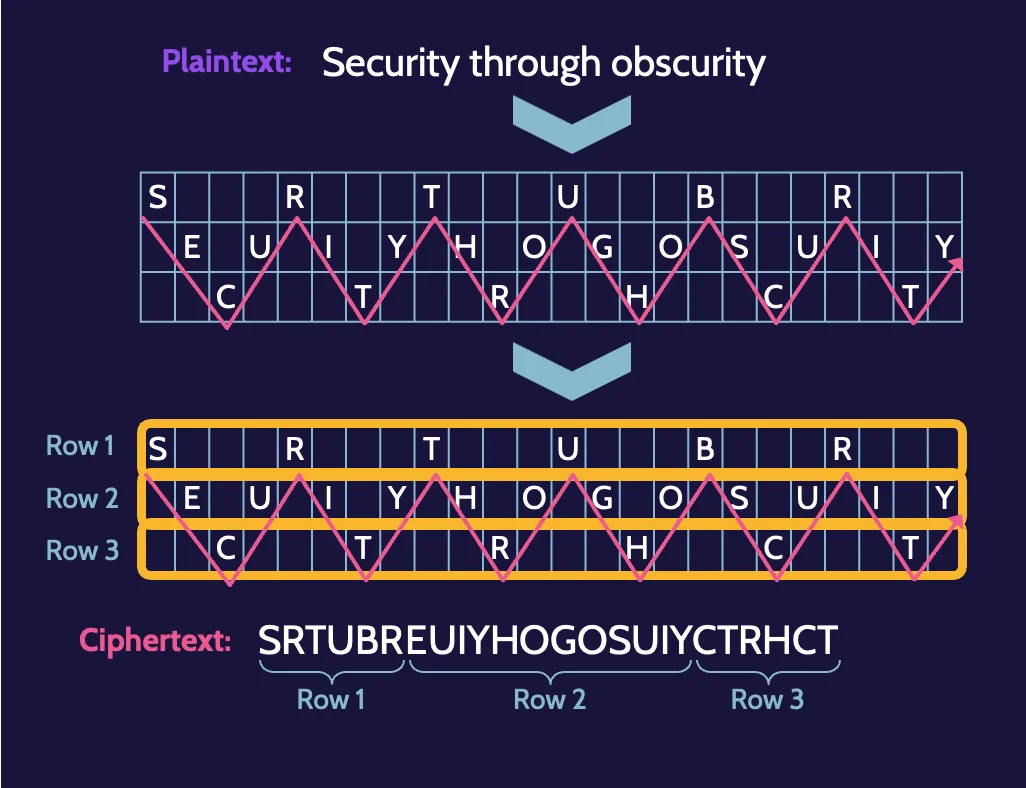

Rail fence (zigzag)

The text is transposed by writing it in a table where each row represents a rail, following a zigzag pattern.
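
A minimal Python sketch of the rail fence idea follows. The function names are hypothetical; the approach assumed here is to compute which rail each character lands on while zigzagging, then read the rails top to bottom.

```python
def rail_pattern(length: int, rails: int) -> list:
    """Rail index for each character position, following the zigzag."""
    pattern, row, step = [], 0, 1
    for _ in range(length):
        pattern.append(row)
        if row == 0:
            step = 1           # bounce off the top rail
        elif row == rails - 1:
            step = -1          # bounce off the bottom rail
        row += step
    return pattern


def rail_fence_encrypt(plaintext: str, rails: int) -> str:
    if rails < 2:
        return plaintext
    pattern = rail_pattern(len(plaintext), rails)
    # Stable sort: positions grouped by rail, left to right within a rail.
    order = sorted(range(len(plaintext)), key=lambda i: pattern[i])
    return "".join(plaintext[i] for i in order)


def rail_fence_decrypt(ciphertext: str, rails: int) -> str:
    if rails < 2:
        return ciphertext
    pattern = rail_pattern(len(ciphertext), rails)
    order = sorted(range(len(ciphertext)), key=lambda i: pattern[i])
    plain = [""] * len(ciphertext)
    for ch, position in zip(ciphertext, order):
        plain[position] = ch
    return "".join(plain)


print(rail_fence_encrypt("WEAREDISCOVEREDFLEEATONCE", 3))
# WECRLTEERDSOEEFEAOCAIVDEN
```

The number of rails acts as the key: both sides need to know it to rebuild the same zigzag.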

Synchronous versus asynchronous

The bits are manipulated via synchronous or asynchronous methods. Synchronous methods work with bits that are synchronized through some type of timing mechanism, such as a clock, and encryption/decryption takes place immediately.

Synchronous | Asynchronous |

|---|---|

|

|

|

|

Substitution patterns in monoalphabetic ciphers

Worth reiterating is that simple substitution and transposition do not hide patterns in monoalphabetic ciphers. Frequency analysis can easily detect patterns in them, which can then lead to the determination of the key.
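
To see why frequency analysis works against a monoalphabetic cipher, consider this small Python sketch. It assumes a simple shift cipher and assumes that "E" is the most common letter in the underlying English plaintext; the function names and sample message are hypothetical, and real analysis would need far more ciphertext than this.

```python
import string
from collections import Counter


def caesar_shift(text: str, shift: int) -> str:
    """Monoalphabetic substitution: every letter is shifted by the same amount."""
    alphabet = string.ascii_uppercase
    k = shift % 26
    return text.translate(str.maketrans(alphabet, alphabet[k:] + alphabet[:k]))


def letter_frequencies(text: str) -> list:
    """Letters of the text ordered from most to least frequent."""
    letters = [c for c in text.upper() if "A" <= c <= "Z"]
    return Counter(letters).most_common()


def guess_shift(ciphertext: str, assumed_most_common: str = "E") -> int:
    """Guess the key by assuming the top ciphertext letter stands for 'E'."""
    top_letter, _count = letter_frequencies(ciphertext)[0]
    return (ord(top_letter) - ord(assumed_most_common)) % 26


ciphertext = caesar_shift("MEET ME HERE AT SEVEN", 3)
print(ciphertext)               # PHHW PH KHUH DW VHYHQ
print(guess_shift(ciphertext))  # 3
```

Because every "E" always becomes the same ciphertext letter, the plaintext's letter distribution survives encryption and gives the key away.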

Substitution—polyalphabetic ciphers

By using polyalphabetic ciphers, frequency analysis becomes much more difficult because patterns are reduced significantly.

The prefix poly means many, so with polyalphabetic ciphers, multiple alphabets are created and used.
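
The Vigenère cipher is a well-known polyalphabetic cipher and serves as a convenient illustration here; the source does not name a specific cipher, so treat this choice, the function name, and the sample key as assumptions. Each key letter selects a different shifted alphabet, so the same plaintext letter can encrypt to different ciphertext letters.

```python
def vigenere_encrypt(plaintext: str, key: str) -> str:
    """Shift each letter by the amount given by the next key letter (A=0, B=1, ...)."""
    out = []
    key_index = 0
    for ch in plaintext.upper():
        if "A" <= ch <= "Z":
            shift = ord(key[key_index % len(key)].upper()) - ord("A")
            out.append(chr((ord(ch) - ord("A") + shift) % 26 + ord("A")))
            key_index += 1  # only letters consume key material
        else:
            out.append(ch)  # leave spaces and punctuation untouched
    return "".join(out)


print(vigenere_encrypt("ATTACKATDAWN", "LEMON"))  # LXFOPVEFRNHR
```

In the output, the two adjacent Ts of ATTACK become X and F, which is exactly the pattern reduction that makes frequency analysis harder.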

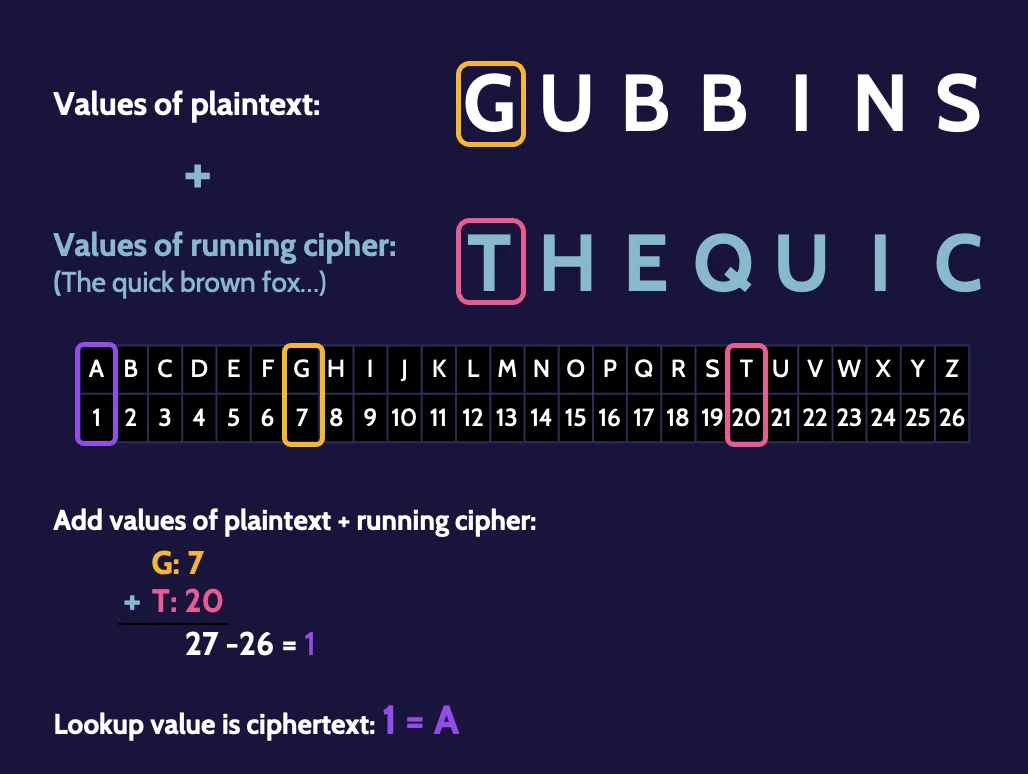

Substitution—running key ciphers

Running key ciphers have been used since World War II. To utilize the running key cipher, the same "book" must exist at both ends of the communication channel.

As long as a previously used key is not reused, this method of communication can remain very secure.

Substitution—one-time pads

With a one-time pad, after every message is encrypted, the key is changed and never reused. One-time pads are the only unbreakable cipher systems.

Stream versus block ciphers

The two types of ciphers that exist are known as stream ciphers and block ciphers. Factors such as speed and the kind of data being protected help determine whether a stream or a block cipher algorithm makes more sense.

| Stream | Block |
|---|---|
| Encrypt/decrypt data one bit at a time | Encrypt/decrypt a block of bits at a time (for example, 64-bit blocks in DES or 128-bit blocks in AES) |

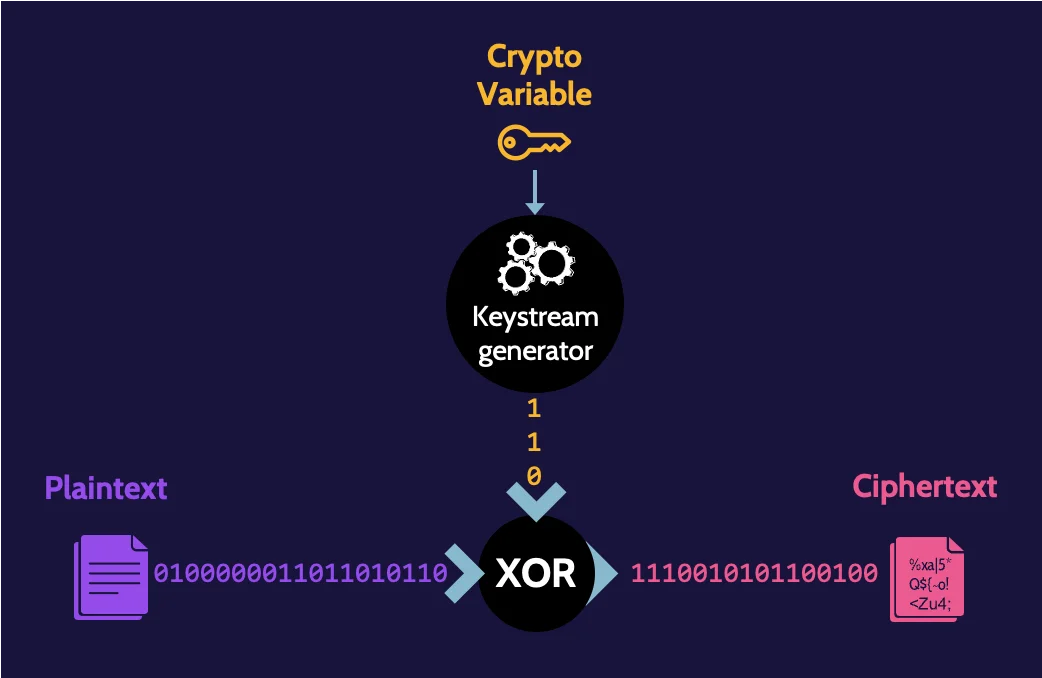

Stream ciphers

Encrypting a single bit, which can only be 0 or 1, leaves very few options, so a bit of creativity needs to be employed to enhance the encryption process:

- Plaintext bits that need to be encrypted are combined with bits generated by a keystream generator.

- The bits are combined using a logical operation called "exclusive or," or XOR.

- The result from each XOR operation becomes the ciphertext.
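
The three steps above can be sketched in Python as follows. This is an illustration under stated assumptions: `secrets.token_bytes` stands in for the keystream generator (a real stream cipher derives its keystream from a key), and the code works on bytes rather than individual bits, which is equivalent because XOR operates bitwise.

```python
import secrets


def xor_bytes(data: bytes, keystream: bytes) -> bytes:
    """XOR each data byte with the matching keystream byte."""
    if len(keystream) < len(data):
        raise ValueError("keystream must be at least as long as the data")
    return bytes(d ^ k for d, k in zip(data, keystream))


plaintext = b"CISSP is awesome"
keystream = secrets.token_bytes(len(plaintext))  # stand-in keystream generator

ciphertext = xor_bytes(plaintext, keystream)   # encrypt
recovered = xor_bytes(ciphertext, keystream)   # decrypt: XOR again with the same keystream

print(ciphertext.hex())
print(recovered)  # b'CISSP is awesome'
```

XOR is its own inverse, so the same operation with the same keystream both encrypts and decrypts. If the keystream is truly random, as long as the message, and never reused, this is the one-time pad described earlier.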

Block ciphers

Instead of bits being encrypted one at a time, they're encrypted in blocks.

Symmetric block modes

In cryptography, stream ciphers generally provide a speed advantage over block ciphers because they work with one bit at a time, whereas block ciphers need to fill blocks and then perform more involved operations on those blocks.

These are the five block cipher modes you need to know for the exam:

- Electronic Codebook (ECB)

- Cipher Block Chaining (CBC)

- Cipher Feedback (CFB)

- Output Feedback (OFB)

- Counter (CTR)

Steganography and null ciphers

Steganography is hiding information of a particular type within something else (like a sound file hidden in a picture). You also need to be familiar with slack space, which is the leftover storage that exists when a file does not need all the space it has been allocated.

A null cipher hides a message by embedding the plaintext message within other plaintext or non-cipher material.

Symmetric cryptography

Symmetric key cryptography is extremely fast and can encrypt massive amounts of data. In the context of networks—trusted and untrusted—where significant amounts of data need to be encrypted/decrypted quickly, symmetric cryptography tends to be the best solution.

A problem with scalability also exists. Scalability refers to the number of symmetric keys that would be required to support secure communications among a large group of users.
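
The scalability problem comes from the fact that every pair of users needs its own shared key, which gives n(n-1)/2 keys for n users. A minimal sketch, with hypothetical function names, and with the asymmetric figure (one public/private key pair per user) included only for comparison:

```python
def symmetric_keys_needed(users: int) -> int:
    """One shared secret key per pair of users: n(n-1)/2."""
    return users * (users - 1) // 2


def asymmetric_keys_needed(users: int) -> int:
    """One public key and one private key per user: 2n."""
    return 2 * users


for n in (10, 100, 1000):
    print(n, symmetric_keys_needed(n), asymmetric_keys_needed(n))
# 10 45 20
# 100 4950 200
# 1000 499500 2000
```

The symmetric key count grows quadratically, which is why large groups quickly become unmanageable with symmetric keys alone.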

Advantages | Disadvantages |

|---|---|

|

|

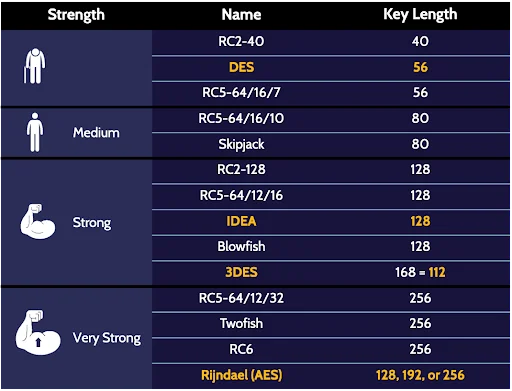

The following table ranks symmetric algorithms from weakest to strongest:

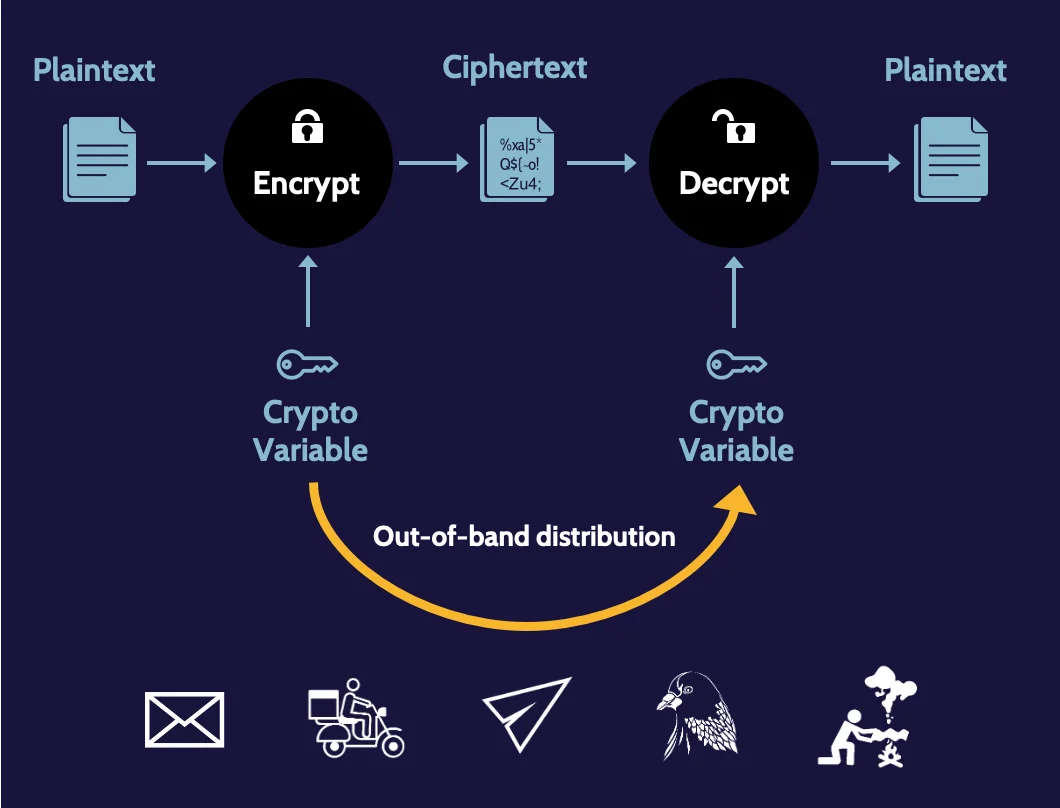

Out-of-band key distribution

With symmetric key cryptography, one of the biggest challenges is key distribution because the sender and receiver of an encrypted message must have the same key.

Sending the key via the same communication channel used to send the message itself is ineffective because the key could easily be intercepted and used to read the encrypted message as well as any further encrypted communication.

Asymmetric cryptography

Asymmetric cryptography solves the key exchange problem associated with symmetric cryptography. It enables digital signatures, digital certificates, authenticity, and nonrepudiation (of origin and delivery) and utilizes key pairs consisting of a public key and a private key.

Because the linkage between the public and private key pair is mathematically based, it's critical that very complex mathematics be utilized to prevent someone from looking at a public key and computing the corresponding private key.

Advantages | Disadvantages |

|---|---|

|

|

To obtain authenticity or proof of origin—identify with certainty who a message came from—a sender should encrypt the message using the sender's private key. Anybody with the sender's public key can decrypt the message and therefore know without a doubt who sent the message, as the sender is the only person having access to their private key.

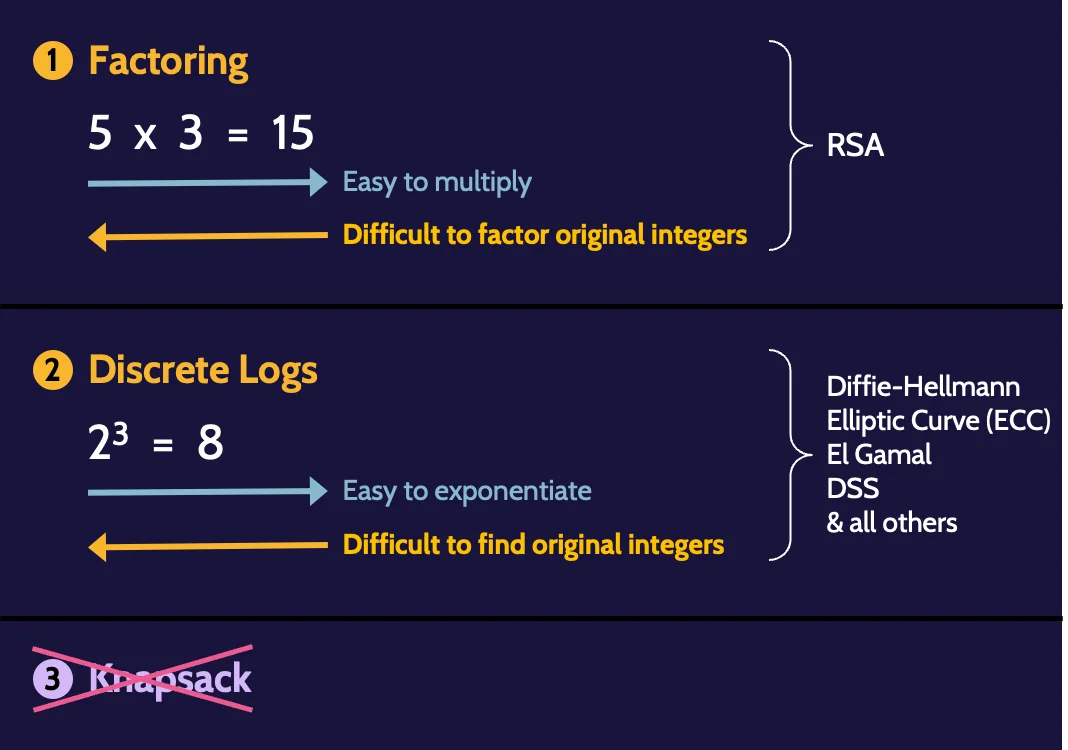

Hard math problems

Factoring and discrete log asymmetric algorithms depend on using very large prime numbers. When using such large numbers, it is very difficult to work backward to determine the original integers.
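
The asymmetry between multiplying and factoring can be illustrated with a toy Python sketch. The primes here are tiny and chosen purely for illustration, and trial division is only the most naive factoring method, used as an assumption to keep the example short; real keys use primes hundreds of digits long.

```python
def trial_division_factor(n: int) -> tuple:
    """Find a factor pair of n by brute force; returns (n, 1) if n is prime."""
    if n % 2 == 0:
        return 2, n // 2
    candidate = 3
    while candidate * candidate <= n:
        if n % candidate == 0:
            return candidate, n // candidate
        candidate += 2  # skip even candidates
    return n, 1


# Forward direction: a single multiplication.
p, q = 53, 61
n = p * q
print(n)  # 3233

# Backward direction: a search whose cost grows with the size of the factors.
print(trial_division_factor(n))  # (53, 61)
```

With toy numbers the search finishes instantly, but the number of candidates to try grows with the size of the smaller prime, while the multiplication stays cheap.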

Asymmetric algorithms

The following table summarizes asymmetric algorithms:

Rivest, Shamir, and Adleman (RSA) | Uses factoring mathematics for key generation. |

Elliptic Curve | Uses discrete logarithm mathematics for key generation. ECC uses shorter keys than RSA to achieve the same level of security, which means ECC is faster and more efficient. |

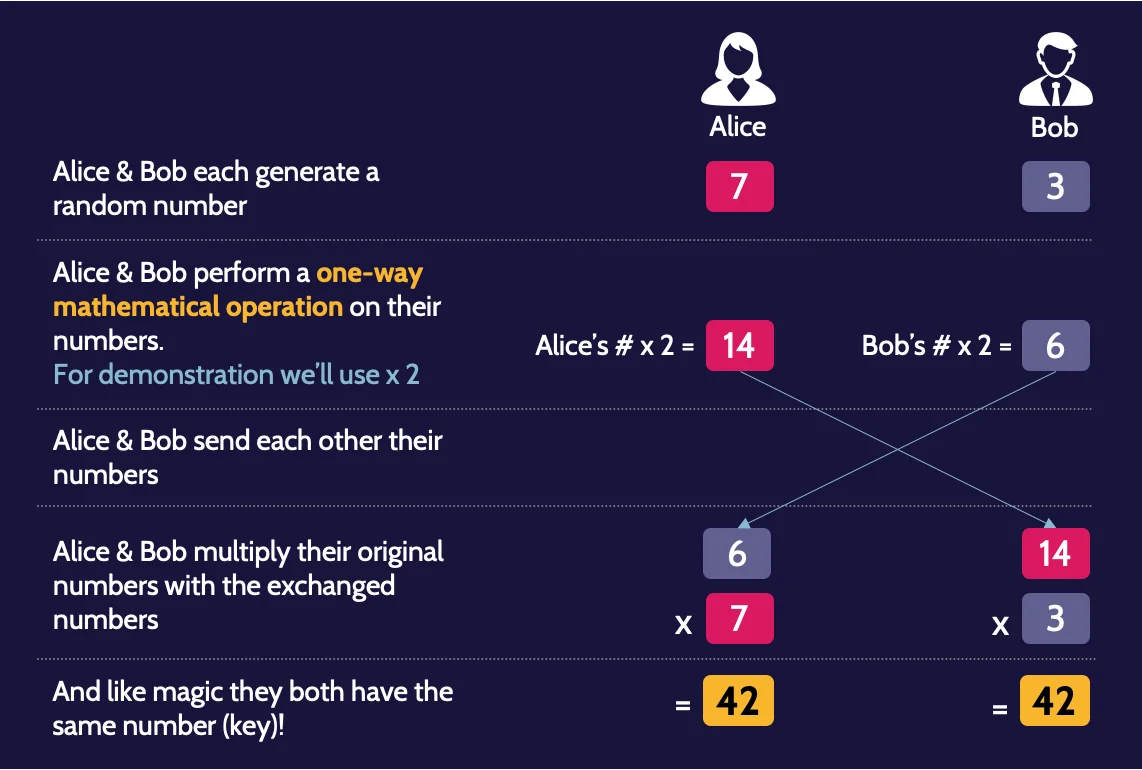

Diffie–Hellman Key Exchange | Uses discrete logarithm mathematics for key generation and is primarily used for the exchange of symmetric keys between parties. |

Hybrid key exchange

Diffie–Hellman Key Exchange (uses discrete logarithms) is an asymmetric algorithm used primarily for symmetric key exchange.
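
A toy Python sketch of the exchange is shown below. The public parameters (prime 23, generator 5) and the function names are assumptions chosen for readability; real deployments use primes of 2048 bits or more, or elliptic-curve groups, and also authenticate the exchanged public values.

```python
import secrets

# Toy public parameters, assumed for illustration only.
P = 23  # public prime modulus
G = 5   # public generator


def make_keypair() -> tuple:
    """Pick a random private value and derive the public value G^private mod P."""
    private = secrets.randbelow(P - 3) + 2  # value in [2, P - 2]
    return private, pow(G, private, P)


def shared_secret(own_private: int, peer_public: int) -> int:
    """Combine our private value with the peer's public value."""
    return pow(peer_public, own_private, P)


alice_private, alice_public = make_keypair()
bob_private, bob_public = make_keypair()

# Only the public values cross the wire; both sides derive the same secret.
alice_secret = shared_secret(alice_private, bob_public)
bob_secret = shared_secret(bob_private, alice_public)
print(alice_secret == bob_secret)  # True
```

The agreed value would then typically be used to derive a symmetric key. An eavesdropper sees only the public values and would have to solve a discrete logarithm to recover either private value.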

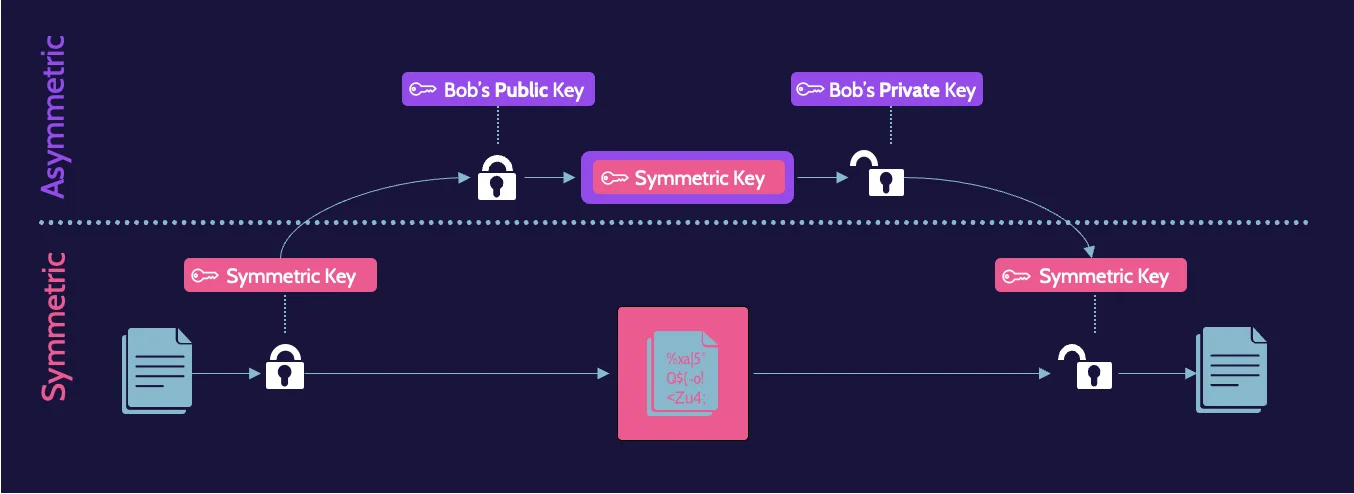

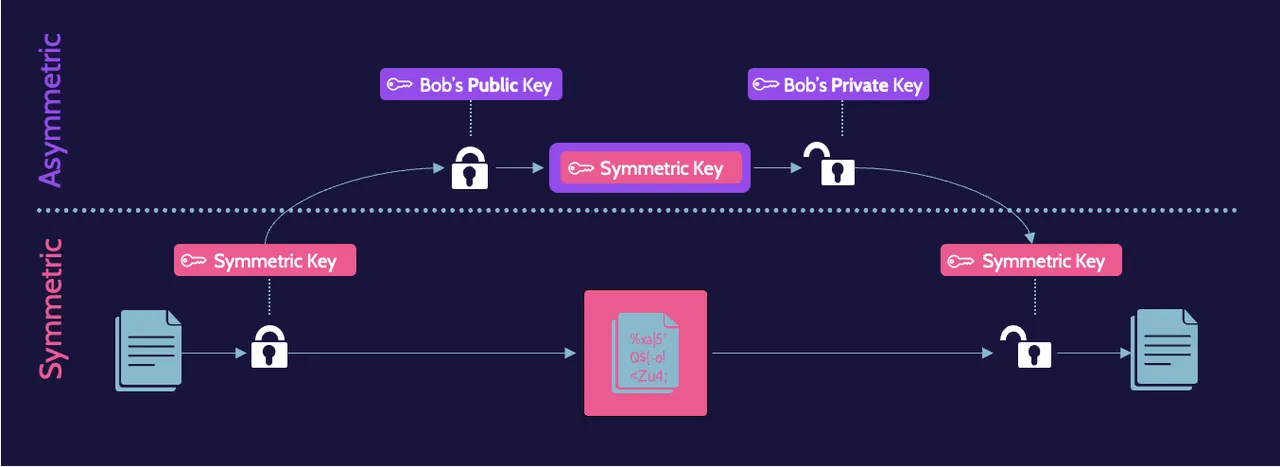

Hybrid cryptography

Hybrid cryptography solutions employ the advantages of symmetric and asymmetric cryptography.

Symmetric algorithms are used for bulk processing and speed, that is, for anything that requires frequent encryption and decryption where both need to be done very quickly. Asymmetric algorithms are used to exchange the symmetric keys.

A combination of both is illustrated here:

Message integrity controls

A message integrity check (MIC) helps to ensure the integrity of a message between the time it is created and the time it is read. A MIC works by creating a representation of the message, which is sent with the message.

In addition, message integrity checks are based upon math, and some use more complex math, and are therefore more effective, than others. The use of simple math can result in a collision, meaning two different messages can result in the same representation.
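
A collision caused by "simple math" is easy to demonstrate. The sketch below uses a hypothetical additive checksum (the sum of all byte values modulo 256) as the message representation; this specific checksum is an assumption for illustration, not a scheme named in the text.

```python
def additive_checksum(message: bytes) -> int:
    """Naive integrity check: sum of all byte values, modulo 256."""
    return sum(message) % 256


original = b"PAY 12"
tampered = b"PAY 21"  # same bytes, different order

print(additive_checksum(original) == additive_checksum(tampered))  # True
```

Because addition ignores the order of the bytes, swapping two characters changes the message without changing its checksum, so the tampering goes undetected.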

Hashing algorithms

Hashing algorithms, on the other hand, are much more sensitive to small bit changes and much more resistant to collisions, and they are, therefore, much more effective as integrity control mechanisms.

The key elements that make hashing algorithms so effective are:

- Fixed length digest

- One-way

- Deterministic

- Calculated on the entire message

- Uniformly distributed

Several of the most popular hashing algorithms are noted below:

- MD5: 128-bit digest

- SHA-1: 160-bit digest

- SHA-2: 224/256/384/512-bit digests

- SHA-3: 224/256/384/512-bit digests
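
Python's standard `hashlib` module can be used to check the digest lengths listed above and to observe how strongly a digest reacts to a small change. This is a minimal sketch; the helper function names and the sample messages are hypothetical.

```python
import hashlib


def digest_bits(algorithm: str, message: bytes) -> int:
    """Digest length in bits for a named hashlib algorithm."""
    return hashlib.new(algorithm, message).digest_size * 8


def differing_bits(a: bytes, b: bytes) -> int:
    """Count the bit positions in which two equal-length digests differ."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


message = b"CISSP is awesome"
for name in ("md5", "sha1", "sha256", "sha3_256"):
    print(name, digest_bits(name, message))  # 128, 160, 256, 256

original = hashlib.sha256(message).digest()
altered = hashlib.sha256(b"CISSP is awesomf").digest()  # last character changed
print(differing_bits(original, altered), "of 256 bits differ")
```

The digest length stays fixed regardless of message size, the same input always yields the same digest, and a one-character change typically flips around half of the output bits.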

Digital signatures

Digital signatures provide three services:

- Integrity

- Authenticity

- Nonrepudiation

Uses of digital signatures include providing the same legal significance as a written signature, code signing to verify the integrity and authenticity of software, and nonrepudiation (of origin and delivery).

The process of creating a digital signature is quite easy and fundamentally involves two steps:

- The sender hashes the message, which produces a fixed-length message digest.

- The sender encrypts the hash value with the sender's private key.
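
The two steps can be sketched with textbook RSA and deliberately tiny numbers. Everything here is an assumption for illustration: the primes 61 and 53, the exponents, the function names, and the reduction of the SHA-256 digest modulo the toy modulus. Real signatures use keys of 2048 bits or more together with a standardized padding scheme.

```python
import hashlib

# Toy RSA key, assumed for illustration only (far too small to be secure).
P, Q = 61, 53
N = P * Q   # 3233, public modulus
E = 17      # public exponent
D = 2753    # private exponent: (E * D) % ((P - 1) * (Q - 1)) == 1


def digest_as_int(message: bytes) -> int:
    """Step 1: hash the message, then reduce the digest to fit the toy modulus."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N


def sign(message: bytes) -> int:
    """Step 2: transform the digest with the sender's private key."""
    return pow(digest_as_int(message), D, N)


def verify(message: bytes, signature: int) -> bool:
    """Recipient: recover the digest with the public key and compare."""
    return pow(signature, E, N) == digest_as_int(message)


message = b"CISSP is awesome"
signature = sign(message)
print(verify(message, signature))            # True
print(verify(message, (signature + 1) % N))  # False: altered signature
```

Verification needs only the public values, so anyone can check the signature, while producing it requires the private exponent. With such a small modulus, different messages can map to the same reduced digest, which is one reason the toy parameters are unsuitable for real use.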

Digital certificates

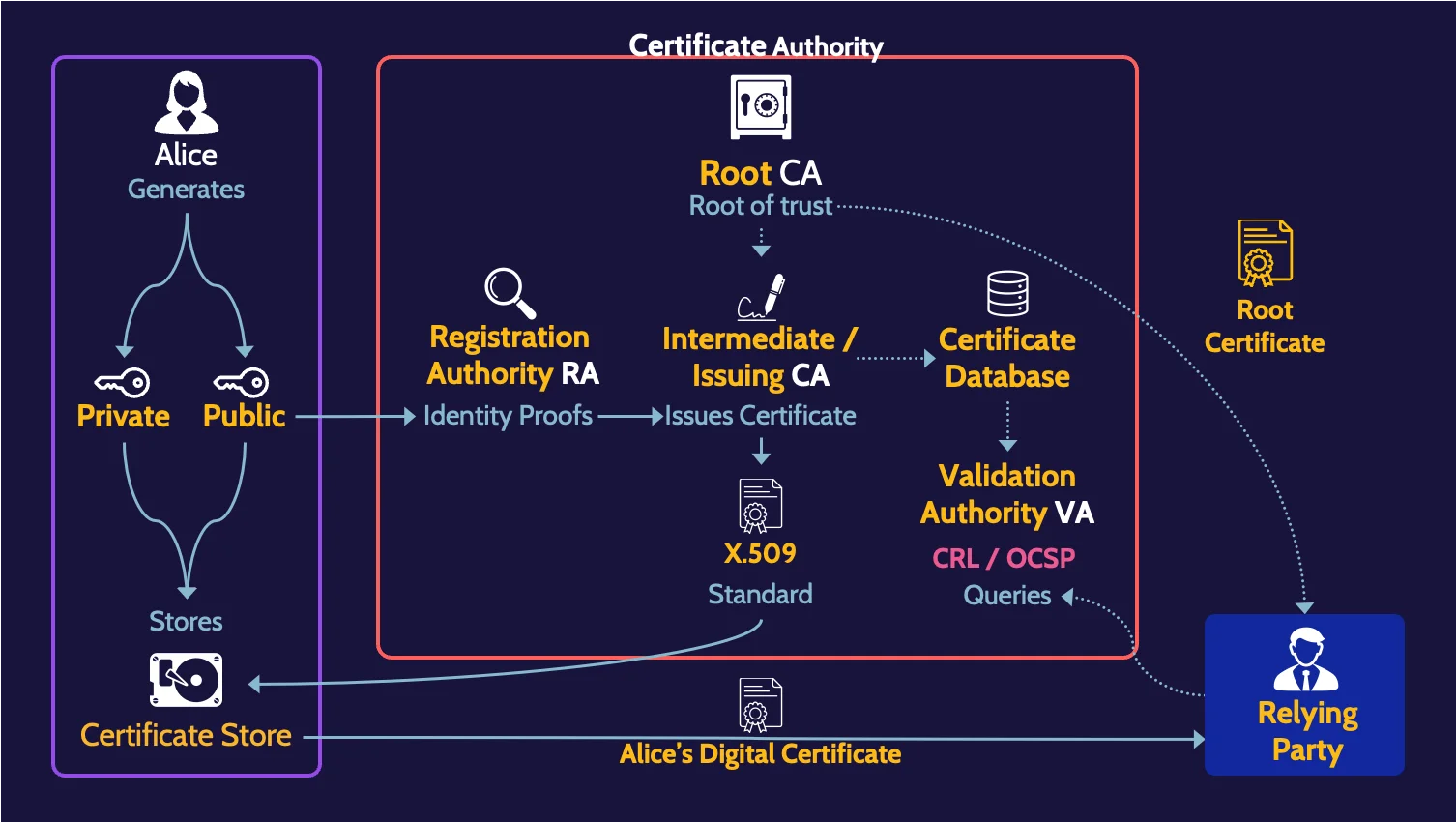

Digital certificates bind an individual to their public key, and all certificate authorities conform to the X.509 certificate standard.

The "root of trust" or "trust anchor" is the foundation of all digital certificates and is represented by a root certificate authority (CA). The Root CA self-signs its certificate, and it is then used to sign subordinate certificates, usually known as intermediate CAs. Intermediate CAs can also sign certificates, shown as Issuing CAs.

Digital certificate best practices suggest that public/private key pairs be periodically replaced, which means the associated digital certificate is also replaced. When a private key has been compromised, a digital certificate should be revoked by the issuing certificate authority.

Digital certificate replacement and revocation are summarized here:

| Replacement | Revocation |
|---|---|
| Regular replacement of expired certificates | Revocation of a certificate when the associated private key has been compromised |

Revocation confirmation methods, on the other hand, are:

| Certificate Revocation List (CRL) | Online Certificate Status Protocol (OCSP) |
|---|---|
| Client downloads and searches the list of serial numbers of all revoked certificates from the CA | Client queries CA for revocation status of specific certificate serial number |

With certificate pinning, when a certificate from a web server is trusted, each subsequent visit to the site does not include a request for a new copy of the certificate.

Finally, a certificate's life cycle includes a number of distinct phases:

- Enrollment

- Issuance

- Validation

- Revocation

- Renewal

Public key infrastructure

Public key infrastructure (PKI) is the basis for keys to be distributed and owners of public keys to be verified. It consists of several components:

| Component | Role |
|---|---|
| Certificate Authority (CA) | Root of trust |
| Registration Authority (RA) | Performs identity proofing on behalf of the CA |
| Intermediate/Issuing CA | Issues certificates on behalf of the CA |
| Validation Authority (VA) | Responds to revocation queries on behalf of the CA |
| Certificate DB | List of certificates issued by the CA, plus the revocation list |

The root of trust in any PKI is the CA, which ultimately issues certificates.

Key management

Proper key management is paramount to the security of any cryptographic system. It involves numerous activities, which are summarized in the following table:

Key Creation/ | The key generation/creation process includes the following attributes:

|

Key Distribution | Key distribution is the practice of securely distributing keys. Methods used could include:

|

Key Storage | Key storage is one of the most critical—if not the most critical—aspects of securing a cryptographic system. Two types of systems are utilized for key storage:

|

Key Change/Rotation | Key change/rotation refers to how often encryption keys should be replaced. |

Key Destruction/ Disposition | Key disposition refers to how keys are handled, especially in instances where data in the cloud is concerned. Two primary methods of key disposition/destruction are most often used:

|

Key Recovery | Key recovery refers to techniques used to recover a key. Three primary techniques exist: split-knowledge, dual control, and key escrow. |

Finally, Kerckhoffs' principle states that a cryptosystem should be secure even if everything about the system, except the key, is public knowledge.

S/MIME

S/MIME is a standard for public key encryption and provides security services for digital messaging applications. It requires the establishment or utilization of public key infrastructure (PKI) in order to work properly.

The basic security services offered by S/MIME are:

- Authentication

- Nonrepudiation of origin

- Message integrity

- Confidentiality

MIME does not address security issues, but security features were developed and added to MIME to create S/MIME.

S/MIME adds features to email messaging, including:

- Digital signatures for authentication of the sender

- Encryption for message privacy

- Hashing for message integrity and nonrepudiation of origin

3.7 Understand methods of cryptanalytic attacks

Cryptanalysis

The goal of cryptographic attacks can vary. There are multiple types of cryptographic attacks, including common ones like:

- Man-in-the-middle attack. The attacker sits between two communicating parties and impersonates each party to the other.

- Replay attack. It's like a man-in-the-middle attack, as the attacker is again able to monitor traffic flowing between two or more parties. However, this time the attacker aims to capture useful information, such as authentication data, and retransmit (replay) it later.

- Pass-the-hash attack. The goal of the attacker is to gain access to valid password hashes that can then be used to bypass standard authentication steps and authenticate to a system as a legitimate user.

- Temporary files attack. The attacker may be able to read the memory space and gain access to the key, which could then be used for much broader purposes.