Domain 7 of the CISSP Certification exam covers security operations, one of the front lines of an organization's cybersecurity. The fight against cybercrime includes the concept of the security operations center (SOC), logging and monitoring, preventive measures, and the integration of security into organizational operations.

Security operations refer to how organizations handle security investigations, apply resource protection, and maintain detective measures. Essentially, they are the day-to-day activities through which the security function supports the entire organization's security initiatives.

These are the essential points of CISSP Domain 7 you need to know to pass the exam.

7.1 Understand and comply with investigations

Securing the scene

Investigators need to be able to conduct reliable investigations that will stand up to scrutiny and cross-examination. Hence, securing the scene is an essential and critical part of every investigation.

Securing the scene might include any/all of the following:

- Sealing off access to the area where a crime may have been committed.

- Taking photographs.

- Documenting the location of evidence.

- Not touching computers, mobile devices, thumb drives, hard drives, or anything else that may have been used as part of the crime.

The same concept applies to computer forensic investigations: once evidence has been contaminated, it can't be decontaminated.

Evidence collection and handling

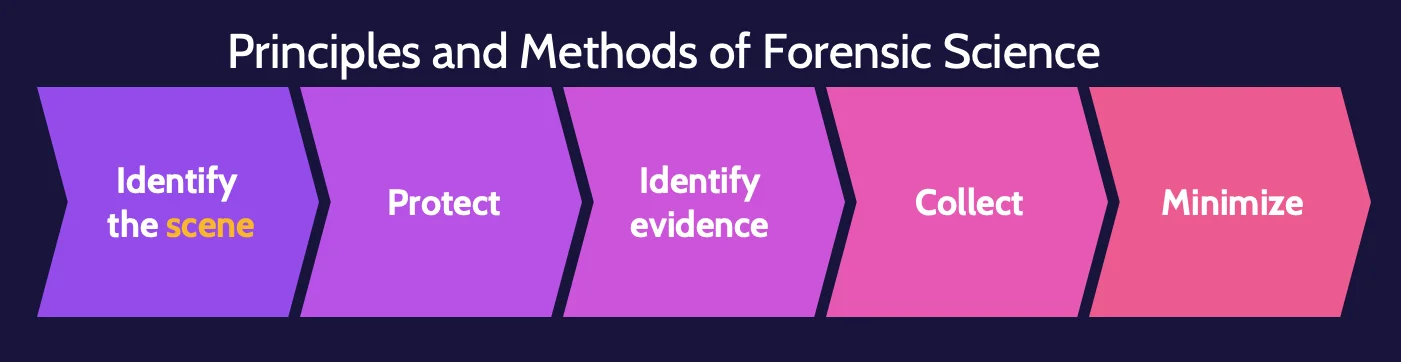

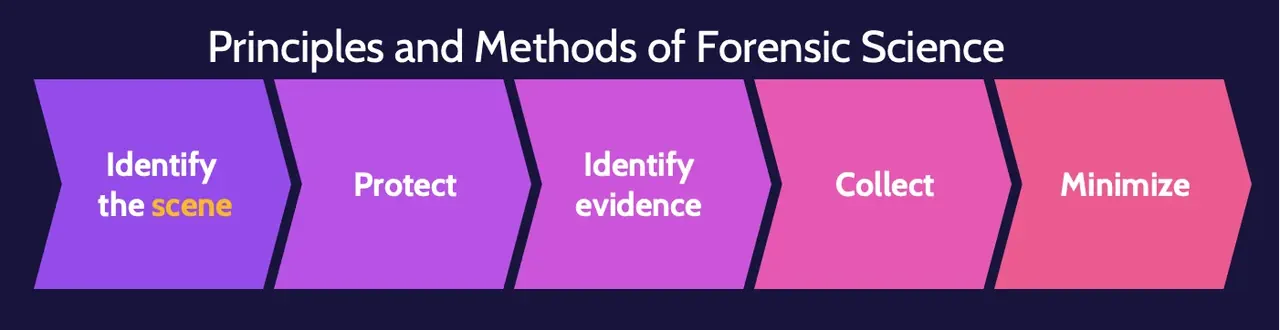

Forensic investigation process

Whether in response to a crime or incident, a breach of organizational policy, troubleshooting system or network issues, or several other reasons, digital forensic methodologies can assist in finding answers, solving problems, and, in some cases, successfully prosecuting crimes.

The forensic investigation process should include the following:

- Identification and securing of a crime scene.

- Proper collection of evidence that preserves its integrity and the chain of custody.

- Examination of all evidence.

- Further analysis of the most compelling evidence.

- Final reporting.

Sources of information and evidence

Sources of information and evidence as part of a computer security investigation often include:

- Oral/written statements. Statements given to police, investigators, or as testimony in court by people who witness a crime or who may have information deemed pertinent to an investigation.

- Written documents. Handwritten, typed, or printed documents such as checks, letters, wills, receipts, or contracts, to name a few, that may be relevant for the sake of an investigation.

- Computer systems. In the context of an investigation, a computer system could include the unit that houses the CPU, motherboard, and other system-related components that might store data in a non-volatile manner, as well as the storage devices—SSD, HDD (external/internal), and USB devices.

- Visual/audio. As part of a computer security investigation, visual and audio evidence could include photographs, video, taped recordings, and surveillance footage from security cameras.

In addition to sources of information, you also need to take numerous types of evidence into account:

- Real evidence

- Direct evidence

- Circumstantial evidence

- Corroborative evidence

- Hearsay evidence

- Best evidence rule

- Secondary evidence

A simple yet effective way to identify where to look for evidence is Locard's exchange principle: whenever a crime is committed, the perpetrator takes something from the scene and leaves something behind.

Digital/computer forensics

Digital forensics is the scientific examination and analysis of data from storage media so that the information can be used as part of an investigation to identify the culprit or the root cause of an incident.

Live evidence

Live evidence is data stored in a running system in places like random access memory (RAM), cache, and buffers, among others.

If the keyboard is tapped, the mouse is moved, the plug is pulled, or the system is powered off, live evidence changes or disappears completely. Even examining a live system changes the state of the evidence, and once power to the system is disrupted, the live evidence is gone.

Forensic copies

Another major source of digital evidence on a computer system is the hard drive. Whenever a forensic investigation of a hard drive is conducted, two identical bit-for-bit copies of the original hard drive should be created first.

A bit-for-bit copy is an exact copy of every bit on the original drive, and specialized tools are required to create one.
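To confirm that a copy really is bit-for-bit identical, investigators typically compare cryptographic hashes of the original and each copy. The Python sketch below assumes the original and the copies are available as image files (in practice they would be acquired through a write blocker with dedicated imaging tools); `sha256_of` and `copies_match` are hypothetical helper names.

```python
import hashlib
from pathlib import Path

def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large disk images don't have to fit in RAM."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def copies_match(original: Path, *copies: Path) -> bool:
    """True only if every copy hashes identically to the original."""
    reference = sha256_of(original)
    return all(sha256_of(copy) == reference for copy in copies)
```

For example, `copies_match(Path("original.img"), Path("copy1.img"), Path("copy2.img"))` returns `False` if even one bit differs in either copy. The original's hash is also worth recording in the case documentation so later examiners can verify it.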

Why is it harder to do a forensic analysis of mobile devices?

- Manufacturers frequently change OS structure, file structure, services, and connectors.

- No single method or tool can extract all the data.

- Hibernation and suspension of apps.

- Extensive new training is required for examiners.

Reporting and documentation

At this point, all evidence has been examined, and the most relevant evidence should be documented for use by all relevant stakeholders, including:

- Prosecution/defense

- Judge/jury

- Regulators

- Investors

- Insurers

Artifacts

Forensic artifacts are remnants of a breach or attempted breach of a system or network and may or may not be relevant to an investigation or response.

Artifacts can be found in numerous places, including:

- Computer systems

- Web browsers

- Mobile devices

- Hard drives

- Flash drives

They're breadcrumbs that can potentially lead back to an intruder or at least identify their actions and the path they followed while in the system or network.

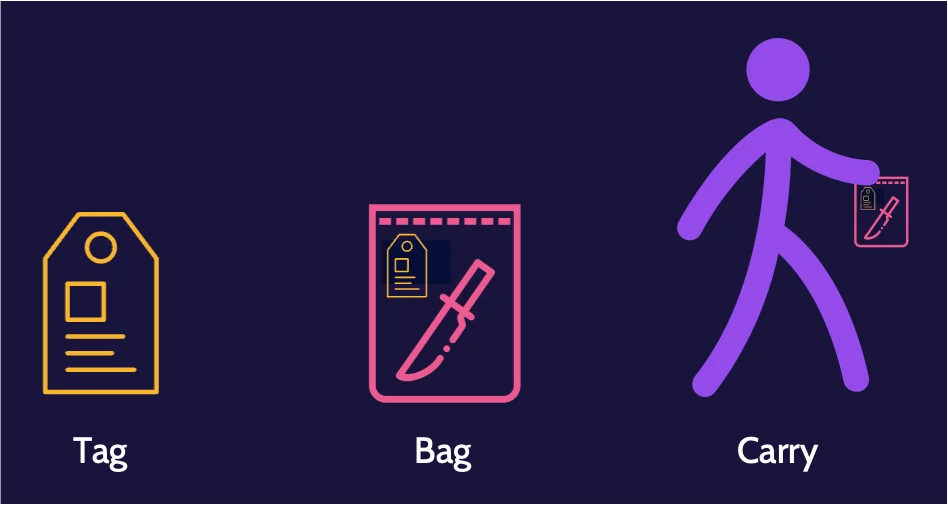

Chain of custody

The primary focus of the chain of custody is controlling evidence to maintain its integrity for presentation in court. It must be established immediately and maintained throughout the investigation.

In practice, the chain of custody records who collected and handled what evidence, when, and where.

A helpful way to think about establishing the chain of custody is to tag, bag, and carry the evidence.
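For digital records, one possible way to make a custody log tamper-evident is to chain entries together with hashes, so that altering an earlier entry breaks every link after it. This is a minimal sketch, not a standard format: the `CustodyEntry` fields mirror the who/what/when/where questions above, and all names are hypothetical.

```python
import hashlib
import json
from dataclasses import asdict, dataclass

@dataclass(frozen=True)
class CustodyEntry:
    evidence_id: str  # what
    handler: str      # who
    action: str       # e.g. "collected", "transferred", "analyzed"
    timestamp: str    # when (ISO 8601)
    location: str     # where
    prev_hash: str    # digest of the previous entry ("" for the first)

    def digest(self) -> str:
        # Canonical JSON so identical fields always produce the same hash
        payload = json.dumps(asdict(self), sort_keys=True).encode()
        return hashlib.sha256(payload).hexdigest()

def append_entry(log: list[CustodyEntry], evidence_id: str, handler: str,
                 action: str, timestamp: str, location: str) -> None:
    prev = log[-1].digest() if log else ""
    log.append(CustodyEntry(evidence_id, handler, action, timestamp, location, prev))

def verify(log: list[CustodyEntry]) -> bool:
    """True if every entry still links to the entry before it."""
    if log and log[0].prev_hash != "":
        return False
    return all(cur.prev_hash == prev.digest() for prev, cur in zip(log, log[1:]))
```

Because nothing references the newest entry, its digest should also be recorded somewhere independent (for example, on the physical evidence tag) to protect it.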

Five rules of evidence

For any evidence to stand the best chance of surviving legal and other scrutiny, it should exhibit five characteristics, also known as the five rules of evidence, as shown in the following table:

| Rule | Description |
|---|---|
| Authentic | Evidence is not fabricated or planted, and this can be proven through crime scene photos or things like bit-for-bit copies of hard drives. This points back to securing the scene. |
| Accurate | Evidence has not been changed or modified; it has integrity. |
| Complete | Evidence must be complete, with all parts presented. In other words, all pieces of evidence must be available and shared, whether they support or fail to support the case. |
| Convincing or reliable | Evidence must be conveyed in a way that allows anybody to understand what is being presented, and it must display a high degree of veracity (truthfulness). |
| Admissible | Evidence is accepted as part of a case and allowed into the court proceedings. Chain of custody can help, but it does not guarantee admissibility. |

Investigative techniques

Several investigative techniques can be used when conducting analysis. One is media analysis, which examines bits on a hard drive that may no longer have pointers to them but still hold data.
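Media analysis often includes file carving: scanning raw bytes for known file signatures rather than relying on file system pointers. The sketch below carves JPEG images by searching for the standard JPEG start (`FF D8 FF`) and end (`FF D9`) markers. It is deliberately naive and assumes contiguous, unfragmented files, which real carving tools don't; `carve_jpegs` is a hypothetical name.

```python
JPEG_HEADER = b"\xff\xd8\xff"  # start-of-image marker followed by the next marker's 0xFF
JPEG_FOOTER = b"\xff\xd9"      # end-of-image marker

def carve_jpegs(raw: bytes, max_size: int = 10 * 1024 * 1024) -> list[bytes]:
    """Naive header/footer carving over a raw disk image held in memory."""
    found = []
    start = raw.find(JPEG_HEADER)
    while start != -1:
        end = raw.find(JPEG_FOOTER, start + len(JPEG_HEADER))
        if end == -1:
            break  # header with no footer: truncated or overwritten file
        end += len(JPEG_FOOTER)
        if end - start <= max_size:  # skip implausibly large matches
            found.append(raw[start:end])
        start = raw.find(JPEG_HEADER, end)
    return found
```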

On the other hand, software analysis focuses on an application, especially malware. With malware, the goal is to determine exactly how it works and what it is trying to do. An important facet is attribution: determining who created the software and where.

Types of investigations related to an incident include criminal, civil, regulatory, and administrative.

7.2 Conduct logging and monitoring activities

Security Information and Event Management (SIEM)

Security Information and Event Management (SIEM) systems ingest logs from multiple sources, compile and analyze log entries, and report relevant information.

SIEM systems are complex and require expertise to install and tune properly. They require a properly trained team that understands how to read and interpret what they see, as well as what escalation procedures to follow when a legitimate alert is raised. SIEM systems represent technology, process, and people, and each is relevant to overall effectiveness.

With traditional logging, where log files are captured and maintained only on individual systems, it would take enormous effort to access and analyze all the captured data and determine whether anything out of the ordinary or malicious is taking place. A SIEM system, by contrast, incorporates significant intelligence into its functionality, allowing it to ingest large volumes of logged events and analyze and correlate them very quickly.

SIEM capabilities include:

- Aggregation

- Normalization

- Correlation

- Secure storage

- Analysis

- Reporting
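To make aggregation, normalization, and correlation concrete, here is a minimal Python sketch assuming two hypothetical log sources: an SSH-style text line and a VPN appliance record. Each is normalized into a common schema, and a simple correlation rule flags users with repeated failed logins across all sources within a time window, something that would be hard to spot by reading each system's logs separately. The formats, field names, and thresholds are illustrative assumptions, not any SIEM product's rule language.

```python
from collections import defaultdict
from datetime import datetime, timedelta

def normalize_ssh(line: str) -> dict:
    """Hypothetical format: '2024-05-01T10:00:01 sshd FAILED user=alice src=10.0.0.5'."""
    ts, _service, result, user_kv, src_kv = line.split()
    return {
        "time": datetime.fromisoformat(ts),
        "user": user_kv.split("=", 1)[1].lower(),
        "outcome": "failure" if result == "FAILED" else "success",
        "source": "ssh",
        "ip": src_kv.split("=", 1)[1],
    }

def normalize_vpn(record: dict) -> dict:
    """Hypothetical VPN record with 'ts', 'username', 'result', and 'client_ip' keys."""
    return {
        "time": datetime.fromisoformat(record["ts"]),
        "user": record["username"].lower(),  # sources disagree on case
        "outcome": record["result"],
        "source": "vpn",
        "ip": record["client_ip"],
    }

def correlate_failures(events: list[dict], threshold: int = 5,
                       window: timedelta = timedelta(minutes=10)) -> set[str]:
    """Users with >= threshold failed logins, across all sources, inside one window."""
    failures = defaultdict(list)
    for event in sorted(events, key=lambda e: e["time"]):  # aggregate in time order
        if event["outcome"] == "failure":
            failures[event["user"]].append(event["time"])
    flagged = set()
    for user, times in failures.items():
        left = 0
        for right, t in enumerate(times):  # sliding time window
            while t - times[left] > window:
                left += 1
            if right - left + 1 >= threshold:
                flagged.add(user)
                break
    return flagged
```

Here, three SSH failures and two VPN failures for the same user would individually look minor but together trip the five-failure rule.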

Example sources of event data

SIEM systems allow log data from multiple sources, such as those noted below, to be captured in one location.

- Security appliances

- Network devices

- DLP

- Data activity

- Applications

- Operating systems

- Servers

- IPS/IDS

Threat intelligence

The term threat intelligence is an umbrella term encompassing threat research and analysis and emerging threat trends. It is an important element of any organization's digital security strategy that equips security professionals to proactively anticipate, recognize, and respond to threats.

Actionable threat intelligence can also be gleaned from documents like vendor trend reports, public sector team reports (like US-CERT), related information sharing and analysis centers (ISACs), and more.

User and Entity Behavior Analytics (UEBA)

UEBA (also known as UBA) analysis engines are typically included with SIEM solutions or may be added via subscription. As the name implies, UEBA focuses on the analysis of user and entity behavior.

At its core, UEBA monitors the behavior and patterns of users and entities, logs and correlates the underlying data, analyzes the data, and triggers alerts when necessary.
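A very simplified version of the baselining idea behind UEBA: build a per-user baseline for some behavior and alert when a new observation deviates sharply from it. This sketch uses a z-score against daily history; the metric (MB downloaded per day) and the threshold of three standard deviations are assumptions, and real UEBA engines use much richer models, such as peer-group comparisons and machine learning.

```python
from statistics import mean, stdev

def is_anomalous(history: list[float], observed: float, z_threshold: float = 3.0) -> bool:
    """Flag observed if it sits more than z_threshold standard deviations above the baseline."""
    if len(history) < 2:
        return False  # not enough data to build a baseline yet
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return observed > mu  # perfectly stable baseline: any increase is unusual
    return (observed - mu) / sigma > z_threshold

# Hypothetical daily download volumes (MB) for one user
baseline_mb = [120, 95, 110, 130, 105, 115, 100]
```

With this baseline, a 125 MB day passes quietly, while a sudden 2,400 MB day (a possible sign of data exfiltration) raises an alert.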

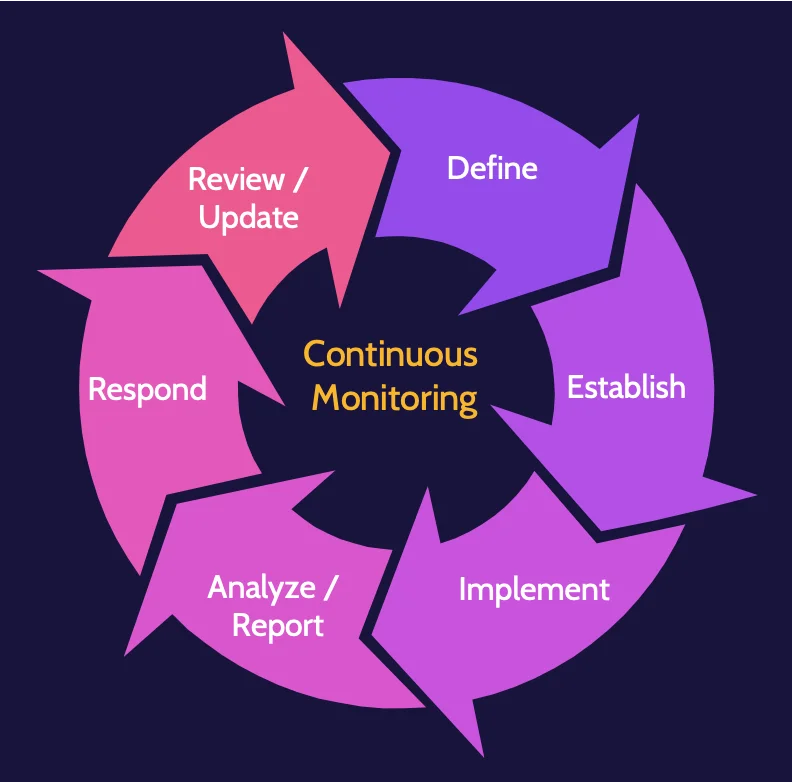

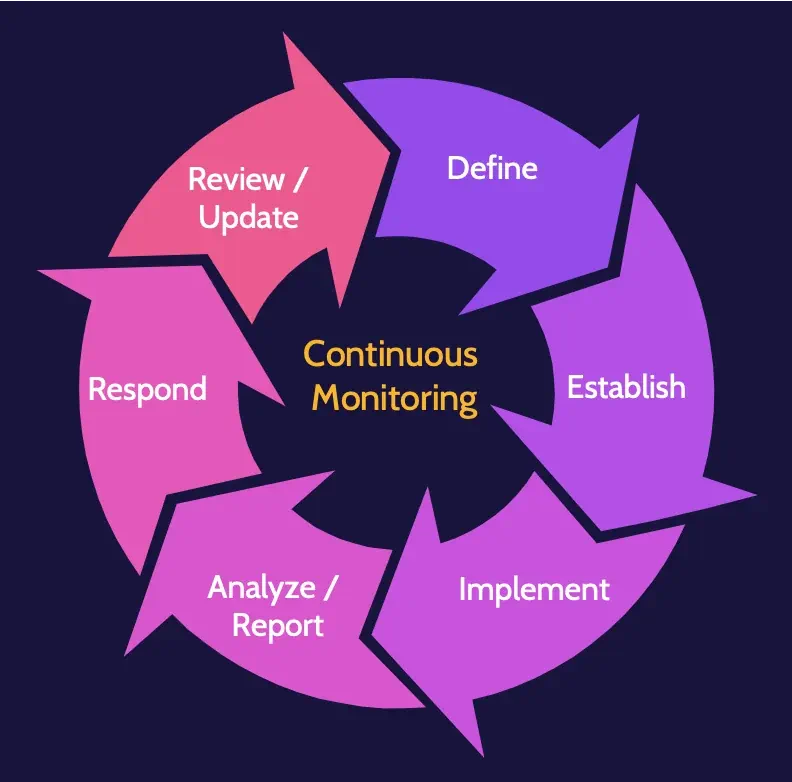

Continuous monitoring

After a SIEM is set up, configured, tuned, and running, it must be routinely updated and continuously monitored to function most effectively. Effective continuous monitoring encompasses technology, processes, and people.

Continuous monitoring steps are:

- Define

- Establish

- Implement

- Analyze/report

- Respond

- Review/update

7.3 Perform configuration management (CM)

Asset inventory

Provisioning relates to the deployment of assets—hardware, software, devices, and so on—within an organization. Part of provisioning should include maintaining and updating a related asset inventory database anytime an asset is added or removed.

Assets should be managed as part of an overall asset management life cycle.

Configuration management

Configuration management is an integral part of secure provisioning and relates to the proper configuration of a device at the time of deployment.

Policies, standards, baselines, and procedures inform configuration management, and hardening should be considered as part of the configuration management process.

Additionally—especially in large organizations—automated tools can be used for provisioning purposes.
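Automated tools can also check deployed systems against the approved baseline and report configuration drift. The sketch below is a minimal, hypothetical version: the setting names and values are made up for illustration, and real tools read settings from the device itself.

```python
MISSING = "<missing>"

def find_drift(baseline: dict, actual: dict) -> dict:
    """Map each drifted setting to (expected, actual)."""
    drift = {}
    for key, expected in baseline.items():
        current = actual.get(key, MISSING)
        if current != expected:
            drift[key] = (expected, current)
    for key in actual.keys() - baseline.keys():
        # Settings present on the device but absent from the approved baseline
        drift[key] = ("<not in baseline>", actual[key])
    return drift

# Hypothetical hardened baseline and a device that has drifted from it
baseline = {"ssh_root_login": "no", "password_min_length": 14, "telnet_enabled": False}
device = {"ssh_root_login": "yes", "password_min_length": 14, "ftp_enabled": True}
```

Running `find_drift(baseline, device)` reports the re-enabled root login, the missing telnet setting, and the unapproved FTP setting, each of which would feed back into the change management process.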

7.4 Apply foundational security operations concepts

Foundational security operations concepts

Implementation of foundational security operations concepts can significantly improve security within an organization.

Foundational security operations concepts include: need to know/least privilege, separation of duties (SoD) and responsibilities, privileged account management (PAM), job rotation, and service level agreements (SLAs).
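Separation of duties lends itself to automated checks. As a minimal sketch, assuming a hypothetical list of role pairs that one person should never hold together, the function below reports users whose current role assignments violate SoD.

```python
# Hypothetical pairs of duties that one person should never hold together
CONFLICTING_ROLES = {
    frozenset({"create_vendor", "approve_payment"}),
    frozenset({"develop_code", "deploy_to_production"}),
}

def sod_violations(assignments: dict[str, set[str]]) -> dict[str, list[frozenset]]:
    """Return, per user, every conflicting role pair that user currently holds."""
    result = {}
    for user, roles in assignments.items():
        hits = [pair for pair in CONFLICTING_ROLES if pair <= roles]  # subset test
        if hits:
            result[user] = hits
    return result
```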

Privileged account management

Privileged accounts refer to system accounts that typically have significant power. System administrator accounts are one example of a privileged account, often giving what's known as "root" or "admin" access to a system.

Job rotation

When an organization employs job rotation, it is essentially telling employees that, from time to time, another employee will assume their duties and they'll assume someone else's.

Service Level Agreements (SLAs)

An SLA contains terms that set time frames and performance expectations for specific operations agreed upon within the overall contract.
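SLA time frames are often expressed as availability targets. Assuming a 30-day month and availability measured as uptime divided by total time (real SLAs define their own measurement windows and exclusions), a 99.9% target allows 43,200 × 0.001 ≈ 43.2 minutes of downtime per month. The helper names below are hypothetical.

```python
MINUTES_PER_DAY = 24 * 60

def allowed_downtime_minutes(availability_pct: float, period_days: int = 30) -> float:
    """Downtime budget implied by an availability target over the period."""
    return period_days * MINUTES_PER_DAY * (1 - availability_pct / 100)

def sla_met(availability_pct: float, downtime_minutes: float, period_days: int = 30) -> bool:
    """True if the measured downtime stays within the SLA's budget."""
    return downtime_minutes <= allowed_downtime_minutes(availability_pct, period_days)
```

Each extra "nine" cuts the budget by a factor of ten: 99.99% leaves only about 4.3 minutes per month.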

7.5 Apply resource protection techniques

Protecting media

Media management should consider all types of media as well as short- and long-term needs and evaluate:

- Confidentiality

- Access speeds

- Portability

- Durability

- Media format

- Data format

Data storage media might include any of the following:

- Paper

- Microforms (microfilm and microfiche)

- Magnetic (HD, disks, and tapes)

- Flash memory (SSD and memory cards)

- Optical (CD and DVD)

In addition, Mean Time Between Failures (MTBF) is an important criterion when evaluating storage media, especially where valuable or sensitive information is concerned.
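MTBF is usually computed from observed operating hours and failures. If you additionally assume a constant failure rate (an exponential model, which is a simplification), you can estimate the chance that a single unit fails within a year. The function names below are hypothetical.

```python
import math

HOURS_PER_YEAR = 8760

def mtbf_hours(total_operating_hours: float, failures: int) -> float:
    """Observed MTBF: total operating time divided by the number of failures seen."""
    return total_operating_hours / failures

def annual_failure_probability(mtbf: float) -> float:
    """Chance one unit fails within a year, assuming a constant failure rate."""
    return 1 - math.exp(-HOURS_PER_YEAR / mtbf)
```

For example, a drive rated at 1,000,000 hours MTBF has roughly a 0.87% chance of failing in a year under this model, so a fleet of 500 such drives should expect about four failures annually.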

Associated with media management is the protection of the media itself, which typically involves policies and procedures, access control mechanisms, labeling and marking, storage, transport, sanitization, use, and end-of-life.

The management of hardware and software assets is closely aligned with the information above. An owner should be assigned to each asset, with each owner accountable for protecting that asset.

7.6 Conduct incident management

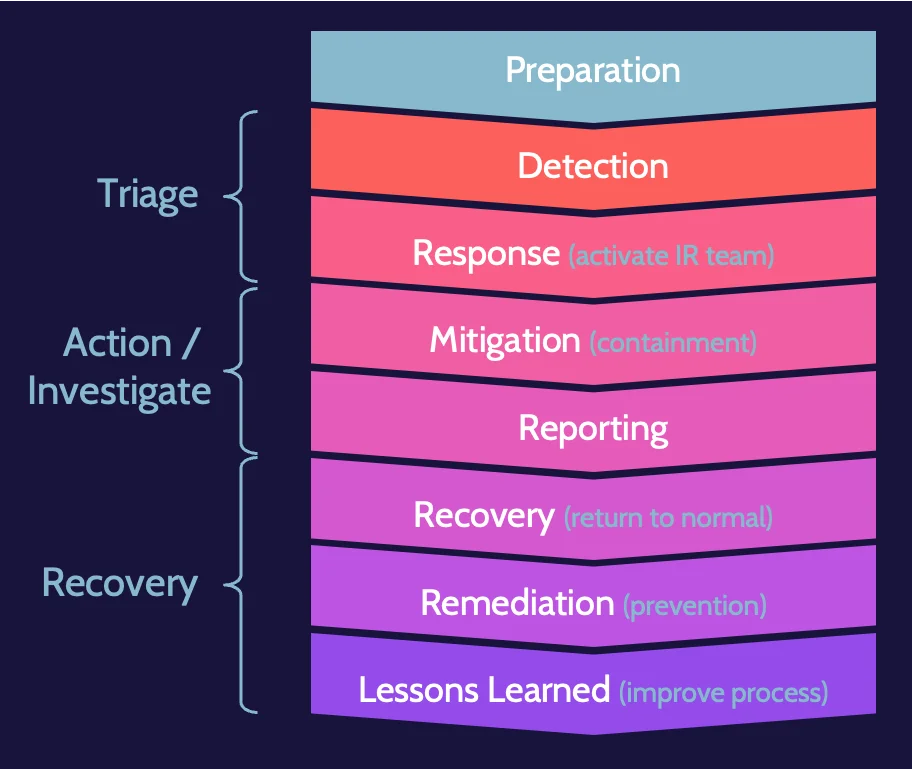

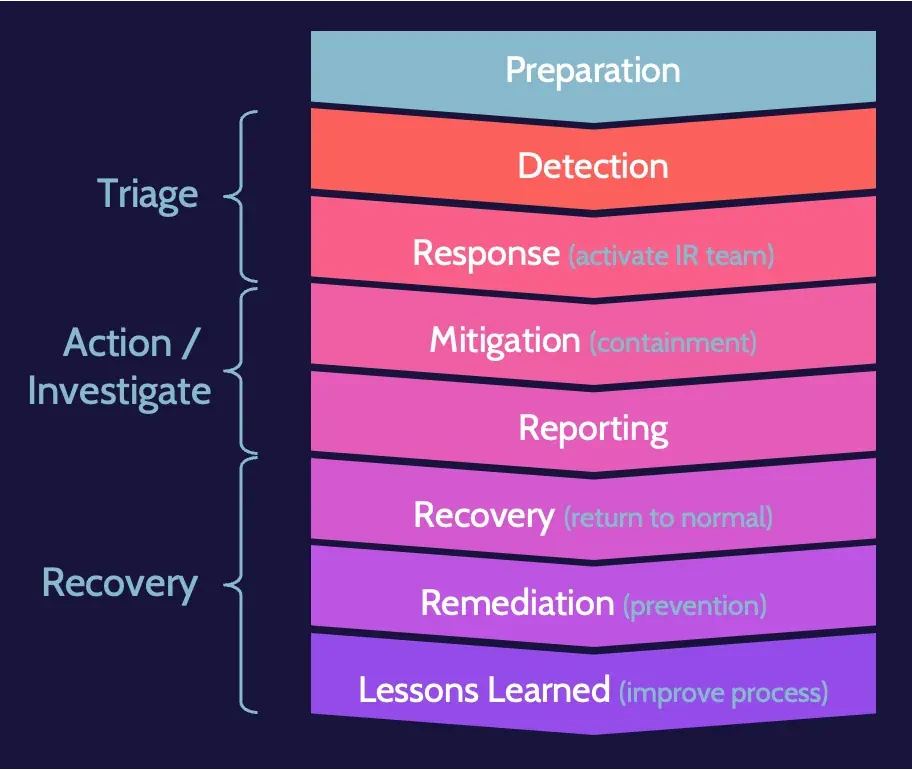

7.6.1 Incident response process

Incident response is the process used to detect and respond to incidents and to reduce the impact when incidents occur. It attempts to keep a business operating or to restore operations as quickly as possible in the wake of an incident.

These are the main goals of incident response:

- Provide an effective and efficient response to reduce impact to the organization.

- Maintain or restore business continuity.

- Defend against future attacks.

Events and incidents

To know when an incident response process should be initiated, an important distinction needs to be made between events and incidents.

Events take place continually, and the vast majority are insignificant; however, events that lead to some type of adversity can be deemed incidents, which should trigger an organization's incident response process.

Detection examples

Organizations must have tools in place that can help detect and identify incidents. A combination of automated and manual tools is usually the best and most effective approach:

- IPS/IDS

- DLP

- Anti-malware

- SIEM

- Administrative review

- Motion sensors

- Cameras

- Guards

Examples of incidents are as follows:

- Malware

- Hacker attack

- Insider attack

- Employee error

- System error

- Data corruption

- Workplace injury

The incident response process steps are:

| Step | Description |
|---|---|
| Preparation | Preparation is critical, as it helps anticipate all the steps to follow. It includes developing the IR process, assigning IR team members, and defining everything that happens when an incident is identified. |
| Detection | Pointing back to the distinction between an event and an incident, the goal of detection is to identify an adverse event (an incident) and begin dealing with it. |
| Response (IR team) | After an incident has been identified, the IR team should be activated. Among its first steps is an impact assessment to determine how severe the incident is, how long the impact might last, who else might need to be involved, and so on. |
| Mitigation (containment) | In addition to conducting an impact assessment, the IR team will attempt to minimize (contain) damage or impact from the incident. The IR team's job at this point is not to fix the problem; it's simply to prevent further damage. |
| Reporting | Reporting occurs throughout the incident response process. Once an incident is mitigated, formal reporting occurs because numerous stakeholders often need to understand what happened. |
| Recovery (return to normal) | At this point, the goal is to start returning to normal and get back to business as usual. |
| Remediation (prevention) | Remediation takes place in parallel with recovery. Its goal is to implement fixes and improvements to systems and processes to prevent a similar incident from occurring again. |
| Lessons learned (improve process) | An all-encompassing review of the incident, used to improve the incident response process for the future. |

7.7 Operate and maintain detective and preventive measures

Malware

Malware is malicious software that negatively impacts a system. These are the characteristics of each malware type:

| Malware type | Characteristics |
|---|---|
| Virus | The defining characteristic of a virus is that it must be triggered in some way by the user. |
| Worm | A worm can self-propagate, spreading through a network or a series of systems on its own by exploiting a vulnerability in those systems. |
| Companion | Companion malware is helper software. It's not malicious on its own; rather, it could be something like a wrapper that accompanies the actual malware. |
| Macro | Macros are often found in Microsoft Office products, like Excel, and are created using a straightforward programming language to automate tasks. Because a programming language is involved, macros can be programmed to be malicious and harmful. |
| Multipartite | Multipartite malware spreads in several different ways. Stuxnet is a well-known example. |
| Polymorphic | Every time it replicates across a network, polymorphic malware can change aspects of itself, like file name, file size, and code structure, to evade detection. |
| Trojan | A Trojan horse looks harmless or desirable but contains malicious code. Trojans are often found in software that is easily and freely downloadable from the internet. |
| Botnet | A botnet is a large number of infected systems that have been harnessed together to act in unison. |
| Boot sector infector | Boot sector infectors install themselves in the boot sector of a hard drive. |
| Hoaxes/pranks | Hoaxes and pranks are not actually software. Instead, they're typically social engineering, via email or other means, that intends harm (hoaxes) or just a good laugh (pranks). |
| Logic bomb | A logic bomb is code that executes when a specific condition is met, such as a certain date arriving or a particular user account being deleted. |
| Stealth | Stealth malware uses various active techniques to avoid detection. |
| Ransomware | Ransomware is gaining in popularity very rapidly. It typically encrypts a system or a network of systems, effectively locking users out, and then demands a ransom payment (usually in a digital currency, like Bitcoin) in exchange for the decryption key. |
| Rootkit | Similar to stealth malware, a rootkit attempts to mask its presence on a system. As the name implies, a rootkit typically includes a collection of malware tools that an attacker can use according to specific goals. |
| Data diddler | A data diddler makes small changes to data over a long period to evade detection. |
| Zero-day | A zero-day is any type of malware that's never been seen in the wild before. The vendor of the impacted product is unaware of it, as are the security companies that create anti-malware software intended to protect systems. |

Anti-malware

Anti-malware software is designed to prevent malware from being triggered. However, one of the best anti-malware measures is effective policy combined with user training and awareness, since a virus requires human interaction to trigger it.

Signature-based anti-malware

More technical methods of detecting malware include using signature-based anti-malware systems. These systems contain what are known as definition files—files that have signature characteristics of currently known malware—and scan systems using this information to detect suspicious and compromised files.

Signature-based systems cannot detect zero-day malware, and they're only as accurate as their definition files, so those files must be updated constantly.
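The simplest form of signature matching compares a file's cryptographic hash with a set of known-bad hashes. Real engines use byte patterns and more flexible signatures, but this sketch shows why the approach misses zero-days and polymorphic malware: change a single byte and the hash no longer matches. The `known_bad_hashes` set stands in for a definition file and is hypothetical.

```python
import hashlib
from pathlib import Path

def sha256_file(path: Path) -> str:
    return hashlib.sha256(path.read_bytes()).hexdigest()

def scan(paths: list[Path], known_bad_hashes: set[str]) -> list[Path]:
    """Return the files whose SHA-256 appears in the definition set."""
    return [p for p in paths if sha256_file(p) in known_bad_hashes]
```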

Heuristic systems

Heuristic systems do not look for malware based on a particular pattern or signature. Rather, they look at the underlying code or behavior of a file. They generally work one of two ways:

- Static code scanning techniques: the scanner examines code in files without executing it, similar to white box testing.

- Dynamic techniques: the scanner runs executable files in a sandbox to observe their behavior.

The major advantage of heuristic scanners is they can potentially detect zero-day malware.
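A static heuristic scanner doesn't need to have seen a sample before; it scores code for suspicious traits. This minimal sketch looks for a few Windows API names associated with process injection and a command commonly used by ransomware to delete shadow copies. The indicator list, weights, and threshold are illustrative assumptions; a real engine uses large, tuned rule sets and accepts some false positives as the price of catching unknown malware.

```python
# Hypothetical indicators and weights; real engines use far larger, tuned rule sets.
INDICATORS = {
    b"VirtualAllocEx": 3,           # allocate memory in another process
    b"WriteProcessMemory": 3,       # write into another process's memory
    b"CreateRemoteThread": 4,       # start a thread inside another process
    b"vssadmin delete shadows": 5,  # delete Windows shadow copies (common in ransomware)
}

def heuristic_score(data: bytes) -> int:
    """Sum the weights of every indicator found anywhere in the file's bytes."""
    return sum(weight for marker, weight in INDICATORS.items() if marker in data)

def is_suspicious(data: bytes, threshold: int = 7) -> bool:
    return heuristic_score(data) >= threshold
```

No single indicator crosses the threshold here; it takes a combination, such as the three injection-related APIs together, which is a common pattern for reducing false positives.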

Machine learning and Artificial Intelligence (AI)-Based Tools

Together, machine learning and artificial intelligence-based tools can:

- Empower systems to use data to learn and improve without being explicitly programmed.

- Make predictions through the use of mathematical models to analyze patterns.

ML/AI security application

ML/AI is specifically being leveraged to provide:

- Threat detection and classification

- Network risk scoring

- Automation of routine security tasks and optimization of human analysis

- Response to cybercrime, such as:

  - Unauthorized access

  - Evasive malware

  - Spear phishing

7.8 Implement and support patch and vulnerability management

Patch management

Patch management helps create a secure environment by fixing—patching—security flaws and vulnerabilities in systems. Patching only secures a system against known vulnerabilities.

Once the need for an available patch has been identified, a change management process should be employed as part of the decision to move forward and install the patch.

Determining patch levels

There are three methods for determining patch levels:

- Agent. Update software (agent) installed on devices.

- Agentless. Remotely connect to each device.

- Passive. Monitor traffic to infer patch levels.

Deploying patches can be done manually or automatically.
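Whichever method collects version data, the core check is comparing each device's reported version with the minimum patched version. This sketch assumes purely numeric dotted versions and a hypothetical inventory; it compares versions as integer tuples because plain string comparison would wrongly rank `2.4.9` above `2.4.58`.

```python
def parse_version(version: str) -> tuple[int, ...]:
    """'2.4.58' -> (2, 4, 58). Assumes purely numeric, dot-separated versions."""
    return tuple(int(part) for part in version.split("."))

def needs_patch(installed: str, minimum_patched: str) -> bool:
    a, b = parse_version(installed), parse_version(minimum_patched)
    width = max(len(a), len(b))
    # Pad with zeros so "10.2" and "10.2.0" compare as equal
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a < b

def unpatched_hosts(inventory: dict[str, str], minimum_patched: str) -> list[str]:
    """inventory maps host name -> version reported by an agent, agentless scan, or passive monitoring."""
    return sorted(host for host, v in inventory.items() if needs_patch(v, minimum_patched))
```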

7.9 Understand and participate in change management processes

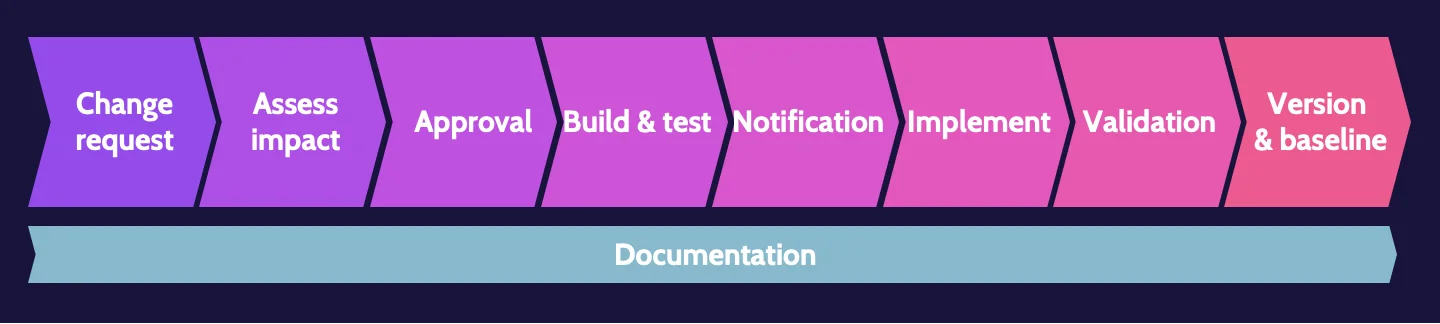

Change management

Change management ensures that the costs and benefits of changes are analyzed and changes are made in a controlled manner to reduce risks.

Change management process

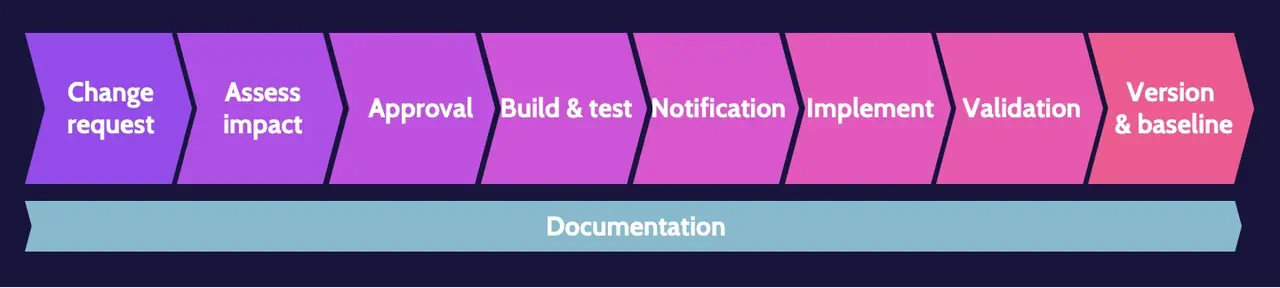

The change management process includes multiple steps that build upon each other. The steps are outlined below:

- Change request. A change request can come from any part of an organization and pertain to almost any topic. Organizations typically use some type of change management software.

- Assess impact. After a change request is made, however small the request might be, the impact of the potential change must be assessed.

- Approval. The approval decision is based on the requested change and its impact assessment, and common sense plays a big part in the process.

- Build and test. After approval, any change should be developed and tested, ideally in a test environment.

- Notification. Prior to implementing any change, key stakeholders should be notified.

- Implement. After testing and notification of stakeholders, the change should be implemented.

- Validation. Once implemented, senior management and stakeholders should again be notified to validate the change.

- Version and baseline. Documentation should take place at each step noted, but at this point it's critical to ensure all documentation is complete and to identify the version and baseline related to a given change.
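Change management software typically enforces that these steps happen in order. As a minimal sketch (hypothetical class and step names, with no rejection path for denied changes), the process can be modeled as a sequence in which a change can't skip ahead, with the history doubling as a documentation trail.

```python
STEPS = ["requested", "impact_assessed", "approved", "built_and_tested",
         "stakeholders_notified", "implemented", "validated", "versioned_and_baselined"]

class ChangeRequest:
    def __init__(self, summary: str) -> None:
        self.summary = summary
        self.history = ["requested"]  # documentation trail, one entry per completed step

    @property
    def status(self) -> str:
        return self.history[-1]

    def advance(self, step: str) -> None:
        """Record the next step; refuse to skip, repeat, or go past the last step."""
        position = STEPS.index(self.status)
        expected = STEPS[position + 1] if position + 1 < len(STEPS) else None
        if step != expected:
            raise ValueError(f"cannot go from {self.status!r} to {step!r}; expected {expected!r}")
        self.history.append(step)
```

Trying to jump from `impact_assessed` straight to `implemented`, for example, raises an error instead of silently bypassing approval and testing.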

7.10 Implement recovery strategies

Failure modes

Failure modes refer to what happens in an environment when something—a component in a system, an entire system, or a facility—fails. Three failure modes exist:

| Failure mode | Description |
|---|---|
| Fail-soft (fail-open) | Fail into a state of less security, for example, a firewall allowing all traffic through if it fails. This could cause significant security problems. |
| Fail-secure (fail-closed) | Fail into a state of the same or greater security, for example, a firewall blocking all traffic if it fails. Though this might block legitimate users, it would also maintain security by blocking all attackers. |
| Fail-safe | Fail into a state that prioritizes the safety of people, for example, the doors to a secure facility unlocking automatically when a fire alarm goes off. |
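Fail-open and fail-closed behavior often comes down to what a component does when its own logic errors out. The sketch below wraps a hypothetical firewall rule check; if evaluating the rules raises an exception, the configured failure mode decides whether traffic is allowed. Fail-safe concerns life safety rather than packet filtering, so it isn't modeled here.

```python
from enum import Enum

class FailureMode(Enum):
    FAIL_OPEN = "fail-open"      # fail-soft: allow traffic when the filter breaks
    FAIL_CLOSED = "fail-closed"  # fail-secure: block traffic when the filter breaks

def evaluate_rules(packet: dict) -> bool:
    """Hypothetical rule set: allow only TCP port 443. Raises KeyError on malformed input."""
    return packet["proto"] == "tcp" and packet["dport"] == 443

def decide(packet: dict, mode: FailureMode) -> bool:
    """Return True to allow the packet, False to block it."""
    try:
        return evaluate_rules(packet)
    except Exception:
        # The filtering logic itself failed; fall back to the configured failure mode.
        return mode is FailureMode.FAIL_OPEN
```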

Backup storage strategies

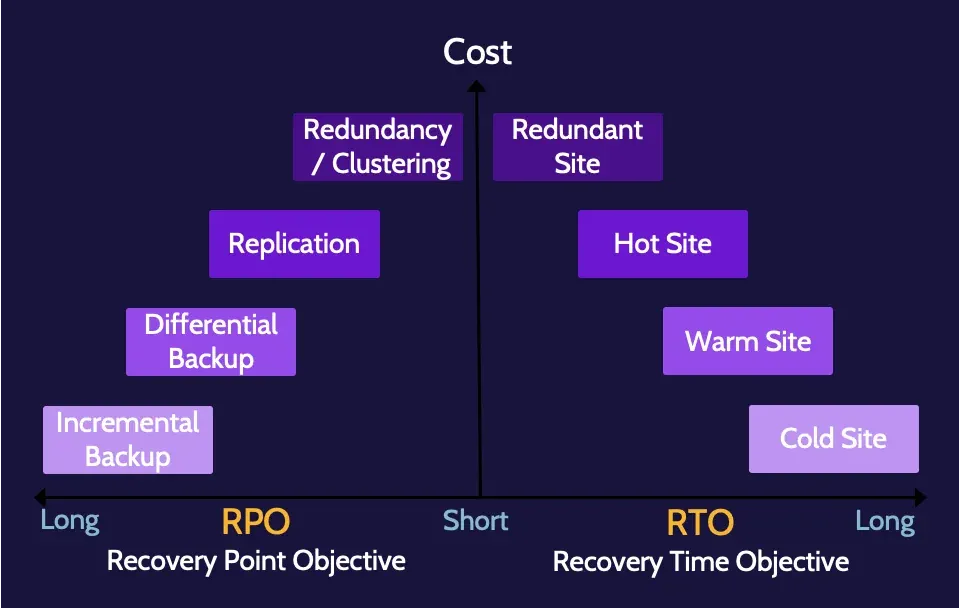

Backup strategies are driven by organizational goals and objectives and typically focus on backup and restore time as well as storage needs.

Archive bit

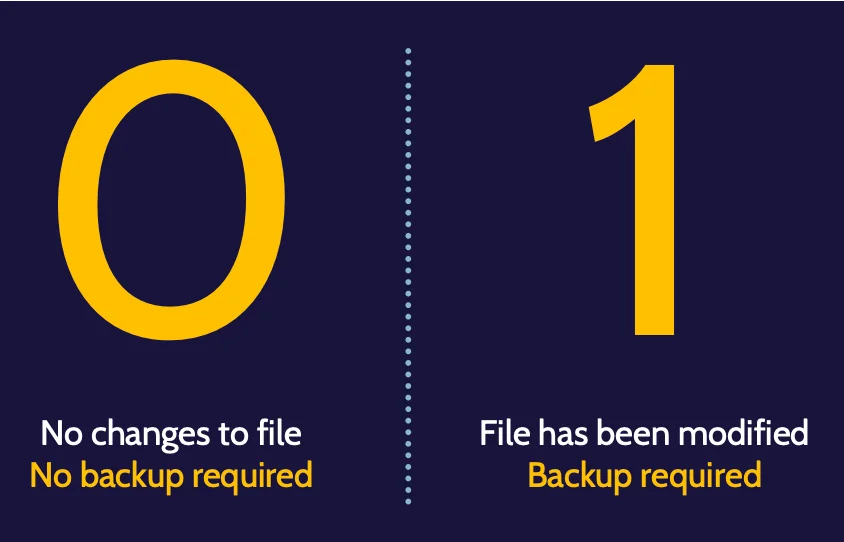

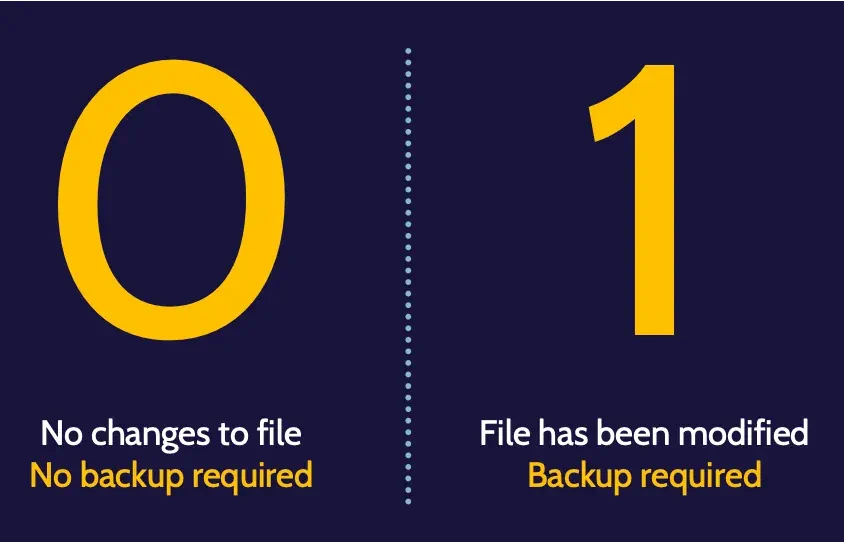

The archive bit is a piece of file metadata that indicates a file's backup status relative to a given backup strategy:

- 0 = no changes to the file; no backup required

- 1 = file has been modified; backup required

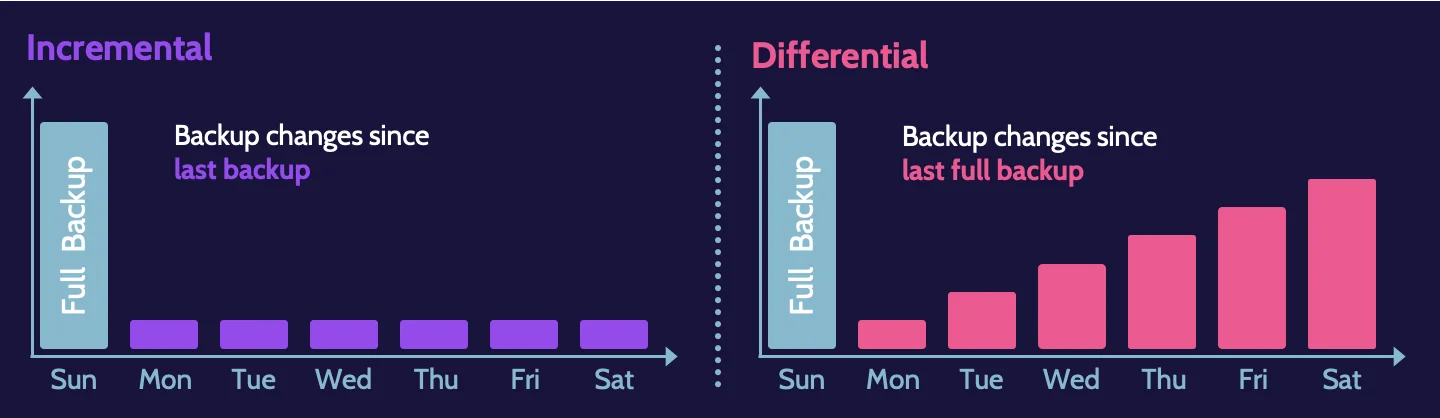

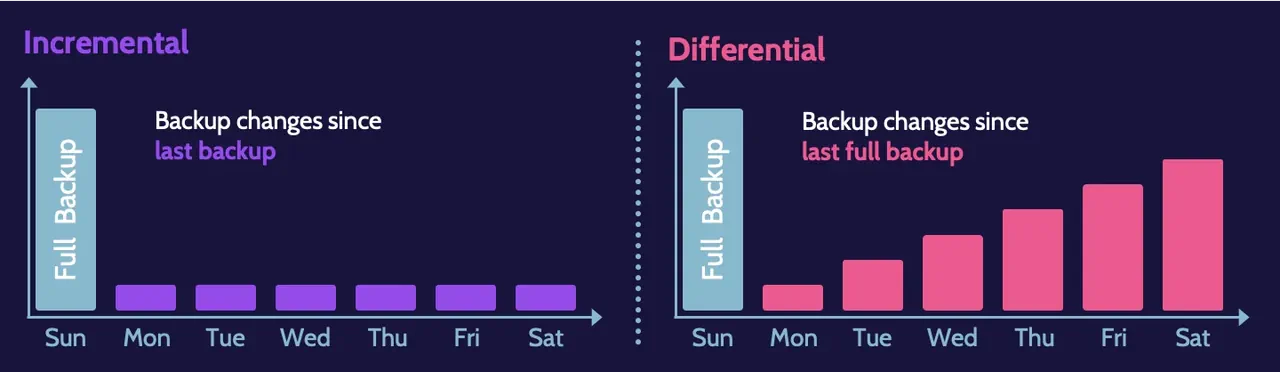

Different backup strategies treat the archive bit differently. A full backup copies every file and clears the archive bit (sets it to 0), while incremental and differential backups do not treat it the same way:

- Incremental backup: copies changes since the last full or incremental backup, then clears the archive bit.

- Differential backup: copies changes since the last full backup and leaves the archive bit set.
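The following sketch simulates how each backup type treats the archive bit, using a dictionary of hypothetical file names mapped to their archive bits (True = 1 = backup required).

```python
def run_backup(archive_bits: dict[str, bool], kind: str) -> list[str]:
    """Back up files per the given strategy and update their archive bits in place.

    archive_bits maps file name -> archive bit (True = 1 = modified, backup required).
    Returns the names of the files that were copied.
    """
    if kind == "full":
        copied = list(archive_bits)                                    # everything
    elif kind in ("incremental", "differential"):
        copied = [name for name, bit in archive_bits.items() if bit]  # changed files only
    else:
        raise ValueError(f"unknown backup type: {kind}")
    if kind in ("full", "incremental"):
        for name in copied:
            archive_bits[name] = False  # clear the bit (set to 0)
    # A differential leaves the bit set, so the next differential copies the file again
    return copied
```

This behavior also drives restore time: restoring from differentials needs the last full backup plus only the latest differential, while restoring from incrementals needs the last full backup plus every incremental since, applied in order.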

Mirror backup

A mirror backup is an exact copy of a data set, created without compression.

Of the three types of backups mentioned, mirror is the fastest to back up and restore, but it requires a tremendous amount of storage; incremental backups require the least.

Backup storage strategies

Some of the most common storage strategies are:

- Offsite storage refers to a place where, for example, backup tapes and copies of other important media can be stored.

- Electronic vaulting usually implies some type of automated tape management system, like a tape jukebox.

- Tape rotation refers to schemes for cycling backup tapes, such as grandfather-father-son (GFS).

Cyclic redundancy check (CRC)

A CRC is a mathematical check value used to verify the integrity of data. CRCs can be used anywhere data resides or travels: on a hard drive, across a network, and even in RAM. Among other uses, a CRC can validate backups to confirm the integrity of the backed-up data.
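Validating a backup with a CRC can be as simple as computing a CRC-32 over the source and the backup and comparing the values. This sketch uses Python's standard `zlib.crc32`; the helper names are hypothetical. Note that a CRC detects accidental corruption, not deliberate tampering; use a cryptographic hash when an adversary might modify the data.

```python
import zlib

def crc32_of(path: str, chunk_size: int = 1 << 20) -> int:
    """CRC-32 of a file, computed incrementally so large backups needn't fit in RAM."""
    crc = 0
    with open(path, "rb") as f:
        while chunk := f.read(chunk_size):
            crc = zlib.crc32(chunk, crc)  # feed the running value into the next chunk
    return crc

def backup_is_intact(source: str, backup: str) -> bool:
    return crc32_of(source) == crc32_of(backup)
```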

Spare parts

It’s important to keep spares of critical system components on hand. Spare parts strategies include:

- Cold spare. Parts sitting on a shelf, perhaps in a storage room.

- Warm spare. Installed in a computer system but not powered, so it isn't immediately available.

- Hot spare. Installed in a system, powered, and available.

Redundant Array of Independent Disks (RAID)

Redundant array of independent disks (RAID) refers to multiple drives being used in unison in a system to achieve greater speed or availability. The most well-known RAID levels are:

- RAID 0—Striping. Significantly speeds up reading and writing data but provides no redundancy.

- RAID 1—Mirroring. Utilizes redundancy to provide reliable availability of data.

- RAID 10—Mirroring and Striping. Requires a minimum of four hard drives and provides the benefits of striping (speed) and mirroring (availability) in one solution; this type of RAID is typically one of the most expensive.

- RAID 5—Parity Protection. Requires a minimum of three hard drives and provides a cost-effective balance between RAID 0 and RAID 1; RAID 5 utilizes a parity bit, computed from an XOR operation, for purposes of storing and restoring data.
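RAID 5's parity works because XOR is its own inverse: the parity block is the XOR of the data blocks in a stripe, so any one missing block can be rebuilt by XORing the survivors. This sketch shows a single stripe on a hypothetical three-disk set; real RAID 5 also rotates the parity block across disks.

```python
from functools import reduce

def xor_blocks(*blocks: bytes) -> bytes:
    """Byte-wise XOR of equal-length blocks (the RAID 5 parity calculation)."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

# One stripe on a hypothetical three-disk RAID 5 set: two data blocks plus parity.
disk0 = b"HELLO"
disk1 = b"WORLD"
parity = xor_blocks(disk0, disk1)  # stored on the third disk

# Suppose disk1 fails: XOR the surviving blocks to rebuild it.
rebuilt = xor_blocks(disk0, parity)
print(rebuilt)  # b'WORLD'
```

Losing a second disk before the rebuild finishes leaves too few survivors to solve for the missing data, which is why RAID 5 tolerates only one failed drive.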

Clustering and redundancy

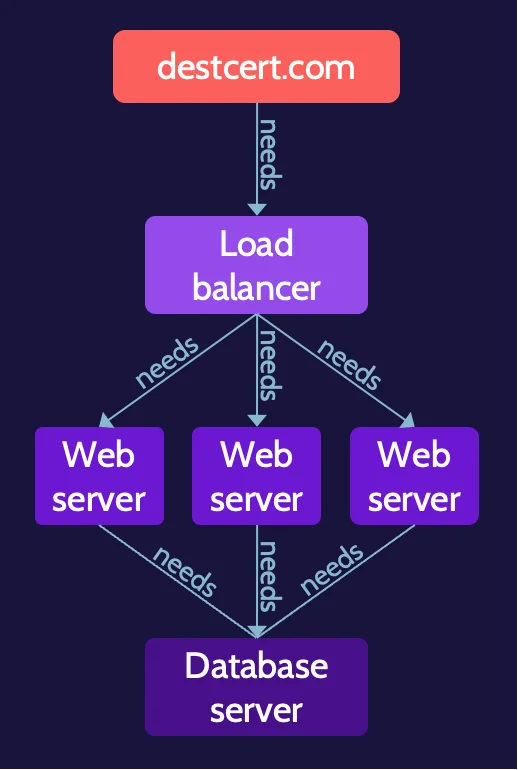

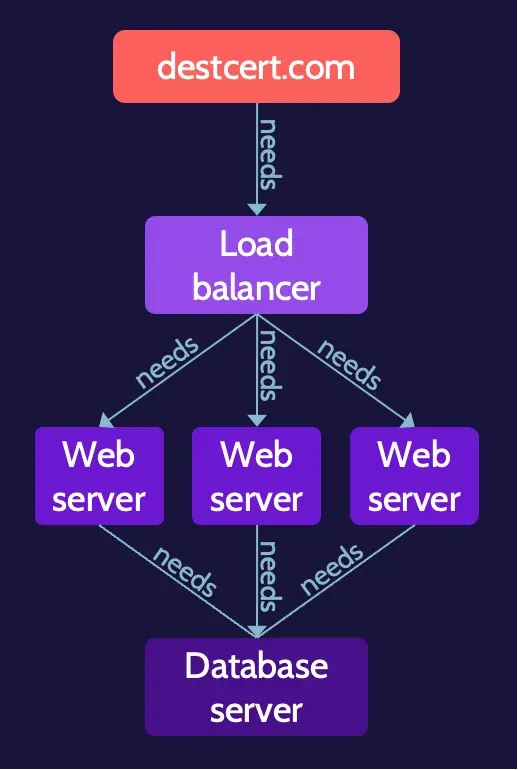

Clustering refers to a group of systems working together to handle a load. This is often seen in the context of web servers that support a website. Typically, incoming traffic will be managed by a load balancer that distributes requests to multiple web servers, the cluster.

Redundancy also involves a group of systems, but unlike a cluster, where all the members work together, redundancy typically involves a primary system and a secondary system. The primary system does all the work, while the secondary system is in standby mode. If the primary system fails, activity can fail over to the secondary.

Both clustering and redundancy include high availability as a by-product of their configuration.

Recovery site strategies

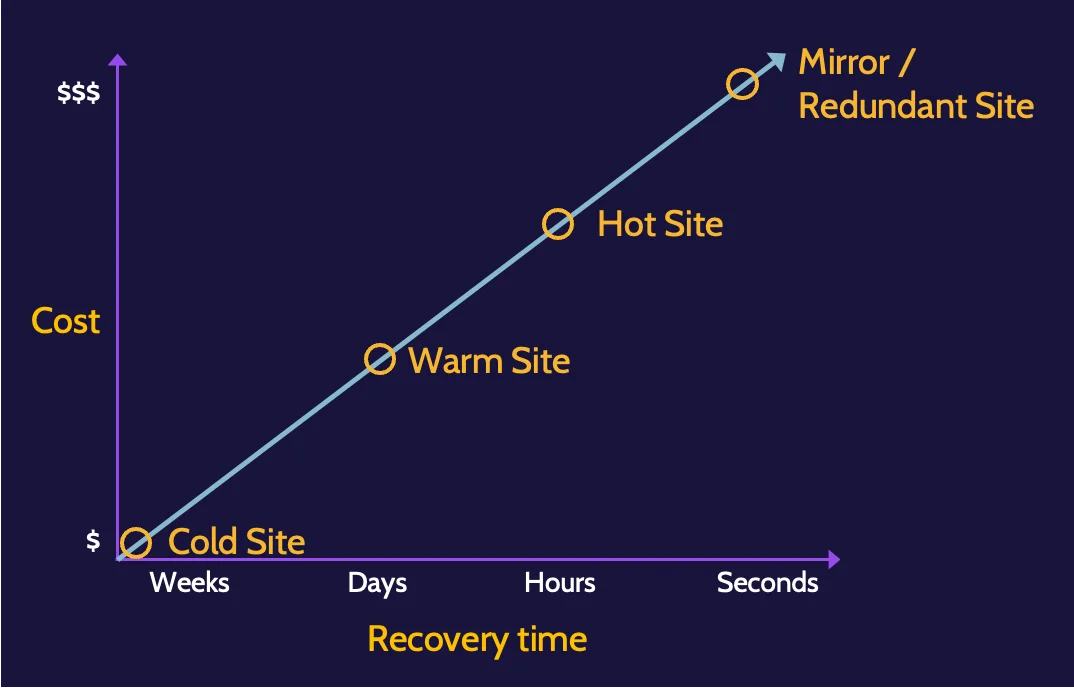

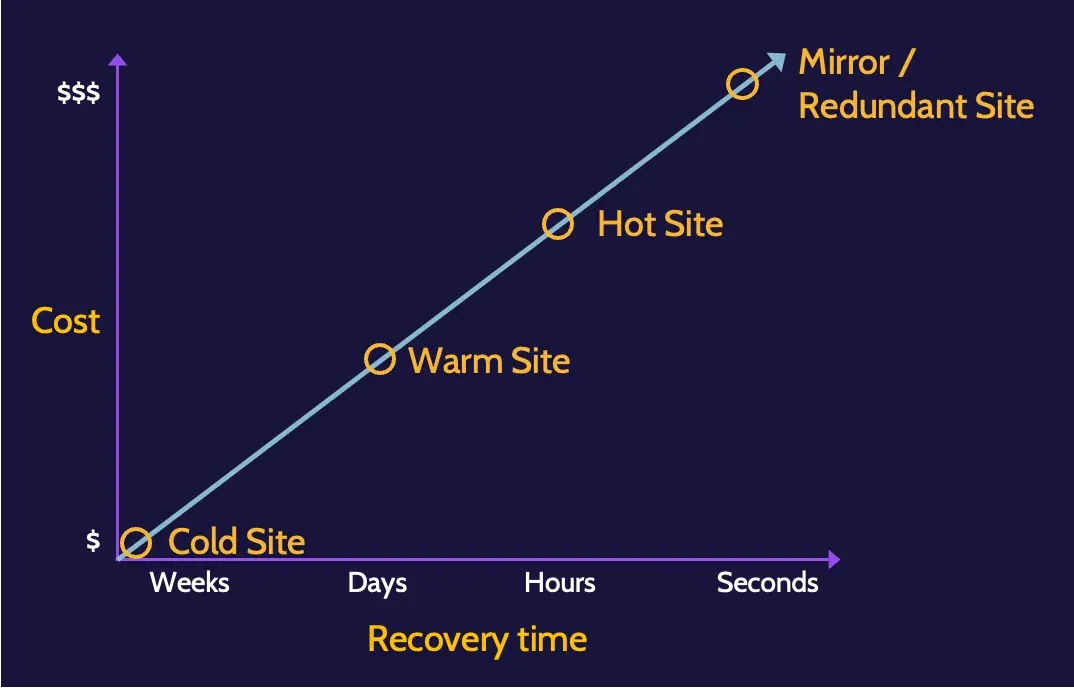

Recovery site strategies consider multiple elements of an organization—people, data, infrastructure, and cost, to name a few examples—as well as factors like availability and location.

Time to recover and cost are important factors for each strategy. A cold site is relatively inexpensive, but it takes a relatively long time to bring online.

A warm site can be brought online faster than a cold site because, in addition to the shell of a building, basic equipment is already installed.

A mirrored or redundant site, on the other hand, can be brought online very quickly, but this type of site is also extremely expensive.

Geographic remoteness and geographic disparity refer to where a recovery site is located relative to the primary site. The idea is that whatever brought the primary site down should not also affect the recovery site.

Internal recovery sites are owned by the organization, while external recovery sites are owned by a service provider.

Multiple processing sites means that key business functionality is performed at more than one site.

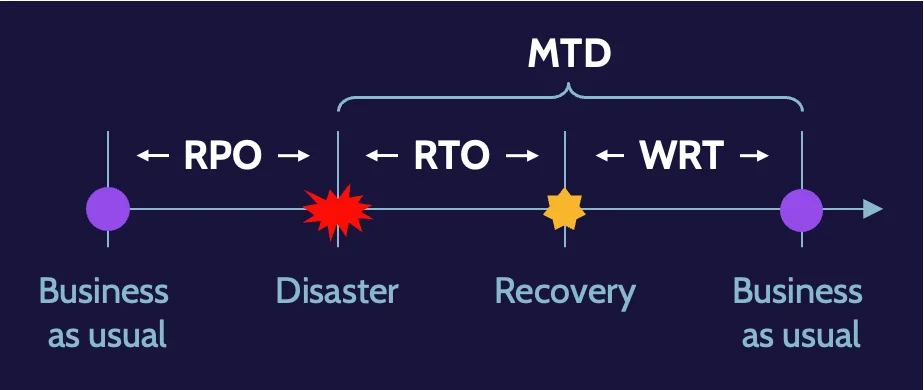

RPO and RTO

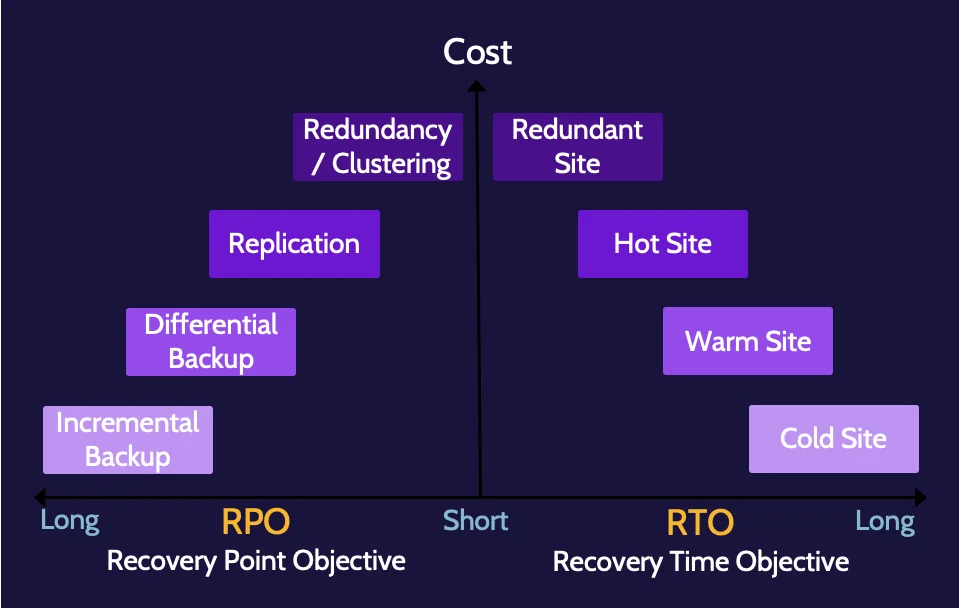

RPO stands for recovery point objective, and it refers to how much data an organization could afford to lose.

RTO stands for recovery time objective, and it refers to how long it takes an organization to move from the time of disaster to the time of operating at a defined service level.

The business impact analysis (BIA) is the process that helps an organization identify its most critical functions, services, assets, systems, and processes. RPO and RTO are subsequently determined to understand how much data and how quickly these systems and processes need to be recovered.

7.11 Implement disaster recovery (DR) processes

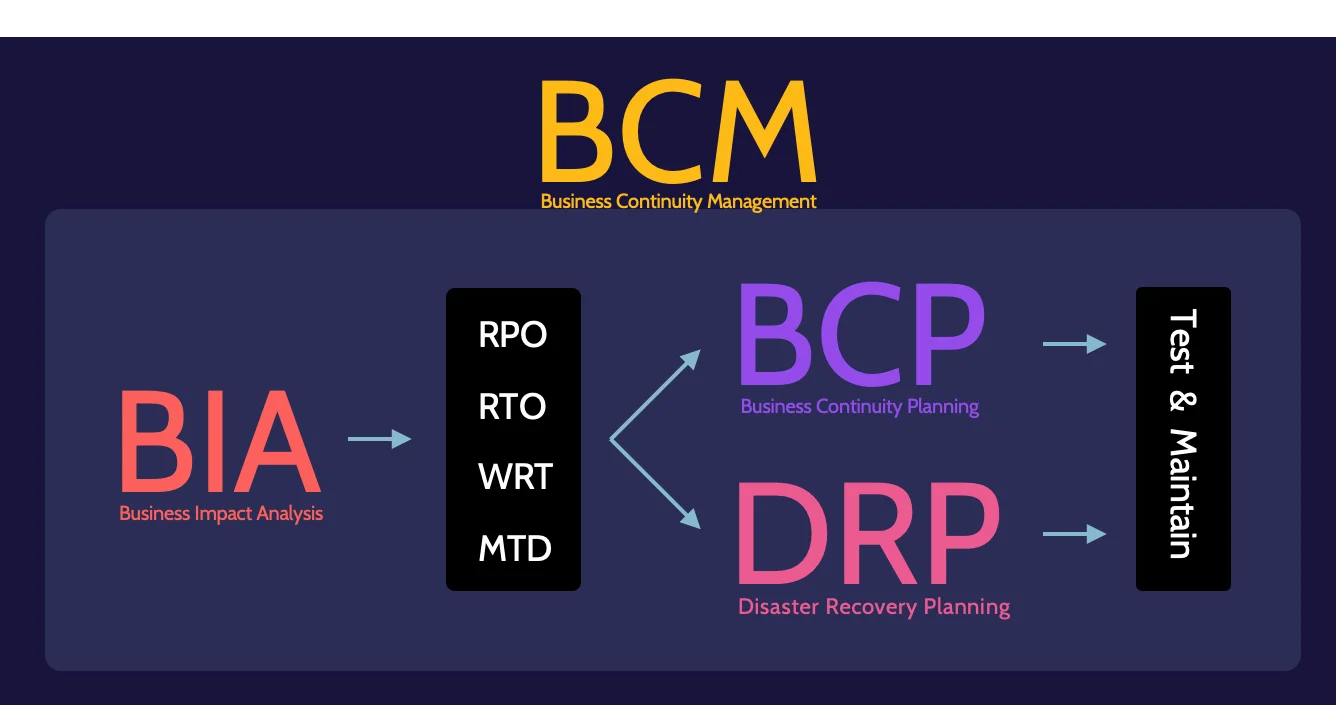

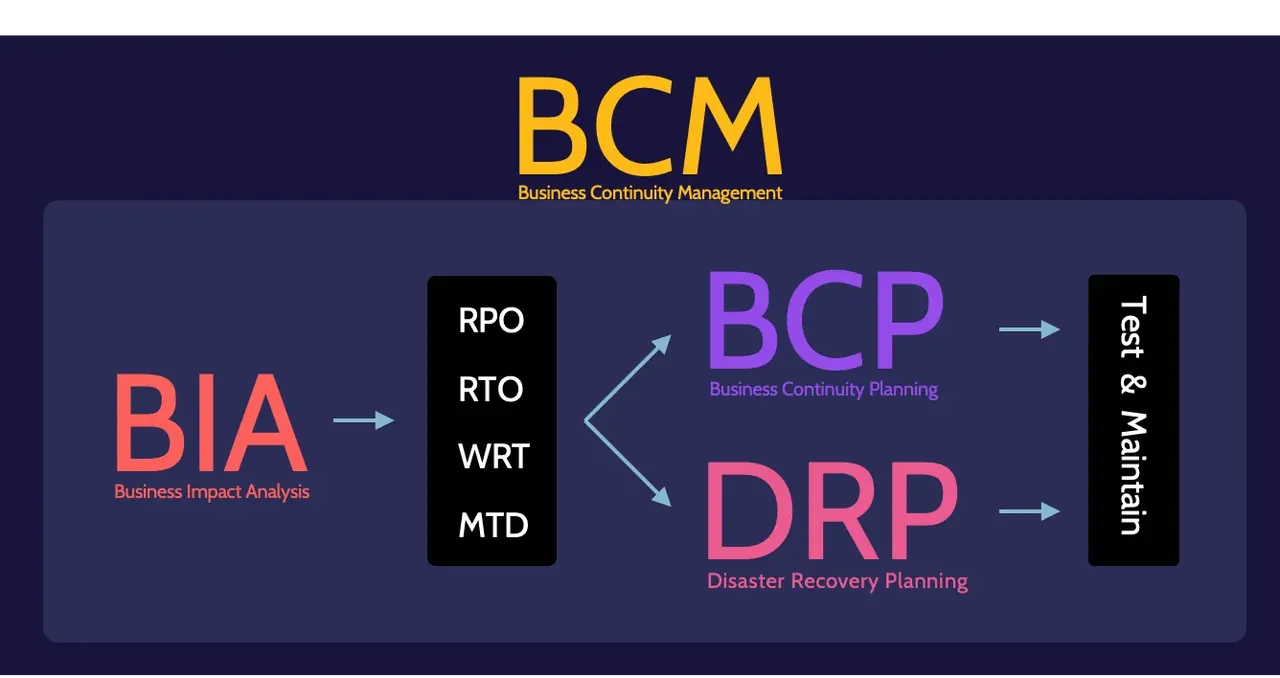

BCM, BCP, and DRP

The processes described in this topic all help mitigate the effects of a disaster, preserving as much value as possible.

Business Continuity Management (BCM), Business Continuity Planning (BCP), and Disaster Recovery Planning (DRP) are ultimately used to achieve the same goal—the continuity of the business and its critical and essential functions, processes, and services.

- BCM is the process and function by which an organization is responsible for creating, maintaining, and testing BCP and DRP plans.

- BCP focuses on the survival of business processes when something unexpected impacts them.

- DRP focuses on the recovery of vital technology infrastructure and systems.

The key BCP/DRP steps are:

- Develop contingency planning policy

- Conduct BIA

- Identify controls

- Create contingency strategies

- Develop contingency plan

- Ensure testing, training, and exercises

- Maintenance

RPO, RTO, WRT, and MTD

When dealing with BCP and DRP procedures, there are four key measurements of time to be aware of:

- RPO = max tolerable data loss measured in time

- RTO = max tolerable time to recover systems to a defined service level

- WRT = max tolerable time to verify system and data integrity as part of the resumption of normal ops

- MTD/MAD = max time a critical system, function, or process can be disrupted before the business suffers unacceptable or irrecoverable consequences.

The shorter the RPO and RTO requirements, the more significant the cost becomes.
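A commonly taught consistency check is that recovery plus verification must fit within the MTD, that is, RTO + WRT ≤ MTD. This sketch encodes that check; the class name and the figures in the example are hypothetical. RPO is left out because it measures data loss, not downtime.

```python
from dataclasses import dataclass

@dataclass
class RecoveryTargets:
    name: str
    rto_hours: float  # time to restore the system to a defined service level
    wrt_hours: float  # time to verify system and data integrity afterward
    mtd_hours: float  # maximum tolerable downtime for the business function

    def fits_within_mtd(self) -> bool:
        # Recovery plus verification must finish before the MTD is reached.
        return self.rto_hours + self.wrt_hours <= self.mtd_hours
```

For example, a function with a 4-hour RTO, a 2-hour WRT, and an 8-hour MTD passes, while one needing 24 hours to recover and 12 to verify against a 24-hour MTD does not, signaling that a faster (and costlier) recovery strategy is needed.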

Business Impact Analysis (BIA)

The purpose of a business impact analysis (BIA) is to predict the consequences of a disaster or disruption to business processes and functions, express those consequences as time parameters, and gather the information needed to develop recovery strategies for each critical and essential function and process.

BIA process identifies:

- The most critical/essential business functions, processes, and systems.

- The potential impacts of an interruption resulting from a disaster.

- The key measurements of time (RPO, RTO, WRT, and MTD) for each critical function, process, and system.

These are the steps in the BIA process:

- Determine mission/business processes and recovery criticality

- Identify resource requirements

- Identify recovery priorities for system resources

Disaster response process

A disaster should be declared when the maximum tolerable downtime (MTD) is going to be exceeded. At that point, the disaster response process and response team should be activated.

Disaster response should include all personnel and resources necessary to respond quickly to the situation and restore normal operations. The response team should include stakeholders from throughout the organization.

Disaster communications are critical and should include all relevant stakeholders.

Restoration order

The BIA determines restoration order when recovering systems—the most important and critical should be recovered first. Dependency charts and mapping can help inform system restoration order.

After declaring a disaster, the most critical systems should be brought online at a recovery site. Later, as the primary site is rebuilt and ready to host business operations again, the least critical processes and systems should be restored there first, to make sure the site is working properly before critical operations move back.
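Dependency charts translate naturally into a topological ordering. This sketch assumes the dependency map and a numeric criticality score per system (higher means more critical, taken from the BIA) are already known; it restores dependencies first and, among systems that are ready, the most critical first. It uses Python's standard `graphlib` module (Python 3.9+); the function and system names are hypothetical.

```python
import heapq
from graphlib import TopologicalSorter

def restoration_order(depends_on: dict[str, set[str]], criticality: dict[str, int]) -> list[str]:
    """depends_on maps each system to the systems it needs running first."""
    sorter = TopologicalSorter(depends_on)
    sorter.prepare()  # raises graphlib.CycleError if dependencies are circular
    ready: list[tuple[int, str]] = []  # heap keyed on negated criticality (most critical first)
    order = []
    while sorter.is_active():
        for system in sorter.get_ready():  # systems whose dependencies are all restored
            heapq.heappush(ready, (-criticality.get(system, 0), system))
        _, system = heapq.heappop(ready)
        order.append(system)
        sorter.done(system)
    return order

# Hypothetical environment
deps = {"erp_app": {"database", "directory"}, "database": {"storage"},
        "directory": {"network"}, "storage": {"network"}, "email": {"directory"}}
crit = {"network": 5, "erp_app": 5, "database": 4, "directory": 4, "storage": 4, "email": 3}
```

Here `email` becomes restorable early but is deferred until the more critical ERP chain is back. For the move back to the primary site, which starts with the least critical systems, using `criticality.get(system, 0)` instead of its negation as the heap key would reverse the preference while still respecting dependencies.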

7.12 Test disaster recovery plans (DRP)

7.12.1 BCP and DRP testing

After recovery plans have been created, it’s imperative to test them. DRP testing is a critical component of plan creation and development.

The following table summarizes the DRP test types:

| Type | Description | Affects backup/parallel systems | Affects production systems |
|---|---|---|---|
| Read-through/Checklist | The author reviews the DR plan against a standard checklist for missing components and completeness | No | No |
| Walkthrough | Relevant stakeholders walk through the plan and provide input based on their expertise | No | No |
| Simulation | Stakeholders follow the plan based on a simulated disaster scenario, stopping short of affecting systems or data | No | No |
| Parallel | Test the DR plan at the recovery site/on parallel systems | Yes | No |
| Full-interruption | Interrupt production as if an actual disaster had occurred and follow the DR plan to restore systems and data | Yes | Yes |

Goals of Business Continuity Management (BCM)

BCM includes three primary goals:

- Safety of people

- Minimization of damage

- Survival of business

BCM should focus on the most critical and essential functions of the business.

Security training and awareness programs, emergency response and management training, and physical security and access control all help keep people safe.

Prepare to pass the CISSP exam

We have everything you need to pass the CISSP exam. This article covers some of the most important points of Domain 7, and you can also benefit from our decades of experience, deep involvement with ISC2, and our intelligent learning system as you prepare for your exam.

Enroll now in our CISSP MasterClass. Your cybersecurity path begins here.