The Importance of Logging and Monitoring in CISSP Domain 6


Transcript

Introduction

Hey, I’m Rob Witcher from Destination Certification, and I’m here to help YOU pass the CISSP exam. We are going to go through a review of the major topics related to Log Review & Analysis in Domain 6, to understand how they interrelate, and to guide your studies.

This is the third of three mind map videos for domain 6. I have included links to the other MindMap videos in the description below. These MindMaps are one part of our complete CISSP MasterClass.

Logging events from multiple systems, then aggregating and analyzing that data (in other words, logging and monitoring) is an important part of security assessment.

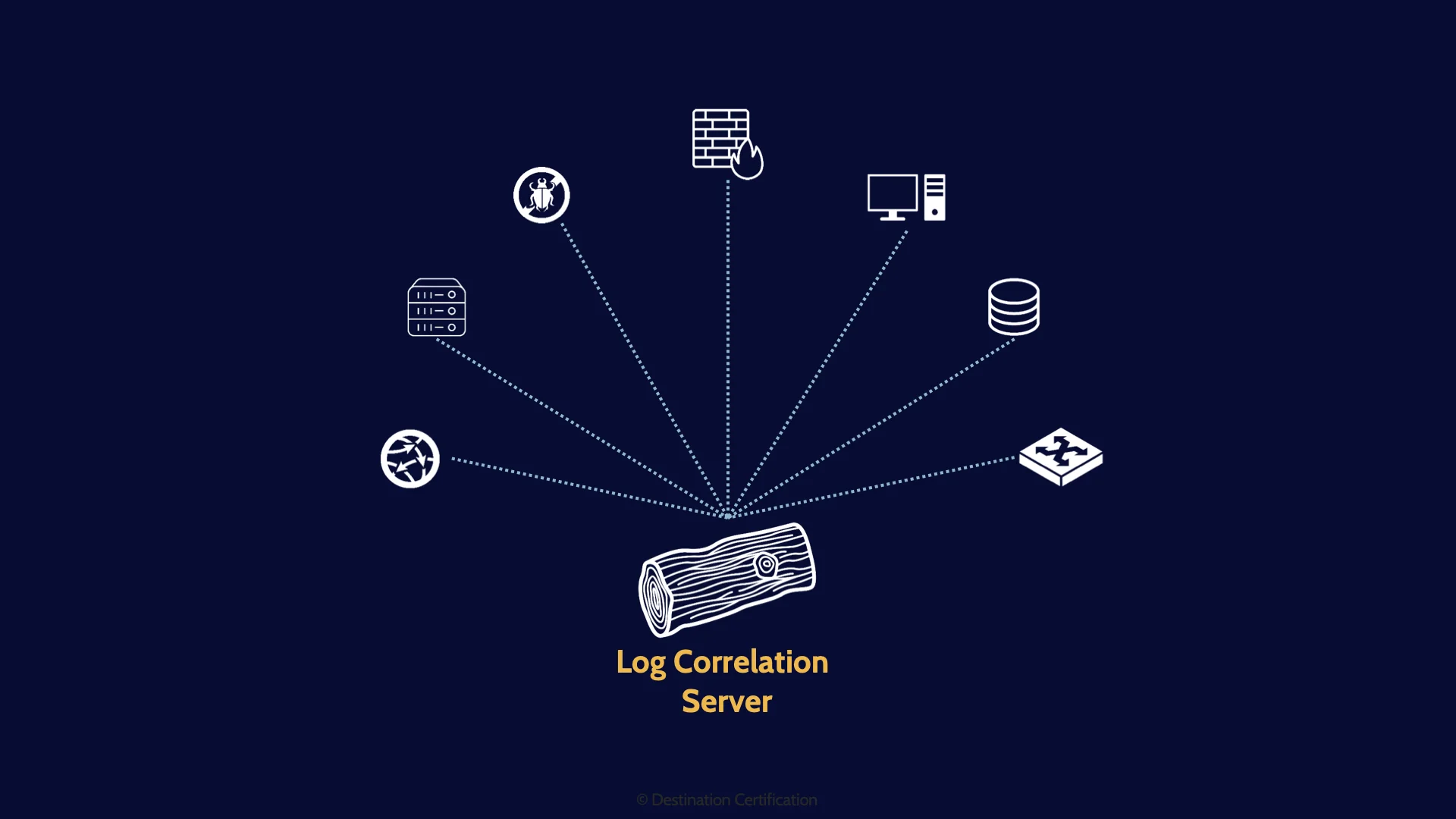

Where can we collect logging data from across the organization? The answer is essentially everywhere. Almost every system can generate log event data:

- Network devices like firewalls, routers, and switches

- IDS & IPS: Intrusion Detection Systems and Intrusion Prevention Systems

- Servers, desktops, laptops

- Operating systems

- Applications

- Anti-malware

- Etc etc.

We must be selective, though. Many systems are capable of generating an avalanche of event data, so we need to configure systems to log only what is relevant.

We also need the capability to review all the logging event data that is being generated, ideally as close to real time as possible. It’s not super ideal to review your logs and realize you’ve had a significant breach months after it has occurred.

And what are we looking for when analyzing the logs? Errors and anomalies.

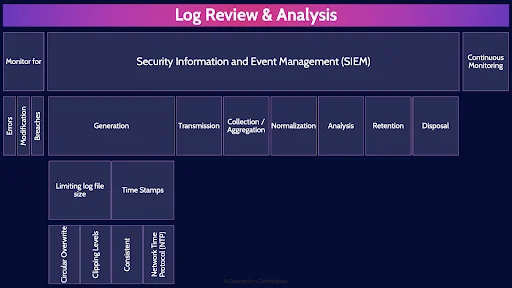

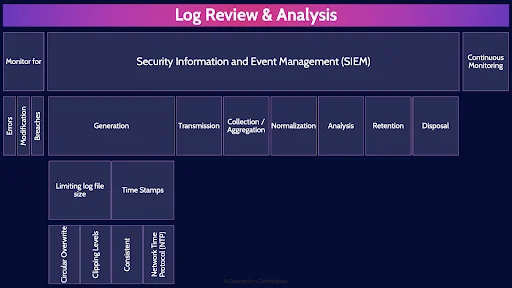

Log Review & Analysis

Monitor for

More specifically, what exactly are we monitoring for?

Errors

Errors. For example, if our web server is generating many Error 404 (file not found) messages, that is a clear indication that something on the web server is broken and needs to be fixed.
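To make that concrete, here is a minimal sketch of counting 404 responses per requested path. The sample lines, the Common Log Format layout, and the names `SAMPLE_LOG`, `LINE_PATTERN` and `count_not_found` are all assumptions for illustration, not something from a particular web server.

```python
import re
from collections import Counter

# Hypothetical sample lines in Common Log Format; not taken from a real server.
SAMPLE_LOG = """\
203.0.113.5 - - [10/Mar/2024:13:55:36 +0000] "GET /index.html HTTP/1.1" 200 2326
203.0.113.5 - - [10/Mar/2024:13:55:40 +0000] "GET /old-page.html HTTP/1.1" 404 153
198.51.100.7 - - [10/Mar/2024:13:56:02 +0000] "GET /old-page.html HTTP/1.1" 404 153
198.51.100.7 - - [10/Mar/2024:13:56:09 +0000] "GET /img/logo.png HTTP/1.1" 404 153
"""

# Captures the request path and the three-digit status code.
LINE_PATTERN = re.compile(r'"[A-Z]+ (?P<path>\S+) [^"]*" (?P<status>\d{3}) ')


def count_not_found(log_text):
    """Return a Counter of request paths that produced a 404 status."""
    missing = Counter()
    for line in log_text.splitlines():
        match = LINE_PATTERN.search(line)
        if match and match.group("status") == "404":
            missing[match.group("path")] += 1
    return missing


not_found = count_not_found(SAMPLE_LOG)
print(not_found.most_common())
```

A path that keeps showing up in this count is a good candidate for the "something is broken" investigation.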

Modification

Modifications – more specifically, unauthorized modifications. It is not uncommon for attackers to exploit a vulnerability to break into a system, and then patch that vulnerability behind themselves – after they have installed something like a backdoor. Therefore, looking for unauthorized patching of a system may be an indication of a breach.

Breaches

And of course, from a security perspective, one of the main things we are monitoring for is whether any of our systems have been breached: whether they are being used for cryptocurrency mining, whether data exfiltration is occurring, or whether we are about to have a bad time with ransomware.

As I mentioned, one of the major challenges is the plethora of devices and systems that can generate log data across an organization, and the volume of event data that they can produce. It is very much the challenge of looking for a needle in a haystack. Accordingly, we need to use systems that automate many of the tasks and analysis required for logging and monitoring.

Security Information and Event Management (SIEM)

These systems are commonly referred to as SIEMs: Security Information and Event Management systems.

Generation

Before we can begin feeding data into a SIEM system, we first need to enable logging on devices across the environment so that we are generating log data.

Limiting log file size

Something we have to be careful about, though, is limiting log file sizes on these endpoint devices, such as firewalls, routers, switches, etc. Many of these devices can generate a lot of data but have very limited onboard storage for this log data. We therefore need a couple of methods to limit log file sizes.

Circular Overwrite

Circular overwrite is the idea that you set a maximum log file size, say 10 MB or 10,000 lines, and then begin writing log event data. When the system reaches that maximum, it will circle back to the top of the log file and begin overwriting until it reaches the maximum file size again, and then circle back yet again. Rinse & repeat.
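Here is a minimal sketch of that behavior, using an in-memory ring buffer rather than a real log file. The limit of 5 entries and the names `MAX_ENTRIES` and `log_buffer` are assumptions chosen to keep the example small.

```python
from collections import deque

MAX_ENTRIES = 5  # hypothetical limit; a real device might cap at thousands of lines

# A deque with maxlen discards the oldest entry whenever a new one is appended
# to a full buffer, which has the same effect as circling back and overwriting.
log_buffer = deque(maxlen=MAX_ENTRIES)

for event_number in range(1, 9):
    log_buffer.append(f"event {event_number}")

# Only the five most recent events survive; events 1 to 3 were overwritten.
print(list(log_buffer))
```

Notice the trade-off: storage stays bounded, but the oldest events are gone for good once they are overwritten.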

Clipping Levels

Clipping levels are about setting a threshold. Below the threshold, log nothing; at or above the threshold, begin logging. For example, we typically don’t care about one or two failed login events, because we all mistype our password occasionally. But 10 failed login attempts in quick succession, or 50, or 10,000? We definitely care about that. Someone is trying to brute force a password. So, we could set the threshold at, say, 3 failed login attempts. Below 3, nothing is logged. 3 or more, we log it.
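The threshold idea can be sketched in a few lines. This is a simplified illustration: it counts failures per user with no time window, and the function name `apply_clipping_level` and the sample user names are hypothetical.

```python
from collections import defaultdict

CLIPPING_LEVEL = 3  # the threshold from the example above


def apply_clipping_level(failed_login_users, threshold=CLIPPING_LEVEL):
    """Return log entries only for failures at or above the threshold."""
    failure_counts = defaultdict(int)
    logged = []
    for user in failed_login_users:
        failure_counts[user] += 1
        # Below the clipping level nothing is recorded at all.
        if failure_counts[user] >= threshold:
            logged.append(f"failed login #{failure_counts[user]} for {user}")
    return logged


# alice fails twice (never logged); bob fails four times (logged from the third).
attempts = ["alice", "bob", "alice", "bob", "bob", "bob"]
logged_events = apply_clipping_level(attempts)
print(logged_events)
```

A real system would most likely also reset the counter after a successful login or after some quiet period, which this sketch leaves out.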

Time Stamps

Another important consideration when generating log data is the timestamp for each log event.

Consistent

We need consistent timestamps. We need timestamps in the same format: say year, month, day, and 24-hour clock. This way we can more easily correlate events from different systems, because they have consistent timestamps.
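As a rough illustration, the sketch below converts three differently formatted timestamps into one year-month-day, 24-hour format. The three input formats and the name `to_consistent_timestamp` are assumptions, and the code assumes every source already reports the same time zone.

```python
from datetime import datetime

# Hypothetical formats used by three different devices.
KNOWN_FORMATS = [
    "%Y-%m-%d %H:%M:%S",     # 2024-03-10 13:55:36
    "%d/%b/%Y:%H:%M:%S",     # 10/Mar/2024:13:55:36
    "%m/%d/%Y %I:%M:%S %p",  # 03/10/2024 01:55:36 PM
]


def to_consistent_timestamp(raw):
    """Parse a timestamp in any known format and return YYYY-MM-DD HH:MM:SS."""
    for fmt in KNOWN_FORMATS:
        try:
            parsed = datetime.strptime(raw, fmt)
        except ValueError:
            continue  # not this format, try the next one
        return parsed.strftime("%Y-%m-%d %H:%M:%S")
    raise ValueError(f"unrecognized timestamp format: {raw!r}")


for raw in ["2024-03-10 13:55:36", "10/Mar/2024:13:55:36", "03/10/2024 01:55:36 PM"]:
    print(to_consistent_timestamp(raw))
```

All three inputs describe the same instant, and after conversion they are directly comparable as text.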

Network Time Protocol (NTP)

We also need the clocks in all our systems across the environment to be synchronized. It is very difficult to trace how an attacker traversed a network if one system’s clock is 3 seconds slow, another is 5 seconds fast, and another’s date is set to 1979. There is a protocol we can use to synchronize all our system clocks: NTP, the Network Time Protocol.
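To see why unsynchronized clocks hurt, consider this small sketch. It is not NTP itself. It only shows how clock error can reorder events, using made-up hosts, made-up events, and clock errors that we pretend to know exactly (in practice you would not know them, which is the whole reason to synchronize clocks).

```python
from datetime import datetime, timedelta

# Hypothetical events as recorded by each host's own, unsynchronized clock.
events = [
    {"host": "fw-01", "recorded": datetime(2024, 3, 10, 13, 0, 4)},
    {"host": "web-01", "recorded": datetime(2024, 3, 10, 13, 0, 2)},
    {"host": "db-01", "recorded": datetime(2024, 3, 10, 13, 0, 12)},
]

# Assumed clock errors in seconds: positive means the host's clock runs fast.
clock_error_seconds = {"fw-01": 0, "web-01": -3, "db-01": 5}


def order_of_hosts(event_list, corrections=None):
    """Return host names sorted by event time, optionally removing clock error."""
    corrections = corrections or {}

    def effective_time(event):
        error = timedelta(seconds=corrections.get(event["host"], 0))
        return event["recorded"] - error

    return [event["host"] for event in sorted(event_list, key=effective_time)]


raw_order = order_of_hosts(events)
corrected_order = order_of_hosts(events, clock_error_seconds)
print("as recorded:", raw_order)
print("corrected:  ", corrected_order)
```

Going by the recorded timestamps, the web server event appears to happen before the firewall event. Once the clock errors are removed, the firewall event comes first, which is the path the traffic actually took in this made-up scenario.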

Transmission

When a log event is generated on any device in the environment, we want to transmit that event data in real time to our SIEM system.
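One common way to forward events as they happen is syslog. The sketch below is only an illustration under several assumptions: it uses Python's standard `logging.handlers.SysLogHandler` over UDP, a plain UDP socket on the loopback interface stands in for the central collector, and the logger name `edge-device` and the message are hypothetical.

```python
import logging
import logging.handlers
import socket

# Stand-in for the central collector: a UDP socket on the loopback interface.
collector = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
collector.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
collector.settimeout(2.0)
collector_address = collector.getsockname()

# The "device" side: every log call is sent to the collector immediately.
logger = logging.getLogger("edge-device")
logger.setLevel(logging.INFO)
logger.propagate = False
handler = logging.handlers.SysLogHandler(address=collector_address)
logger.addHandler(handler)

logger.warning("failed login for user alice")

# The collector receives the event as a single syslog datagram.
received, _sender = collector.recvfrom(4096)
print(received.decode("utf-8", errors="replace"))

logger.removeHandler(handler)
handler.close()
collector.close()
```

Plain UDP syslog is neither encrypted nor guaranteed to arrive, so treat this as a picture of the data flow rather than a recommended transport.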

Collection / Aggregation

Our SIEM system collects and aggregates all this event data from across the environment in one central system.

Normalization

Next, the SIEM system will normalize the data: it cleans up the event data from disparate devices so that all the data, the variables, are comparable.
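Here is a minimal sketch of what normalization can look like. The two device types, their field names (`src`, `act`, `client_ip`, `result`), the common schema, and the `OUTCOME_ALIASES` table are all hypothetical; real products define their own schemas.

```python
# Different devices use different words for the same outcome.
OUTCOME_ALIASES = {
    "permit": "allowed",
    "accept": "allowed",
    "deny": "denied",
    "blocked": "denied",
}


def normalize(device_type, record):
    """Map a device-specific record onto one common set of field names."""
    if device_type == "firewall":
        source_ip, outcome = record["src"], record["act"]
    elif device_type == "vpn":
        source_ip, outcome = record["client_ip"], record["result"]
    else:
        raise ValueError(f"no normalizer for device type: {device_type!r}")

    outcome = outcome.strip().lower()
    return {
        "device_type": device_type,
        "source_ip": source_ip,
        "outcome": OUTCOME_ALIASES.get(outcome, outcome),
    }


firewall_event = normalize("firewall", {"src": "203.0.113.5", "act": "DENY"})
vpn_event = normalize("vpn", {"client_ip": "203.0.113.5", "result": "Blocked"})
print(firewall_event)
print(vpn_event)
```

After normalization, both events carry the same `source_ip` and `outcome` fields, so they can be compared and correlated directly.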

Analysis

With the data normalized, the SIEM system can now analyze all the event data that is pouring in to look for that proverbial needle in the haystack. The SIEM system will apply various analysis techniques, such as event correlation, statistical models, and rules, to look for errors and anomalies.
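As one illustration of event correlation, the sketch below flags any source IP that fails to log in on several different hosts within a short window. The rule itself, the thresholds (`min_hosts=3`, a 5-minute window), and the sample events are assumptions made up for this example, not a rule from any particular SIEM product.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def correlate_failed_logins(events, min_hosts=3, window=timedelta(minutes=5)):
    """Flag source IPs with failed logins on min_hosts distinct hosts in the window."""
    failures_by_source = defaultdict(list)
    for event in events:
        if event["outcome"] == "failed":
            failures_by_source[event["source_ip"]].append(event)

    flagged = set()
    for source_ip, failures in failures_by_source.items():
        failures.sort(key=lambda e: e["time"])
        for i, first in enumerate(failures):
            # Distinct hosts hit within the window that starts at this failure.
            hosts = {e["host"] for e in failures[i:] if e["time"] - first["time"] <= window}
            if len(hosts) >= min_hosts:
                flagged.add(source_ip)
                break
    return flagged


sample_events = [
    {"source_ip": "203.0.113.5", "host": "web-01", "outcome": "failed", "time": datetime(2024, 3, 10, 13, 0)},
    {"source_ip": "203.0.113.5", "host": "db-01", "outcome": "failed", "time": datetime(2024, 3, 10, 13, 1)},
    {"source_ip": "203.0.113.5", "host": "mail-01", "outcome": "failed", "time": datetime(2024, 3, 10, 13, 2)},
    {"source_ip": "198.51.100.7", "host": "web-01", "outcome": "failed", "time": datetime(2024, 3, 10, 13, 0)},
    {"source_ip": "198.51.100.7", "host": "web-01", "outcome": "failed", "time": datetime(2024, 3, 10, 13, 30)},
]

flagged_sources = correlate_failed_logins(sample_events)
print(flagged_sources)
```

No single host sees anything alarming here. The pattern only becomes visible once events from all three hosts sit in one place, which is the point of aggregating before analyzing.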

Retention

SIEM systems will also retain log event data for long-term storage to enable longitudinal analysis and tracking, and to meet contractual or regulatory requirements for log retention.

Disposal

And finally, when event data no longer needs to be retained, it can be securely and defensibly destroyed.
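Retention and disposal together come down to a cutoff date. The sketch below only selects which records have passed a retention period; the 365-day period, the record layout, and the name `split_by_retention` are hypothetical, and the secure destruction itself is a separate procedure that is not shown.

```python
from datetime import date, timedelta

RETENTION_DAYS = 365  # hypothetical policy; the real period comes from contracts or regulation


def split_by_retention(records, today, retention_days=RETENTION_DAYS):
    """Return (keep, dispose): records inside and outside the retention period."""
    cutoff = today - timedelta(days=retention_days)
    keep = [record for record in records if record["logged_on"] >= cutoff]
    dispose = [record for record in records if record["logged_on"] < cutoff]
    return keep, dispose


records = [
    {"id": 1, "logged_on": date(2022, 12, 31)},
    {"id": 2, "logged_on": date(2024, 1, 1)},
]

keep, dispose = split_by_retention(records, today=date(2024, 3, 10))
print("keep:", [record["id"] for record in keep])
print("dispose:", [record["id"] for record in dispose])
```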

Continuous Monitoring

Continuous Monitoring, sometimes referred to as Continuous Security Monitoring (CSM), is the process where an organization identifies all its systems, identifies the risks associated with each system, applies the appropriate controls to mitigate those risks, and then continuously monitors the controls to assess their effectiveness against the ever-changing threat landscape.

And that is an overview of Logging and Monitoring within Domain 6, covering the most critical concepts you need to know for the exam.

Want to learn three of the most common mistakes people make when preparing for the CISSP exam - and of course, most importantly, how to avoid these mistakes?

If the answer is yes, you should check out our free guide here: Avoid these 3 CISSP Exam Pitfalls (Free Guide!) https://destcert.com/3-mistakes-to-avoid-mmyt/

Link in the description below as well.

If you found this video helpful you can hit the thumbs up button and if you want to be notified when we release additional videos in this MindMap series, then please subscribe and hit the bell icon to get notifications.

I will provide links to the other MindMap videos in the description below.

Thanks very much for watching! And all the best in your studies!